Licensed under a Creative Commons Attribution-NoDerivs 3.0 Unported License.

The views expressed in the Shorenstein Center Fellows Research Paper Series are those of the author(s) and do not necessarily reflect those of Harvard Kennedy School or of Harvard University. Fellows Research Papers have not undergone formal review and approval. Such papers are included in this series to elicit feedback and to encourage debate on important issues and challenges in media, politics and public policy. Copyright belongs to the author(s). Papers may be downloaded for personal use only.


The Fight Against Disinformation in the U.S.: A Landscape Analysis maps the disinformation initiatives currently working to undergird newsrooms and improve media literacy at this challenging moment for the free press. Universities, platforms, foundations, and private donors are all working to understand how disinformation spreads like a contagion through this viral digital culture, and to discover potential solutions.

In an effort to discover who is stepping up, what they are doing and where they are making early headway, the work is organized into four categories:

- Internal Platform Efforts

- Institutional Initiatives

- Upstart Initiatives

- Leading Funders

How is Disinformation Changing Us?

The iPhone is 10 years old, Twitter is 12, and Facebook is 14. History has shown that the medium is the message and that cataclysmic changes in how we communicate bring dramatic shifts in our society. Now, a decade later, society has begun to ask, “how different is this moment in time from others?”

Some long predicted this change, and their prescience is back in vogue. Neil Postman, who was a visiting Lombard Professor at the Shorenstein Center in 1991, wrote Amusing Ourselves to Death: Public Discourse in the Age of Show Business, in which he clearly described his concern about the new, emerging world of pictures, led by television, that gave way to this digital age. He feared the shift from text to pictures would fundamentally change the way we communicate and thus change our culture and politics. He believed this change in content and form would drown us in a sea of irrelevance and kill any semblance of civil and intellectual society. In his seminal 1985 work, Postman carefully describes how culture and politics change dramatically with pivotal shifts in communication. A headline last year in Paste Magazine asked: “Did Neil Postman Predict the Rise of Trump and Fake News?”

Fast forward thirty years. In February 2018, Washington Post Executive Editor Marty Baron referred to the prophetic quality of Postman’s book when he delivered the Reuters Memorial Lecture at the University of Oxford. Baron spoke of credibility and how it is measured in the current climate of disinformation, quoting Postman: “In the Age of Show Business, as Postman put it, credibility ‘does not refer to the past record of the teller for making statements that have survived the rigors of reality-testing. It refers only to the impression of sincerity, authenticity, vulnerability or attractiveness . . .’” The teller’s track record and the rigors of reality-testing appear more difficult than ever to measure as we wade through a deluge of digital, celebrity-driven content across multiple screens, 24/7.

As is often the case in a crisis, historians and journalists have begun to turn to history for context at this critical moment for journalism, pointing to information disorders that have come before. Harvard historian Jill Lepore’s new book These Truths: A History of the United States is a sweeping investigation into the American past that places truth itself at the center of the nation’s history. Craig Silverman, media editor at BuzzFeed News, who carved out a very early beat in the disinformation space, reminds us that the original print newspapers were partisan and that, while bias and untruths are not new, our society has never experienced a deluge of them at such scale. He also cautions that something far more damaging is at play today. “It’s different when you can be put off into a filter bubble and that is all you get. It’s different when misinformation is flowing very rapidly through all those sites.”

Columbia University’s Michael Schudson has long anticipated this predicament, asking of journalism, “what is going to take the place of what is being lost, and can the new array of news media report on our nation and our communities as well as—or better than—journalism has until now? More importantly, what should be done to shape this new landscape, to help assure that the essential elements of independent, original and credible news reporting are preserved?” He warns that what is under threat is independent reporting, particularly in the coverage of local affairs. Schudson has been sounding the alarm for decades, urging the news world to make wise choices at this pivotal moment. He believes these decisions will have far-reaching effects and, if the choices are sound, significantly beneficial ones. The industry appears to be listening: as this analysis illustrates, it is taking an all-hands-on-deck approach.

In the chaos, polarization has emerged and pushed society to question, “what is true?” In his talk at the Institute of Politics at the University of Chicago in February 2017, Silverman defines how he sees truth being assessed: “Whoever has the most people and activates them the most effectively determines what truth is.” Digital content is all about the number of viewers available to click. The difference from the age of the written word is that this digital content is so much easier to produce and the market for creating and consuming it is global in scale.

According to Silverman, the meta-moment is not disinformation. He argues that disinformation is but a symptom of what many now call the emergence of a new dominant economy: the Attention Economy. An article in The Atlantic, “Where Has Teen Car Culture Gone?,” suggests a new economy is emerging, one moving away from the long-dominant oil economy. The writer explains that today’s youth do not see the automobile as a path to freedom and a way to connect with the outside world, but instead yearn for devices that connect them to this new attention economy. With this enormous shift from an oil economy to an attention economy, disinformation appears as a byproduct.

Harvard Business School Professor Thales Teixeira defines the Attention Economy using the core principle that consumers have only a fixed amount of attention to offer. With the advent of the internet came an explosion of information and content producers, all of whom now must compete for the same fixed resource. Because the demand for attention far outstrips its supply, the price of attention “has skyrocketed in recent years,” Teixeira says. Many online content producers earn their revenue by hosting advertisements on their sites and gathering data, and this business model maximizes profit by capturing as much attention as possible. Emotional material that reinforces readers’ preconceived political beliefs and opinions thrives, while accurate and balanced news content does not usually maximize attention.


This new economy has led to an entirely new stable of workers from content makers to Search Engine Optimization strategists to data analytics miners. Silverman attempts to define the news sellers in this Attention Economy: “If you do well, you get a check every month. At the core of it, attention is their crop. They are trying to harvest as much attention as they can possibly get through Facebook and through other means to get that onto the page and the more page views they get, the more money they get. It doesn’t matter to them what it is. And it doesn’t matter if it’s true.” What has been revealed since the 2016 election is that there were many players involved in the sowing of disinformation, and some had exclusively economic motivations. Silverman reported that Macedonian content makers were running health sites before they realized they could make more money with American politics. As producers saw that pro-Trump and anti-Clinton material secured more clicks, they created content accordingly.

The Nieman Lab at Harvard reported in June 2018 that researchers at the Indiana University Observatory on Social Media “found that steep competition for the limited attention of users means that some ideas go viral despite their low quality — even when people prefer to share high-quality content.” This is troubling given how many Americans get their news through social media. As early as 2016, a survey by the Pew Research Center in association with the John S. and James L. Knight Foundation found that 62 percent of U.S. adults got news on social media, with 18 percent saying they did so often. That number has since grown. The Pew Research Center reported in September 2017 that 67 percent of American adults get at least some of their news from social media platforms such as Facebook, Twitter, and Snapchat. It also found that 55 percent of American adults over 50 years old were getting news on social media sites, up from 45 percent in 2016.

Trust in news has fallen dramatically, and the rise in polarizing content created to look like news is being driven by both profiteers and malevolent players. Add to this a president who undercuts the credibility of the press on a daily basis and who has called the press an “enemy of the people.” American journalism, already struggling with nearly nonexistent revenue models that have led to the decimation of quality local news, is on the defensive. The industry knows it has a problem. Christine Schmidt at the Nieman Lab published a roundup of industry initiatives trying to rebuild trust, pointing to “a Knight-Gallup report this year that found that the average media trust score (1-100) for American adults ranged from numbers in the 50s for Democrats to 18 for conservative Republicans. Less than half of those surveyed could identify a news source they believe is objective. This isn’t new: Even before Donald Trump started campaigning, Gallup’s annual poll has shown a decline from 55 percent of Americans saying they had a ‘great deal/fair amount’ of trust in mass media in 1997 to 32 percent in 2016.”


In this landscape analysis, it became apparent that a number of key advocates swooping in to save journalism are not corporations or platforms or the U.S. government, but rather foundations and philanthropists who fear the loss of a free press and the underpinning of a healthy society. They are focused on building up media literacy, applying platform pressure, and disrupting nefarious actors. With none of the authoritative players – the government and platforms who push the content – stepping up to solve the problem quickly enough, the onus has fallen on a collective effort by newsrooms, universities, and foundations to flag what is authentic and what is not and clean up the stream.

The Ford Foundation’s Lori McGlinchey wrote recently about the Ford Foundation’s intent to have an impact on this space. “Although propaganda and disinformation aren’t new, what is different in the digital environment today is the speed, reach, and sophistication of the use of information and communication technologies to manipulate perceptions, affect cognition, and influence behavior. These new tools and practices have negative consequences both for public trust in technology innovation and for the quality of public deliberation and decision-making. The global rise of such digital manipulation of public opinion is of great concern to civil society groups working to advance democracy and equality both on and offline. Misinformation and ‘fake news’ undermines a core tenet of democracy — an informed electorate.”

Along with Ford, the Knight Foundation is one of the largest funders of the information disorder initiatives in this landscape analysis. These funders are taking a multi-pronged approach to this pivotal moment. Many journalists, scholars, and foundations point to the decimation of local news as a major catalyst for the crumbling credibility of both journalism and society’s pillar institutions. David Beard, Dr. Claire Wardle, and Dan Kennedy, all former or current fellows at the Shorenstein Center, point to the loss of local newsrooms as a tipping point, with the void being filled by content makers pursuing financial or political gain rather than by journalists seeking truth. Lower barriers to entry for both journalists and content makers have further decentralized the information landscape and destabilized the public’s trust in the information it consumes.

In her paper for the Council of Europe, Dr. Claire Wardle defines information disorder and examines how the collapse of local journalism has enabled disinformation to take hold. Wardle’s broader focus and previous work lie in the spread of disinformation across the globe, while voices like Kennedy and Beard work to capture glimmers of progress in local journalism stateside and have pushed to rebuild a robust local pipeline of high-quality, thoughtful news reporting. Kennedy regularly measures the state of local news on his blog Media Nation, and his rather hopeful book, The Return of the Moguls: How Jeff Bezos and John Henry are Remaking Newspapers for the Twenty-First Century, speaks to what digital newspapers can become and their importance to democracy. Beard, for his part, uses Twitter and his Morning Mediawire to highlight solid local journalism, drawing on his experience as digital content editor of The Washington Post, editor of Boston.com, and executive editor of Public Radio International.

Robust efforts to clean up information disorder are underway but need further funding and amplification, and we have scanned the landscape to identify where they are already gaining traction. While there are more, these are the largest initiatives that have already begun to scale to grapple with information disorder and to prepare newsrooms for their imminent coverage of the U.S. midterms and beyond, to 2020.

We have identified four categories:

- Internal Efforts by Platforms

- Institutional Initiatives

- Upstart Initiatives Combatting Disinformation

- People and foundations funding the efforts to make a collective dent

Internal Platform Efforts

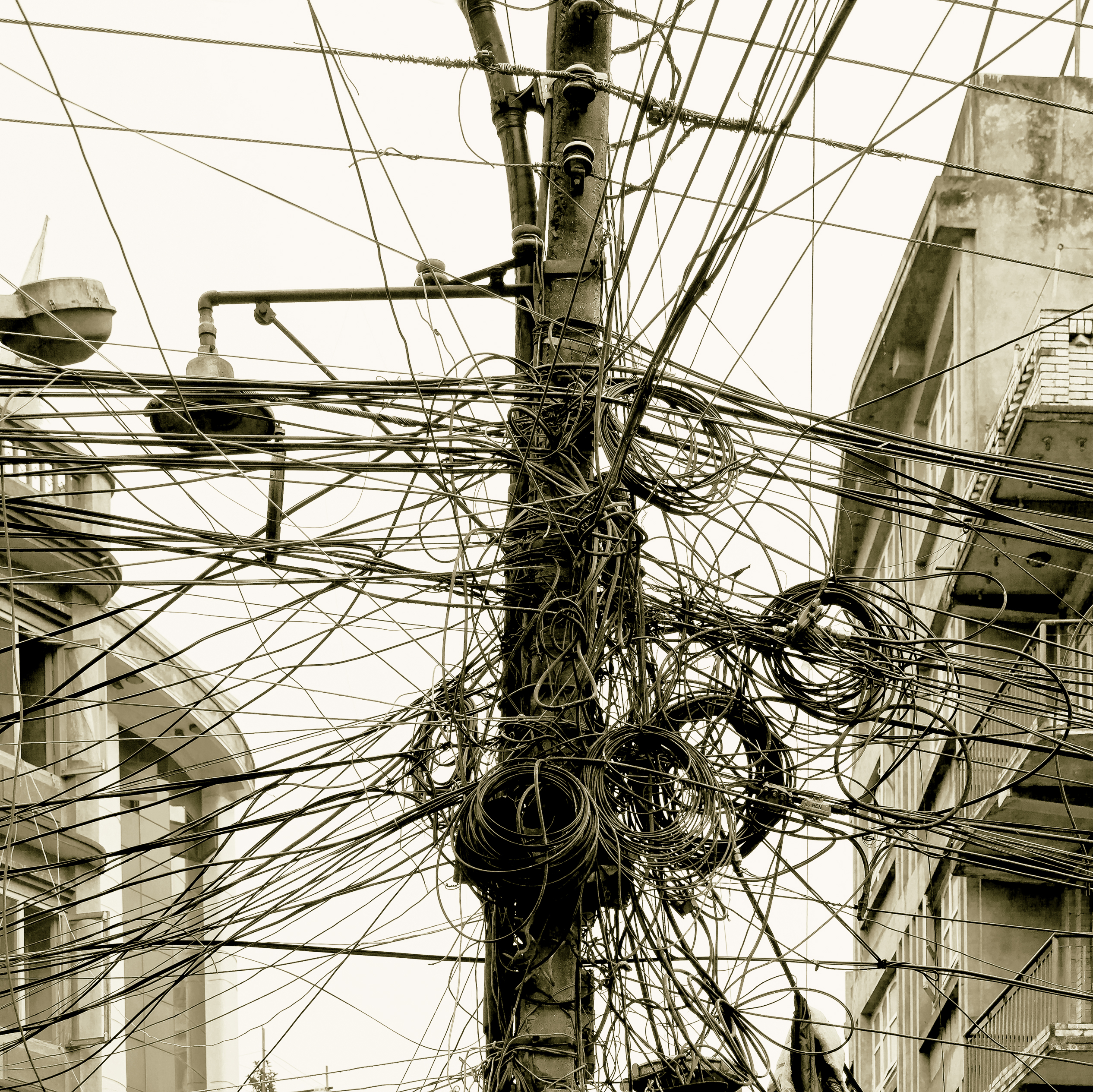

The Tyranny of Analytics: In the social media age, the measurability and commoditization of content, in the form of traffic, clicks, and likes, has tethered editorial strategy to analytics like never before. The emphasis on quantifiable metrics stacks the news cycle with stories most likely to generate the highest level of engagement possible, across as many platforms as possible. Things traveling too far, too fast, with too much emotional urgency, is exactly the point, but these are also the conditions that can create harm. – Data & Society Oxygen of Amplification study

Facebook and other tech giants have been under growing pressure to crack down on the spread of mis- and disinformation across their platforms. Alongside taking ad revenue away from local news and selling consumer data, one of the biggest dilemmas for these platforms has been hate speech. Until August 2018, when Facebook and others removed Alex Jones and Infowars from their platforms, users could say almost anything they wanted online without consequence, as long as they were not directly threatening individuals or groups based on certain protected identities such as race, ethnicity, national origin, religious affiliation, sexual orientation, and gender. These relatively loose policies have enabled a surge in controversial speech and incited deep debate over the arbiters of truth, the bounds of free speech, and how online communities could, or should, support productive civil discourse.

By comparison, there was little controversy when platforms began to clamp down on online content that promoted ISIS’s extremist ideology. But this is changing. Since the Unite the Right rally in Charlottesville in the summer of 2017, Mark Zuckerberg’s testimony before Congress in April 2018, the heated criticism of Jack Dorsey’s resistance to removing Alex Jones from Twitter, and countless publicized instances of online harassment, the American public has begun to demand more stringent control by platform companies. Since May, Twitter, Facebook, and Google have taken more steps than ever before to show their good faith in tackling this problem, from removing bots to purging fake and hate accounts. Almost every day, changes on these platforms make front-page news.


Platform responses to information disorder evolved at such a dramatic pace in the summer and early fall of 2018 that they are a moving target to capture in full. Here is what was happening in the lead-up to the removal of Alex Jones and Infowars from Facebook, Apple, and YouTube, as pervasive data leaks plagued Google, Facebook, and others.

After extreme public outcry over its role in the Russian disinformation campaign that targeted the 2016 U.S. presidential election and the Cambridge Analytica scandal over user privacy, Facebook declared its commitment to protecting the public from disinformation. Since then, the platform has experimented with a slew of different methods to confront the issue, provoking praise and ridicule with almost every move. Facebook continues to adjust its stance on the issue of disinformation, and recent developments mark a significant shift in the company’s perspective.

One of Facebook’s major approaches to curbing disinformation has involved directly managing the spread of false information. When content violates Facebook’s community standards, Facebook removes it from the platform, and when an account repeatedly violates these standards, the account itself is deleted. More commonly, Facebook limits the spread of disinformation by downranking content that has been judged false in its News Feed algorithm. These judgments are made not by Facebook but by third-party fact-checkers that partner with the platform and are certified through the nonpartisan International Fact-Checking Network. When these organizations determine that a story is false, Facebook ranks it significantly lower in users’ News Feeds, cutting the article’s future views by over 80 percent on average.

Until very recently, Facebook had been reluctant to remove disinformation from the platform just because it is false. Unwilling to accept responsibility for determining truthfulness or to risk accusations of censorship, the company opted to suppress the reach of misleading content rather than remove it entirely. However, after Mark Zuckerberg drew criticism for remarking that Facebook would not remove Holocaust deniers from the platform just because their information was wrong, Facebook shifted toward a more “proactive” approach to managing disinformation. In July, the platform pledged to remove any misleading information that has the potential to lead to actual violence. In early August, Mark Zuckerberg himself made the decision to ban Alex Jones, a well-known conspiracy theorist and founder of the far-right news site InfoWars, from Facebook, and several other platforms followed suit. Just two weeks later, Facebook removed over 650 pages and accounts linked to Russia and Iran for “inauthentic behavior” intended to influence world politics.

Facebook aims to fight false news by advancing media literacy and giving users more information about the content they encounter on the platform. Its partnerships with third-party fact-checkers have allowed Facebook to give users context about the origin and accuracy of that content. By working with fact-checkers from different regions rather than creating an internal fact-checking system, Facebook can outsource the responsibility of determining what is “truth” and rely on organizations that may have a better sense of how information will be interpreted in a given region. To give more context about material published on the platform, Facebook now requires greater transparency for political advertisements than it has in the past. Political ads must now be labeled as such, along with information about who funds them. Facebook has also added a tag to every publisher page where users can click to see all of the ads that publisher has created and placed on Facebook. Facebook has supplemented these efforts with a broader advertising campaign that warns users, “Fake news is not your friend.” The campaign aims to spread awareness of possible abuses of the platform, encourage media literacy, and rebuild users’ trust in the platform.

In addition to partnering with third-party fact-checkers, Facebook plans to combat false news by collaborating with researchers and granting them privileged access to the platform. In April 2018, Facebook launched an initiative that will give journalists and academics access to an archive of political advertisements, allowing them to study ads after they’ve run.

Under pressure from the industry and the public over the collapse of local news revenue models, Facebook has begun to focus on local news. It piloted a Digital Subscriptions Accelerator, a three-month partnership with the Lenfest Institute in Philadelphia, working with 14 metro news organizations to increase local subscriptions. On August 2, Facebook announced it was extending the project through the end of the year and adding $3.5 million in funding. The platform also announced the launch of its Facebook Membership Accelerator, another pilot project intended to help nonprofit news organizations and local publishers build membership models to grow their business. (Source: Shorenstein Center/Lenfest Institute Business Models For Local News: Field Scan)

Algorithms are another hot spot. Facebook says it aims to fight the spread of disinformation through adjustments to its News Feed algorithm. Social media algorithms have historically prioritized content that users are likely to engage with, creating “filter bubbles” biased toward information that users agree with and that evokes an emotional response. Because websites tend to make money based on how many clicks they receive and how many people view the advertisements they host, this traditional filtering system does not incentivize publishers to produce balanced, fact-focused content.


Political advertising on Facebook has become another source of public discontent. With Facebook not moving fast enough on political ads, ProPublica has pioneered a research project to address the issue of filter bubbles and “dark ads,” which are advertisements that can only be viewed by the ad’s producer and its target audience. ProPublica recently created a tool called the Facebook Political Ad Collector that allows users to input demographic information and view the political advertisements targeted to that demographic. ProPublica is also actively crowdsourcing ads to build a database of what different filter bubbles are being served as U.S. voters approach the 2018 midterms. While Facebook created its own tool to promote political advertising transparency in May, a series of news reports have revealed that political advertisers are exploiting loopholes in Facebook’s system to buy ads while remaining relatively anonymous.

Overall, Facebook wants to disrupt financial incentives that support disinformation ecosystems and encourage misleading content, and the company is building new products to help accomplish this. Facebook will leverage machine learning in the fight against false news, using it to more efficiently identify suspicious material published on the platform and then flag the content so that it can be reviewed by members of the Facebook team. This will allow Facebook to find misleading pieces more quickly, suppressing the spread of false news and limiting the amount of attention it receives on the platform. When Facebook limits a disinformation producer’s reach, it hurts their ad revenue, and thereby chips away at the financial incentives for conducting that kind of business. Facebook will additionally use machine learning to prevent fake accounts from being created and bar false news producers from running Facebook ads or taking advantage of Facebook’s monetization services. All of these actions use technology to financially disincentivize the production of disinformation.

Fans of Black Mirror may have found the next development uncomfortably close to home: in late August, Facebook announced it had begun to assign each of its users a reputation score, predicting their trustworthiness on a scale from zero to one. The methodology behind its scoring is unclear. The Washington Post reported that “the assessments of user reputations come as Silicon Valley, faced with Russian interference, fake news and ideological actors who abuse the company’s policies, is recalibrating its approach to risk — and is finding untested, algorithmically driven ways to understand who poses a threat.”

WhatsApp (Owned by Facebook)

Facebook has applied its concern about violence to WhatsApp, an encrypted messaging application owned by the company. Unlike Facebook, a semi-open platform where many users can see what is trending and spreading, WhatsApp is a private messaging app that is incredibly popular outside the U.S. In India, WhatsApp’s largest market with over 200 million users, chain messages circulated on the closed platform have led to 69 cases of violence. Of the reports we identified, 77 percent were traced back to disinformation. WhatsApp disinformation and rumors led to incidents of mob violence in India, including a dozen murders during a local election in Karnataka state. In response, WhatsApp recently adopted measures to limit the forwarding of messages and to label messages that have been forwarded. Beyond the platform itself, WhatsApp has also turned to traditional media: it published print advertisements warning users not to trust news that circulates on the app and could be used to provoke violence, and in late August it began a radio campaign encouraging users to verify content before forwarding it.

Fact-checking organizations have set up WhatsApp hotlines in Colombia, Mexico, Brazil, and South Africa, where users can forward questionable content to be debunked. The fact-checkers then return the correct story to the person who sent it and encourage that person to share it with their groups. Some also ask for proof that the person has shared it.


In addition to the changes it has made around forwarded messages, WhatsApp has begun giving nonprofits access to its Business API, allowing these organizations to send and receive messages at scale. A recent use case is Comprova, First Draft’s collaborative verification project around the 2018 Brazilian general election, which fielded and responded to questions from audience members using the API. Comprova, funded by Facebook and Google, organizes news organizations to work together on fact-checking and verification. It is led by Dr. Claire Wardle, executive director of First Draft and a recent fellow at the Shorenstein Center.

Google appears to be moving to address two major concerns in the news industry: business models for journalism and the disinformation crisis. One part of its effort funds journalism and verification, while the other builds tools to sell back to the news industry. Its approach is equal parts philanthropy and capitalism.

While Google tries to stay central to designing a vision for a new media landscape, with digital tools to support digital-first outlets, it has not escaped the platform controversy. Google suffered its own privacy breach, announcing in early October 2018 that it would shut down Google+ after The Wall Street Journal reported that the company had not disclosed that data from up to 500,000 Google+ users had been exposed since 2015. Similar to Facebook’s privacy problems involving external apps, Google’s breach stemmed from a bug in interfaces for third-party developers. Alongside announcing the end of Google+, the company quickly introduced new tools to give users more control over the data they share with apps and services that connect to Google products.


In March 2018, Google announced the $300 million Google News Initiative (GNI), which over the next three years is to include $10 million toward media literacy in U.S. high schools, fact-checking efforts in Google Search around health issues, and work to build a stronger future for journalism. While Google’s previous efforts in Europe funded newsrooms and media startups directly, the company has switched gears to support a variety of disinformation initiatives. The GNI is its effort to work with the news industry to help journalism thrive in the digital age, and it highlights a commitment to building products that address the news industry’s most urgent needs. As the world’s leading search engine, Google is responsible for serving up information people can trust. The company has made a real push to support journalists and news organizations around the world, even as it and Facebook retain most of the ad dollars that once flowed to news outlets. At the same time, it is actively trying to help build tools that support an industry-wide transition to digital. Recognizing how disinformation disrupts elections, it was an early funder of First Draft, CrossCheck, and ProPublica’s Electionland project. Google seems to be working upstream to mitigate the disinformation problem newsrooms face. The GNI website makes clear that platforms like Search and YouTube depend on a healthy ecosystem of publishers. That may be easier to achieve in Search than on YouTube, whose content challenges we also cover in this landscape analysis. The investment through GNI is intended to:

- Elevate and strengthen quality journalism

- Evolve business models to drive sustainable growth

- Empower news organizations through technological innovation

Google has led the platforms in philanthropic support for many disinformation efforts. Its Reverse Image Search is a leading verification tool for fact-checkers and journalists, and the company also offers hands-on training. When the Shorenstein Center convened more than 120 local and national journalists at the Harvard Kennedy School this past July, a Google engineer led a morning session on how to use Reverse Image Search to verify disinformation spreading online.

Other initiatives Google has launched with the industry include the open-source Accelerated Mobile Pages (AMP) Project to improve the mobile web; Flexible Sampling, to help with the discovery of news content on Google; fact-checking and verification training through First Draft; and the Digital News Initiative, which directly funded many European newsrooms. A related European effort is the Journalism Trust Initiative, created by Reporters Without Borders (RSF) as a publisher collaboration to combat disinformation. The collaboration includes Agence France-Presse, the European Broadcasting Union, and the Global Editors Network, and is also funded by corporations, foundations, and government agencies. These partners recently announced that they will work with the Google News Initiative to develop standards for transparency in media ownership, ensure compliance with ethical standards, and reveal revenue sources.

Google’s internal efforts to manage the spread of disinformation on its own search platform have focused on breaking news events. Breaking news is a vulnerable spot: bad actors have been shown to target Google during these events to surface inaccurate content. Google has trained its systems to recognize these events and shift search results toward more authoritative and trusted content. It has also teamed with the Poynter Institute, Stanford University, and the Local Media Association to launch MediaWise, a U.S. project designed to improve digital information literacy for youth. Two key efforts on Google’s own products include:

- Fact Check now available in Google Search and News around the world: Google first enabled publishers in a few countries to show a “Fact Check” tag on stories in Google News, working with Jigsaw and fact-checking organizations including PolitiFact and Snopes. The label identifies articles that include information corroborated by news publishers and fact-checking organizations. Google News determines whether an article might contain fact checks in part by looking for schema.org ClaimReview markup, and it also looks for sites that follow commonly accepted criteria for fact checks. Publishers who create fact checks and want them to appear with the “Fact Check” tag should add that markup to their fact-checked articles.

- Algorithm changes to punish low-quality, fake news, and fringe content: Google is changing the way its core search engine works to curb the spread of hate speech and disinformation. It has added new metrics to its ranking systems that are meant to keep false information out of the top results for particular search terms.
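
For publishers, the ClaimReview markup described above is typically embedded in the article page as JSON-LD. The Python sketch below builds such an object. The property names (`claimReviewed`, `itemReviewed`, `reviewRating`, and so on) come from the schema.org ClaimReview, Claim, and Rating types, while the function names and all sample values are hypothetical. It is a minimal illustration, not Google’s own tooling, and Google’s current structured-data documentation should be checked for which properties it requires.

```python
import json


def claim_review_jsonld(url, claim, claimant, publisher, date_published,
                        rating_value, best_rating=5, worst_rating=1,
                        verdict=""):
    """Return a schema.org ClaimReview object serialized as JSON-LD.

    Argument names are hypothetical; the dictionary keys follow the
    schema.org ClaimReview, Claim, Organization, Person, and Rating types.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "ClaimReview",
        "url": url,                      # page where the fact check lives
        "claimReviewed": claim,          # short summary of the claim checked
        "datePublished": date_published,
        "author": {"@type": "Organization", "name": publisher},
        "itemReviewed": {
            "@type": "Claim",
            "author": {"@type": "Person", "name": claimant},
        },
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating_value,
            "bestRating": best_rating,
            "worstRating": worst_rating,
            "alternateName": verdict,    # human-readable verdict, e.g. "False"
        },
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld):
    """Wrap JSON-LD in the <script> element a publisher places in the page."""
    return '<script type="application/ld+json">\n' + jsonld + "\n</script>"


if __name__ == "__main__":
    markup = claim_review_jsonld(
        url="https://example.com/fact-checks/hypothetical-claim",
        claim="A hypothetical claim used only for illustration.",
        claimant="Hypothetical Speaker",
        publisher="Example Fact-Check Desk",
        date_published="2018-10-01",
        rating_value=1,
        verdict="False",
    )
    print(as_script_tag(markup))
```

Keeping the verdict in `alternateName` alongside a numeric rating lets a reader-facing label such as “False” travel with the markup even when rating scales differ between fact-checkers.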

As noted earlier, Google has been rapidly creating tools with commercial intent for newsrooms, in parallel with its philanthropic funding. Given the tenuous relationship between journalism and the platforms, this could be good news for journalism if it reflects a belief at Google that news revenue models will recover enough for newsrooms to buy more tools. Newsrooms have lost most of their advertising revenue to the platforms, and being seen to spread disinformation while taking 90 percent of the advertising revenue that once went to publishers has not made the two easy bedfellows. Modern newsrooms will need state-of-the-art digital tools to improve revenue and reach, and the platforms are in a good position to help build them. It is a fine line to walk, but Google is walking it. Its tagline makes its intent clear: “To help journalism thrive in a digital age.” Internal Google efforts to build products that help newsrooms modernize and find revenue include:

- Reverse Image Search, which lets journalists check where an image circulating on social media has appeared, making it very useful for verifying disinformation.

- Advanced machine learning that automatically surfaces key insights about revenue opportunities (generating recommendations worth over $300 million in additional revenue), along with support for faster, better ad experiences on the mobile web through AMP and native ads.

- Subscribe with Google, which lets people easily subscribe to news outlets, helping publishers engage readers across Google and the web and easing the subscription process so that more readers reach publishers’ journalism as quickly as possible.

- The News Consumer Insights dashboard, which uses audience metrics to gauge which readers are likely to become paid subscribers, with segments such as “casual reader,” “loyal reader,” “brand lovers,” and “subscribers.” At the St. Louis Post-Dispatch, this project led to a 150 percent increase in pageviews to its Subscribe pages and a month-over-month tripling of new digital subscription purchases.

- With a few selected publishers (Hearst, La Repubblica, and the Washington Post), Google is testing a “propensity signal” that uses machine learning to understand which readers are willing to pay.

- Outline, an open-source tool from Jigsaw that lets news organizations provide journalists with more secure access to the internet. Outline makes it easy for news organizations to set up their own VPN on a private server – no tech savviness required.

In 2017, Google removed more than 200 publishers (out of 2 million) from its platform that it judged to be fake news publishers.

YouTube (owned by Google)

YouTube was an early player in the fight against disinformation: in 2017 it released a tool known as the Redirect Method, which served anti-ISIS content to users searching for ISIS-related videos in an effort to curb interest in extremist ideology. The tool, developed by Google’s think tank Jigsaw, was flagged by some as a potential answer to the racist and alt-right ideologies behind the Unite the Right rally in Charlottesville in August 2017. But as Issie Lapowsky wrote in Wired shortly after the events in Charlottesville, “these are, after all, companies, not governments, meaning they’re free to police speech in whatever way they deem appropriate.”
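
The basic mechanism behind the Redirect Method, matching what a user searches for against a watch list and surfacing curated counter-content, can be sketched in a few lines of Python. This is a simplified, hypothetical illustration: the phrases, playlist URLs, and function names are placeholders, and the real deployment’s curated keyword sets and delivery mechanics are not reproduced here.

```python
from typing import Optional

# Hypothetical placeholder watch list mapping search phrases to curated
# counter-narrative playlists. Real keyword lists are not reproduced here.
REDIRECTS = {
    "example extremist slogan": "https://example.com/playlists/counter-narratives",
    "example recruiter name": "https://example.com/playlists/defector-testimony",
}


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivial variants still match."""
    return " ".join(query.lower().split())


def redirect_for(query: str) -> Optional[str]:
    """Return a curated playlist URL if the query contains a watched phrase."""
    q = normalize(query)
    for phrase, playlist in REDIRECTS.items():
        if phrase in q:
            return playlist
    return None  # ordinary queries are left alone


if __name__ == "__main__":
    print(redirect_for("Example  Extremist SLOGAN videos"))
    print(redirect_for("cat videos"))
```

The design choice worth noting is that nothing is blocked: matching queries simply gain an additional, curated result, which is what distinguished the approach from takedowns.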

Google has also moved to fund disinformation efforts on YouTube, committing $10 million through Google.org to a global media literacy initiative. It will spend $3 million in the U.S., again through MediaWise, as with its Google Search effort. The U.S.-based partnership among the Poynter Institute, the Stanford History Education Group, and the Local Media Association will focus on media literacy to help millions of young people in the U.S. discern fact from fiction online, through classroom education and video, with help from YouTube stars popular with teens, such as John Green. YouTube has also been investing in external fact-checking and journalism endeavors, working on its algorithm, and adding a fact-checking flag to its news and search results.

YouTube announced at SXSW this past year that it would direct viewers of conspiracy videos to “fact-based” Wikipedia pages. Critics, however, point out that both Wikipedia and YouTube host user-generated, crowd-sourced content, and they question the impact of the pairing. Like its parent company, YouTube is aware that bad actors often target breaking news on Google platforms, increasing the likelihood that people are exposed to inaccurate content. In response, it is training its systems to recognize these events and shift their signals toward more authoritative content, highlighting relevant content from verified news sources in a “Top News” shelf.

Still, YouTube has long asserted that it is not a media company and therefore isn’t liable for falsehoods on its platform. This ambiguous self-definition lets platforms argue that they bear less responsibility for user content. The company did commit to adding 10,000 people to its moderation team this year, a move that may bring some results. Problematic algorithms and the lack of a strong editorial voice have allowed disinformation to flourish on the platform, as when a video accusing Parkland shooting survivor David Hogg of being a crisis actor trended.

Twitter

Although Twitter has openly declared that it is “not the arbiter of truth,” most notably in its initial refusal to ban Alex Jones and Infowars along with the other tech giants, the platform has acknowledged that it acts as an arbiter of popularity, which bots can amplify. It therefore moved dramatically to address the issue last May and June, when it suspended more than 70 million fake accounts. But there was more to be done. On July 12, Twitter cut total follower counts across the platform by about six percent. While many celebrities saw their follower counts plummet, “no change” became a viral humblebrag as those who kept their followings noted that they had survived the purge, a sign that their followers had long been authentic. The Washington Post also reported that Twitter investigates bots and other fake accounts through an internal project known as “Operation Megaphone,” a process in which Twitter buys suspicious accounts and then investigates their connections.

Twitter’s move to remove tens of millions of suspicious accounts came after persistent pressure from users to address what is seen as a pervasive form of social media fraud. Users have long been able to buy followers, and revelations about that market prompted investigations in at least two states and calls in Congress for the Federal Trade Commission to intervene. The market for fake followers has also drawn criticism from Twitter advertisers, most prominently Unilever, which relies on social media influencer endorsements on the platform. In June, Unilever announced that it would no longer pay for any advertising on accounts whose followers had been purchased.

Yet Twitter’s cleanup did not come without repercussions. In late July, President Donald Trump accused Twitter of “shadow banning” prominent Republicans, and Republican congressman Devin Nunes went so far as to say Congress would investigate the claim. Twitter reacted swiftly with a cordial but direct blog post by its legal and product leads: “We do not shadow ban. You are always able to see the tweets from accounts you follow (although you may have to do more work to find them, like go directly to their profile). And we certainly don’t shadowban based on political viewpoints or ideology.” The post also addressed complaints that some accounts were not “auto-suggested” even when users searched for them by name, saying the issue had been resolved and had affected “hundreds of thousands of accounts,” not only those holding certain ideologies. Twitter further explained how some bad-faith actors game the system, noting that some communities try to boost each other’s presence on the platform through coordinated engagement.

TechCrunch reported that when Twitter identifies an account it deems suspicious, it “challenges” that account, giving legitimate users an opportunity to prove they are real people by confirming a phone number. Accounts that fail this test are removed, while accounts that pass are reinstated.
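
The challenge flow TechCrunch described can be modeled as a small state machine: a flagged account moves into a challenged state, and the outcome of the phone-number check decides whether it is reinstated or removed. The Python sketch below uses hypothetical class and state names and deliberately leaves out how Twitter decides an account is suspicious, which the reporting does not describe.

```python
from enum import Enum


class Status(Enum):
    ACTIVE = "active"
    CHALLENGED = "challenged"
    REINSTATED = "reinstated"
    REMOVED = "removed"


class Account:
    """Hypothetical account record tracking a simplified challenge lifecycle."""

    def __init__(self, handle: str):
        self.handle = handle
        self.status = Status.ACTIVE

    def flag_suspicious(self) -> None:
        # Only accounts currently in good standing move into a challenge.
        if self.status in (Status.ACTIVE, Status.REINSTATED):
            self.status = Status.CHALLENGED

    def resolve_challenge(self, phone_confirmed: bool) -> None:
        # Passing (confirming a phone number) reinstates; failing removes.
        if self.status is not Status.CHALLENGED:
            raise ValueError(f"{self.handle} is not under challenge")
        self.status = Status.REINSTATED if phone_confirmed else Status.REMOVED


if __name__ == "__main__":
    person = Account("@hypothetical_person")
    person.flag_suspicious()
    person.resolve_challenge(phone_confirmed=True)
    print(person.handle, person.status.value)
```

Making the challenge an explicit intermediate state, rather than removing flagged accounts outright, is what gives legitimate users a path back.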

In March 2018, in another effort to curb disinformation, Twitter began livestreaming local news reports during crises in a window next to its timeline. Twitter’s General Manager of Livestream, Kayvon Beykpour, told BuzzFeed News in an emailed statement earlier this year that the platform wanted to improve the credibility of its breaking news coverage by pairing with local news stations. BuzzFeed News reported that, “when people click into live videos appearing on Twitter’s home timeline, they’ll be brought to a custom timeline that places algorithmically selected tweets about the news event next to it. When live video airs on Twitter, conversation on the platform about the streamed event increases. Algorithms are notoriously vulnerable to exploitation and misinformation. But pairing algorithmically selected tweets with news stations’ live video could help mitigate these issues by offering Twitter users an authoritative news source alongside the tweets.”

The Washington Post reported in late August that Twitter also judges a user’s credibility in part by the behavior of others in that person’s network, treating it as a risk factor when deciding whether the person’s tweets should be spread.

Yet Twitter has been more reluctant than its peers to moderate content posted by genuine accounts. Jack Dorsey, Twitter’s CEO, faced major backlash after initially refusing to join Apple, YouTube, and virtually all other major platforms in banning Alex Jones, even though Jones had used Twitter for years to promote conspiracy theories and alt-right content. In early September, Twitter finally banned Jones’s account, but only a day after Jones’s confrontational appearance at the congressional hearing where Dorsey testified.

As Facebook moved this past year to deemphasize news content in its News Feed, Twitter seems to have stepped fully into the role of a media and news company. Confusion remains over whether platforms like Twitter are newswires or media entertainment companies.

Institutional and Upstart Initiatives

While the term “fake news” has entered the everyday vernacular, leading thinkers, venerable institutions, and upstarts alike see it as a limiting and narrow phrase and have begun to shape the space using the terms “disinformation” and “information disorder” instead. Methods to combat information disorder emerged from many early conferences, where key stakeholders in journalism and early disinformation thinkers hashed out the problem and tried to create some semblance of a game plan. Regular voices included Shorenstein Center Fellow Dr. Claire Wardle, Data & Society’s Dr. Joan Donovan, and BuzzFeed News’ Craig Silverman. Wardle offers a useful metaphor:

It’s as though we are all home for the holidays and someone pulls out a 50,000 piece jigsaw puzzle. Everyone has gathered round, but our crazy uncle has lost the box cover. So far, we’ve identified a few of the most striking pieces, but we still haven’t even found the four corner pieces, let alone the edges. No individual, no one conference, no one platform, no one research center, think tank, or non-profit, no one government or company can be responsible for ‘solving’ this complex and very wicked problem. – Dr. Claire Wardle, who led the creation of the Information Disorder Project at the Harvard Kennedy School’s Shorenstein Center

These early initiatives have begun to serve as tent poles, helping to define an early map of where we are headed. Ethan Zuckerman, the longtime leader of the Center for Civic Media at the MIT Media Lab and a critical thinker on the spread of disinformation, told a room full of leading academics, journalists, and funders at a recent conference that “research takes time.” Mark Zuckerberg himself told Congress in his testimony that artificial intelligence is a few years away from being able to find this hate speech and disinformation.

The urgency was palpable during the two-day conference in June, as academics, researchers, fact-checkers, newsrooms, and foundations looked for any means to tackle an information disorder threat that some fear could topple democracy. Harvard’s new president, Larry Bacow, opened the event and encouraged attendees to consider how to teach and strengthen critical thinking through media literacy, as well as how to clear the content deluge that has created the information disorder plaguing society.

This is the beginning of a landscape analysis to map the early initiatives and longer-term research efforts scaling to confront disinformation in the U.S.

Institutional Initiatives

The Information Disorder Project at The Shorenstein Center at the Harvard Kennedy School

Inspired by the early and leading research of Dr. Claire Wardle, who urged the use of the term “disinformation,” the Information Disorder Project (ID Project) has set out to curtail the spread of mis- and disinformation online. Wardle was a founding member of First Draft and, as a fellow at the Shorenstein Center, brought her vision of a world where news organizations and technology companies effectively mitigate the influence of mis- and disinformation on society.

The ID Project is an initiative to combat the spread of disinformation in media through research, training, and building newsrooms’ capacity to perform thorough verification on their own. The project has three objectives: researching how disinformation spreads and is amplified, training domestic and international journalists and fact-checkers, and developing the Information Disorder Lab.

The Information Disorder Lab (IDLab) is designed to help identify, assess, and confront mis- and disinformation on the internet in real time through weekly briefings about suspicious content and research reports on how disinformation is spreading, using two tools it has built, IssueTracker and IDTracker. These tools identify, collect, and analyze news content from social media and provide an internal content management system that allows IDLab research staff to collect, catalog, and code instances of information disorder. This database of coded content can be analyzed to identify patterns and trends and to track engagement on different kinds of content. The pilot phase began in May 2018.
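
To illustrate how a database of coded content supports pattern and engagement analysis, the sketch below assumes a hypothetical record schema (URL, platform, analyst-assigned codes, and an engagement count) and aggregates it by code. The field names and code labels are placeholders, not IDLab’s actual schema or taxonomy.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class CodedItem:
    """One cataloged instance of information disorder (hypothetical schema)."""
    url: str
    platform: str
    codes: List[str] = field(default_factory=list)  # analyst-assigned labels
    engagement: int = 0  # e.g. shares + reactions + comments


def items_per_code(items: Iterable[CodedItem]) -> Counter:
    """How many cataloged items carry each code."""
    counts: Counter = Counter()
    for item in items:
        counts.update(set(item.codes))  # count each code once per item
    return counts


def engagement_per_code(items: Iterable[CodedItem]) -> Dict[str, int]:
    """Total engagement on the items carrying each code."""
    totals: Dict[str, int] = {}
    for item in items:
        for code in set(item.codes):
            totals[code] = totals.get(code, 0) + item.engagement
    return totals


if __name__ == "__main__":
    catalog = [
        CodedItem("https://example.com/a", "twitter", ["false context"], 100),
        CodedItem("https://example.com/b", "facebook",
                  ["false context", "fabricated content"], 50),
    ]
    print(items_per_code(catalog))
    print(engagement_per_code(catalog))
```

Comparing the two aggregates is the point: a code that appears rarely but attracts heavy engagement may deserve more attention than a common, low-engagement one.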

Its mission is to produce academic research to better understand the production and spread of disinformation; create a living laboratory of engineers, academics, journalists, and digital experts doing research in order to design and test strategies for the mitigation of mis- and disinformation; and develop and disseminate research-based resources to educate journalists, journalism schools, industry, and other academics about how to identify and mitigate the spread of mis- and disinformation.

The Shorenstein Center ID Project is funded by the John S. and James L. Knight Foundation, Craig Newmark Philanthropies, the Ford Foundation, and the U.S. Open Society Foundation. The ID Project’s international efforts and research are funded by grants and gifts from the Gates Foundation, Google, and Facebook.

The Truthiness Collaboration at USC’s Annenberg School for Communication and Journalism

The Truthiness Collaboration comes out of the Annenberg Innovation Lab at the University of Southern California (USC) Annenberg School for Communication and Journalism. Its mission is to advance research and engagement around misinformation, disinformation, propaganda, and other discourse fueled by our evolving media and technology ecosystems.

The “AnnLab” is a hipster community with research heft, made up of artists, scientists, and humanists collaborating to understand the digital transformation. The Truthiness Collaboration is one of five projects coming out of the AnnLab, which is run by Colin Miles MacLay, who spent more than a decade helping to build and lead the Berkman Center at Harvard University and the Digital Initiative at Harvard Business School.

The Truthiness Collaboration says it is exploring the abundant and fractious networked media environment where public and private life collide, looking at the risks for individuals, institutions, and democracy as boundaries blur. Areas it is investigating and categories in which it is developing research include:

- The complex systems of the old and new forces in media

- Priorities that guide social media platforms, which Tarleton Gillespie calls “Custodians of the Internet”

- Disinformation that is out of control and out of context with help from algorithmic decisions

- The limitations of practical interventions – and if there is no silver bullet, what does silver buckshot look like?

- Research-Industry Relationships and Data Diversity

- Policy Interventions and Considerations

- Agenda and Infrastructure for Discovery and Engagement

To bring industry research together, USC held a leading disinformation conference at Harvard Law School in early June 2018 called Information Disorder, New Media Ecosystems, and Democracy: From Dysfunction to Resilience, sponsored by the Ford Foundation, the Knight Foundation, and Craig Newmark Philanthropies.

Concerns at the Annenberg Lab include research relationships with the tech industry and data diversity; a lag in U.S. public policy on the kind of regulation now seen in Europe, and the question of what will work within America’s free-spirited entrepreneurialism; and cross-cutting research, along with the tools needed to capture it properly given the scale of the challenge.

The NewsCo/Lab at the Walter Cronkite School of Journalism and Mass Communication, Arizona State University

The NewsCo/Lab at the Walter Cronkite School of Journalism and Mass Communication at Arizona State University was created to explore how news works in a digital age. Dan Gillmor, a leading technology and journalism writer, director of the Knight Center for Digital Media Entrepreneurship at the Cronkite School, and a Harvard Berkman Klein fellow, and Dr. Kristy Roschke, managing director of the NewsCo/Lab, have built this initiative to help newsrooms find new ways of using digital platforms to engage with the communities they cover and build trust.

The NewsCo/Lab is focused on the demand side of disinformation. The initiative aims to boost media literacy and critical thinking about disinformation, and it seeks to collaborate with newsrooms to increase transparency and community involvement in the news creation process. Its launch project is a collaboration with McClatchy newsrooms and their readers to bring more transparency and community engagement to the reporting process, with the goal of helping people better understand how news becomes news and how journalists arrive at a trustworthy, credible result.

Roschke believes that journalists play a major role in helping people develop news literacy. “Sometimes reporters take the reporting process as common sense and journalists have long believed that their trust is earned and understood,” she said. “However, that is not the world we are living in so we believe journalists have a responsibility to explain more of what they are doing and how they do it.” In its experiment with McClatchy, the NewsCo/Lab will explore how journalists can devote time and effort to involving the communities they serve in their work. The lab will help journalists make this effort, measure what is working, and document it for the industry. The first cities where it will partner with McClatchy are Fresno, Calif.; Kansas City, Mo.; and Macon, Ga. It is experimenting with new technology such as Hearken and Spaceship Media (deep dialogue journalism) being developed by other information disorder initiatives such as the News Integrity Initiative. These tools help each city connect members of its newsroom with its community, such as educators and libraries, to create a local working group that strengthens news literacy and trust. The goal is transparency in how reporters create the story. Roschke says McClatchy offers a great partnership given its diverse range of cities, populations, and demographics. Scale, she says, is a big part of what they hope to achieve.

The NewsCo/Lab, which accepts funding from both Google and Facebook, is also working with platforms to examine how newsrooms can scale corrections and to experiment with methods that help a correction spread far and fast. Because so many other efforts tackle disinformation directly, the lab is focused on the end user, shoring up resources to help media consumers deal with disinformation. It is also looking at best practices in the journalism field and experimenting with adding news literacy to fields outside journalism. For example, science and health information online is rife with misinformation, which has led to a pilot in which science professors will add media literacy to a few courses at Arizona State using drop-in journalism modules. In the spirit of a multi-pronged approach to this crisis, such work brings people outside journalism into the effort, advancing news literacy in our society from many touch points. In July 2018, Roschke and her team published a survey on local news and opinion conducted by the NewsCo/Lab and Google.

Berkman Klein Center for Internet and Society at Harvard University

The Berkman Klein Center, housed at Harvard Law School, centers its efforts around the question, “How can the internet elicit the best from its users?” The research center explores the limits of our understanding of cyberspace, studying its “development, norms, dynamics, and standards” in an attempt to evaluate “the need or lack thereof for laws and sanctions.” With a focus on internet censorship as well as the internet’s impact on democracy, the Berkman Klein Center is positioned as an important voice in the battle against disinformation. This work has been funded by the Ford Foundation, The AI Fund, and the Open Society Foundation.

The Media Cloud team at the Berkman Klein Center has focused primarily on disinformation in American politics, although the team includes members who have been mapping online discourse in Russia, Iran, and across the Middle East for a decade. The team’s most recent work analyzes several million stories related to American national politics from April 2015 through a few months before the present, adding stories on a rolling basis. The effort uses hyperlinks, tweeting patterns, aggregate Facebook sharing data, and text analysis to trace and map the architecture of attention and accreditation in American political communication. Methodologically, the project is distinct in two ways: first, it integrates several platforms into the analysis, including not only the open web and Twitter but also YouTube, Reddit, and, to a more limited extent, television; second, it combines data science techniques for analyzing very large data sets (network analysis, text mining, and natural language processing) with qualitative social science that assesses the political and social context of the observations. The team’s primary finding is the highly asymmetric nature of polarization in American politics, including its documentation that the right wing of the American media ecosystem is more susceptible to disinformation campaigns, foreign and domestic, than the rest of the ecosystem. Its distinctive current argument is that the greatest source of disinformation in American politics is much more pedestrian and mainstream, namely Fox News and talk radio, than the more exotic, technology-centric explanations favored by most researchers in the field.
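
The hyperlink side of this kind of analysis starts by treating links between outlets as a directed graph. The minimal Python sketch below counts how many distinct outlets link to each target, a crude in-degree measure of attention. It is an illustration under that simplifying assumption, with hypothetical outlet names, not Media Cloud’s actual method, which combines links with social sharing data, text analysis, and qualitative work.

```python
from collections import Counter
from typing import Iterable, Tuple


def inlink_attention(links: Iterable[Tuple[str, str]]) -> Counter:
    """Count distinct linking outlets per target outlet (in-degree).

    Each link is a (source_outlet, target_outlet) pair extracted from a
    story's hyperlinks. Self-links are ignored and repeated links between
    the same pair are counted once, so one prolific outlet cannot dominate.
    """
    seen = set()
    counts: Counter = Counter()
    for source, target in links:
        if source == target or (source, target) in seen:
            continue
        seen.add((source, target))
        counts[target] += 1
    return counts


if __name__ == "__main__":
    sample = [("outlet_a", "outlet_b"), ("outlet_a", "outlet_b"),
              ("outlet_c", "outlet_b"), ("outlet_b", "outlet_b"),
              ("outlet_b", "outlet_c")]
    print(inlink_attention(sample).most_common())
```

Counting distinct linking outlets rather than raw links is one way to approximate accreditation by many voices rather than repetition by a few.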

Media Cloud at the MIT Media Lab

Powering the International Hate Observatory and The Provenance Project

Ethan Zuckerman and his colleagues have spent a decade building Media Cloud at Harvard’s Berkman Klein Center and the MIT Media Lab. The project is not focused on disinformation explicitly, but it is a resource for anyone studying the spread and influence of media, including the mapping of online discourse in Russia, Iran, and across the Middle East. Media Cloud functions like a free, open-source LexisNexis designed to let researchers study how news stories begin and how they are framed differently across publications. Zuckerman’s team at the Media Lab used Media Cloud to understand the origins and spread of media coverage around Trayvon Martin’s death. A team led by Yochai Benkler at Berkman used the tool to write papers and a book unpacking anti-immigrant narratives in the 2016 U.S. elections. Media Cloud currently gives researchers access to as much as eight years of data from 50,000 newspapers, blogs, and open web resources, and is expanding to include content from Reddit, YouTube, and other social media platforms.

The MIT Media Lab is launching two new initiatives that build on existing Media Cloud work: The International Hate Observatory and the News Provenance Project.

The International Hate Observatory is focused on discovering the origins of online hate. Zuckerman notes that by the time people are marching in Charlottesville, hate speech has been online for months or years. Working with the Institute for Strategic Dialogue (ISD), an anti-extremism think tank based in London, and Data & Society, a leading internet research group in New York City, the Media Cloud team is building a rich database for researchers studying online hate. They plan to release a set of case studies that examine the spread of extremist content from online forums, through its amplification in social networks, to its normalization in mainstream media, as well as guidance for other researchers who want to use the tools to track specific online discourses. This work overlaps with the IDTracker and IssueTracker tools currently being developed by the Harvard Shorenstein Center’s IDLab.

The other project is the Provenance Project. Using the same systems, Zuckerman and his team want to look at the collection of stories around a breaking news event to find out who first reported it. He cites the case of Dr. Larry Nassar and his sexual abuse of members of the U.S. women’s gymnastics team. Stories appeared online in outlets from the New York Times to HuffPost, and some mentioned that the Nassar story was broken by the Indianapolis Star. The problem is that this credit does little for IndyStar.com in revenue terms: most of the revenue from clicks goes to the larger outlets with greater reach that amplified the story. The Provenance Project wants to scan the news and identify which stories have a clear original investigative author, in the hope that journalists and their outlets could be rewarded through revenue sharing in those cases.
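
The Provenance Project’s goal of finding who broke a story can be approximated with two signals: explicit credit from other outlets and the earliest publication time. The Python sketch below combines them, preferring attribution and falling back to the earliest timestamp. The `Story` fields, the function names, and this ordering of signals are assumptions for illustration, not the project’s design, and the sample outlets are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class Story:
    outlet: str
    published: datetime
    # Outlets this story says broke the news (hypothetical field).
    credited: List[str] = field(default_factory=list)


def earliest_outlet(stories: List[Story]) -> Optional[str]:
    """Outlet with the earliest publication timestamp, if any."""
    if not stories:
        return None
    return min(stories, key=lambda s: s.published).outlet


def most_credited(stories: List[Story]) -> Optional[str]:
    """Outlet most often credited by other outlets (self-credit ignored)."""
    counts = Counter(name for s in stories for name in s.credited
                     if name != s.outlet)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def likely_original(stories: List[Story]) -> Optional[str]:
    # Prefer explicit attribution; fall back to the earliest timestamp.
    return most_credited(stories) or earliest_outlet(stories)


if __name__ == "__main__":
    coverage = [
        Story("Outlet B", datetime(2018, 1, 2), ["Outlet A"]),
        Story("Outlet A", datetime(2016, 8, 4)),
        Story("Outlet C", datetime(2018, 1, 3), ["Outlet A"]),
    ]
    print(likely_original(coverage))
```

Checking attribution first mirrors the example above, in which later stories credited the outlet that broke the news even though they reached far larger audiences.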

The Civic Media group, run by Zuckerman, focuses its research on the use of media as a tool for social change, the role of technology in international development, and the use of new media technologies by activists. Media Cloud has been supported for over a decade by the Gates Foundation, the Robert Wood Johnson Foundation, the Ford Foundation, the MacArthur Foundation, the Open Society Foundation, and the Knight Foundation, as well as the consortium of funders who support the MIT Media Lab.

Indiana University’s Observatory on Social Media

If the word “disinformation” has taken the helm of this space, observatories appear to be the metaphor of choice. Indiana University has created the Observatory on Social Media where it is building tools that allow people to reflect on their own biases and “protect themselves from outside influences designed to exploit them.” The Observatory on Social Media is a collaboration between the Indiana University Network Science Institute and the Center for Complex Networks and Systems Research.

The project studies the way content and information move through social media, with a focus on meme culture. The Observatory on Social Media defines a meme as “an idea, piece of information, or behavior that is passed from one person to another by imitation.” Acknowledging that this definition is broader than the common conception of internet memes – images superimposed with text – the Observatory tools also consider a meme to be a Twitter #hashtag or, coming soon, a @username.

In an effort to make large social data sets more accessible to social scientists, reporters, and the general public, the Observatory has released a series of tools that allow people to interact with data derived from its meme diffusion analytics. Different tools allow users to observe how a bot-like Twitter user behaves, compare the trends of different memes, analyze who is engaging with a meme and how different memes are related, map out where people are discussing certain memes, and generate movies portraying how conversations about a certain meme change over time. Hoaxy, one of the Observatory's most popular tools, lets users visualize the spread of claims and fact-checks and analyze how this information has moved around the internet.

Duke Tech and Check Cooperative

The Duke Tech and Check Cooperative, sometimes labeled the "Trust & News Initiative," aspires to stop the spread of falsehoods online. It was established in 2017 as part of Duke University's Reporters' Lab, which explores new forms of journalism, including fact-checking and structured journalism. The initiative hopes that by flagging inaccurate information in online articles, it can do its part to restore trust in journalism. Rather than focusing on social media, the Trust & News Initiative examines content already propagating through mainstream media outlets.

Bill Adair, the leader of the Tech and Check Cooperative, describes the endeavor as “largely a technology and journalism project…not a social psychology project.” With this focus, the project will mine transcripts for claims that can be fact-checked, create pop-up fact checks for information on the internet, and build an online talking-point tracker. In addition to launching its own tools to help journalists assess the accuracy of information online, the Duke Tech and Check Cooperative will facilitate collaboration and dialogue around information pollution by hosting meetups, webinars, and annual conferences. It will monitor different automation projects that are focused on the spread of disinformation and add to the “Share the Facts” database with the ultimate goal of creating a real-time automated fact checker.

Trusting News at the Missouri School of Journalism

Trusting News at the Missouri School of Journalism wants to give journalists tools to establish and maintain their credibility in the eyes of the public. Funded by eBay founder Pierre Omidyar, the Democracy Fund, and the Knight Foundation, the research project is part of the Reynolds Journalism Institute, a center at the Missouri School of Journalism that explores the intersection of journalism and technology. Trusting News will collaborate with 53 newsrooms on experiments that investigate strategies journalists can use to show readers that their material is trustworthy and to encourage readers to share reliable work. The entire effort is built around helping journalists "teach users to be smarter consumers and sharers." Participating newsrooms include the Fort Worth Star-Telegram, St. Louis Magazine, Religion News Service, CALmatters, Discourse Media, and USA Today, to name a few.

Studies have shown that emotionally charged content that shows dominance tends to elicit the most engagement online, leading it to be shared more on social media and favored by search engine algorithms. In this way, the platforms that enable individuals to share news online incentivize sensationalism. Trusting News recognizes the essential question in this media environment: “What can credible journalists do to stand out?”

The project is led by Joy Mayer, a community engagement strategist and former journalist and academic, and Lynn Walsh, an Emmy Award-winning journalist. In 2016, the Trusting News team interviewed journalists and readers to begin exploring "the elements that create trust and credibility between communicator and receiver." Trusting News reports that a few themes emerged from this initial research: journalists should be transparent about their motives and the story's background, engage readers in a conversation about their reporting, and encourage readers to share their work.

The Trust Project at Markkula Center for Applied Ethics, Santa Clara University

The Trust Project's mission is to "provide clarity on news organizations' ethics and standards, the journalists' backgrounds and how they do their work," as reported by the Nieman Lab. Led by Sally Lehrman at the Markkula Center for Applied Ethics at Santa Clara University, The Trust Project was conceived in 2014 when major news organizations and tech companies agreed to come together to draft these standards. Participants include the Washington Post, the Economist, the Globe and Mail, Zeit, Facebook, Google, and Twitter. The collaborators developed eight core indicators to help readers understand where their information is coming from. These indicators were then standardized in content management systems (CMS) and site code so that search engines and platforms can recognize them. Schmidt's "truthiness" report in the Nieman Lab noted that the tech giants' involvement and their application of these indicators still seem experimental. Lehrman, however, is focused on pushing for transparency about journalists' practices so the public can make more informed decisions about whom to trust.

Center for Media Engagement at University of Texas at Austin

Launched as the “Engaging News Project” in 2013, the Center for Media Engagement works to provide newsrooms with “research-based techniques for engaging digital audiences in commercially viable and democratically beneficial ways.” The Center operates out of the Moody College of Communication at the University of Texas at Austin, and aspires to enable “a vibrant American news media that empowers citizens to understand, appreciate, and participate in the democratic exchange of ideas.”

The project focuses on conducting research, improving news organizations' resources, and encouraging collaboration among different efforts to connect reporters and their audiences. The Center's research investigates how news organizations can most effectively engage both active news readers and passive news consumers. On the practical side, the initiative aims to equip news producers and consumers with "content, tools, and strategies to quantitatively improve news and democratic engagement." The Center for Media Engagement also seeks to foster collaboration among academia, newsrooms, and news-related organizations.

The Center for Media Engagement is supported by NII, the Google News Initiative, the Knight Foundation, Facebook, The Coral Project, the Democracy Fund, the Hewlett Foundation, the Rita Allen Foundation, and others. The Center will consider its mission complete when media practices are informed by research and when media "organizations are routinely making decisions based on both business and democratic considerations."

The Oxford Internet Institute Computational Propaganda Lab

The Oxford Internet Institute Computational Propaganda Lab, funded by the Ford Foundation, champions the health of public life through a team of researchers investigating the social and political impact of computational propaganda. Computational propaganda describes the use of information and communication technologies and social media platforms to manipulate perceptions, affect cognition, and influence behavior. The Lab takes a multidisciplinary approach, drawing on organizational sociology, human-computer interaction studies, communications studies, information science, and political science. It is housed at the Oxford Internet Institute at the University of Oxford, and its research will also cover the U.S.

Upstart Initiatives

First Draft

First Draft started in 2015 as a coalition of nine organizations, established and supported by Google News Lab, that worked on verifying information circulating online. The coalition worked to improve verification practices and to create ethical standards in newsrooms and classrooms around the world for including user-generated content in reporting. First Draft expanded its coalition with a partner network initiative in September 2016, which brings together newsrooms, technology companies, human rights organizations, and universities across the globe to share best practices, scale training, and champion collaboration. After the 2016 U.S. election, First Draft shifted to address mis- and disinformation online by developing experimental projects, researching those projects, and creating training materials based on that research. Building on ProPublica's Electionland model, First Draft assembled 37 newsroom partners in France to debunk mis- and disinformation around the French presidential election with CrossCheck France, its 2017 Online Journalism Award-winning project. First Draft refined the CrossCheck model for Comprova, its 2018 collaborative verification project for the Brazilian elections, which brought together 24 newsrooms to combat rumors online and was the first non-profit project to gain API access to the closed-messaging app WhatsApp. First Draft plans to run election projects in Nigeria, India, and Central America in 2019.

Data & Society

Data & Society, a four-year-old nonprofit based in Brooklyn, began with funding from Microsoft. Today, it counts close to 50 funders backing its work, including the foundation of Twitter co-founder Evan Williams and his wife Sara, Craig Newmark Philanthropies, the Alfred P. Sloan Foundation, and a number of other well-known foundations. Data & Society focuses on accessible and activist research for journalists, the public, and researchers, and it is out front as one of the most vocal groups fighting disinformation through its Media Manipulation Initiative, led by Joan Donovan. Donovan and her team of 15 researchers are studying ways to help news organizations, civil society, platforms, and policymakers develop an informed understanding of the relationship between technical research and socio-political outcomes. This work includes assessing strategic manipulation, encoding fairness and accountability into technical systems, and conducting ethnographic research.

Known for its hip, Brooklyn-designed publications, Data & Society has built its brand on research heft. Alice Marwick and Rebecca Lewis's Media Manipulation and Disinformation Online and Whitney Phillips's The Oxygen of Amplification circulated widely within the industry. Data & Society researchers quickly became keystone voices at disinformation conferences and gatherings held in the U.S. over the past 18 months. The organization recently announced its own lab for civil society projects, the Disinformation Action Lab, which is launching two pilot projects: the Data Integrity Project will focus on improving feedback loops around adversarial actors who strategically deploy disinformation, while Data Voids will bring together artists, journalists, comedians, and developers to fill data voids with humor and redirect people away from darker places on the internet.

The Oxygen of Amplification, Phillips's ethnographic research on trolling and hate on the internet, immediately became a guide for those working in the disinformation space. In the study's tips for reporters, Phillips outlines eight broad categories for reporters to consider. She writes, "Journalists, particularly those assigned to politics and technology beats, were presented with a unique challenge before, during, and after the 2016 U.S. presidential election. The bigoted, dehumanizing, and manipulative messages emanating from extremist corners of the internet were impossible, and maybe even unethical, to ignore."

NewsGuard

NewsGuard, a for-profit startup with $6 million in funding, will research and rate thousands of news sources, licensing these findings to social media platforms and search engines in order to inform readers about the news content they are consuming. Co-founder Steven Brill says, "We're going to apply common sense to a problem that the algorithms haven't been able to solve." That sums up the common front these initiatives are pursuing, some as a public service to protect the free press and democracy and some as a paid service. Brill is the founder of Court TV, The American Lawyer Magazine, American Lawyer Media, Brill's Content Magazine, Journalism Online, and The Yale Journalism Initiative.

NewsGuard plans to hire dozens of journalists to serve as news analysts, reading and reviewing the 7,500 news and information sites that account for 98% of news consumption in the U.S. NewsGuard will assign each site a red, green, or yellow "nutrition label," accompanied by a 200- to 300-word description that provides background on the publication. This description will include information about the publication's ownership, financial backing, history, and reliability, as well as how readers can complain if they identify an issue with material the publication has produced. In addition to licensing this data to social media sites and other platforms, NewsGuard plans to license its work to advertisers that could be hurt by partnering with a website that promotes disinformation. Brill reasons that NewsGuard's licensing fees will be a fraction of what these advertisers already pay lawyers and PR firms to manage the risk of advertising on shady news sites.

Services like NewsGuard face one significant obstacle: bias. NewsGuard intends to address accusations of bias by publishing the names and backgrounds of the writers of every review and by establishing a public system through which readers can submit complaints and appeals. As tech platforms continue to resist taking on an editorial role, the question remains: who will assume the editorial voice in this new digital information world? Will these initiatives pick up where the platforms have refused to? As these experiments take shape, this conversation will continue.

Deepnews.ai