Disclaimer: This is a working paper of the Democracy and Internet Governance Project at the Shorenstein Center. As a working paper it represents research in progress. This paper represents the opinions of the authors and is the product of professional research. It is not meant to represent the position or opinions of the Shorenstein Center, the Harvard Kennedy School, Harvard University as a whole, nor the official position of any staff members or faculty. We welcome comments on the paper and any observations on where the paper may present errors or incomplete observations, which we can address in due course.

Trigger Warning: this document references instances of hate speech, violence, and Islamophobia related to the use of social media. It contains references to murder, riots, and violent rhetoric targeting specific communities. These topics may be distressing to certain readers, whom we advise to proceed with caution.

Case Study Goals:

- Highlight potential conflicts that may arise between political and economic considerations and integrity in certain country contexts

- Discuss the role the media can play in reviewing governance and integrity practices at tech companies

- Highlight benefits and risks/sensitivities from the sharing of internal company information (e.g., the Facebook Papers leaked by Frances Haugen), including how this information can be effectively disseminated and acted upon

Executive Summary

This case study examines Facebook’s failure to consistently enforce its community guidelines on hate speech and ‘violence and incitement’ in India due to internal trade-offs between integrity and political objectives. It highlights the role that media outlets supported by internal stakeholders at the company played in increasing transparency around governance processes, leading to internal change.

The Public Interest Tech Lab and the Shorenstein Center at the Harvard Kennedy School have created FBarchive, a curated collection of “The Facebook Papers.”[i] Internal documents in FBarchive illustrate concerns from employees at Facebook that the company was not doing enough to curb hate speech, violence and incitement in India. This includes evidence of groups and pages with “inflammatory and misleading” anti-Muslim content propagated by the RSS (Rashtriya Swayamsevak Sangh, a right-wing paramilitary group closely allied with the BJP), anti-Muslim narratives spread through coordinated social media campaigns, and the designation of India as one of five countries at “severe risk” or worse for societal violence, alongside Ethiopia, Myanmar, Syria and Yemen.

The Wall Street Journal (WSJ) published two articles in August 2020 suggesting a likely conflict between Facebook’s moderation practices and its political and economic objectives in the country. Central to this analysis was the case of Indian politician R. Raja Singh, who used the platform to say “Rohingya Muslims should be shot,” to call Muslims traitors, and to threaten to raze mosques. By the time of the WSJ’s reporting, no action had been taken, despite Facebook’s moderation teams recommending in March 2020 that Singh be removed from the company’s platforms.

The WSJ coverage noted the importance of India as a core market for Facebook and WhatsApp, and the consequent importance of staying on the side of the BJP (Bharatiya Janata Party), the party of the incumbent government, and regulators. The coverage also centered its criticisms on one Facebook employee, Ankhi Das, then the company’s most senior Public Policy executive in India. Das had opposed the removal of R. Raja Singh from the platform and had intervened in the cases of at least three other Hindu Nationalist individuals flagged internally for promoting or participating in violence. The article also cited personal activities by Das to highlight her pro-BJP convictions. It claimed that Das’s actions were part of “a broader pattern of favoritism by Facebook towards [Narendra] Modi’s BJP party and Hindu hard-liners.”

Facebook’s leadership initially rejected allegations of bias and defended Ankhi Das’s conduct. However, an FBarchive document shows that the WSJ’s coverage provoked internal discussion at Facebook, where employees challenged this stance. The coverage also created momentum for public pressure from a coalition of local opposition politicians, civil society organizations and internal stakeholders at Facebook. This was followed by Singh’s removal from the platform and his designation as a “dangerous individual” in September 2020, and by Das’s resignation from Facebook in October 2020.

The case highlights the crucial role media outlets can play in increasing the transparency of governance standards at private organizations, and how this can in turn effect change. It also demonstrates the power of coalition-building in effecting change: the internal information provided by employees was crucial to inform press coverage, and press coverage in turn catalyzed public pressure on Facebook to address internal governance issues, resulting in R. Raja Singh’s removal from the platform and Ankhi Das’s resignation. Furthermore, the actions of Facebook employees challenging leadership show that big tech companies are not monoliths; rather, they comprise stakeholder groups with multiple and at times conflicting priorities.

The political dynamics of this case point to a broader issue: how digital platforms balance safety and integrity with commercial and political concerns. The Indian government has the power to restrict its citizens from accessing Facebook, and the internet more broadly, and has a track record of doing so. The digital rights advocacy group Access Now found that India was the leading country globally for internet shutdowns for five successive years. There is therefore a balance to strike between being able to operate with integrity and being able to operate at all.

The personalized nature of the coverage should be examined. Das’s resignation can be viewed as an outcome of due process for her conduct, though one which played out in public rather than through Facebook’s internal governance processes. However, if her resignation does not change the underlying organizational calculus of integrity being traded off for political and commercial considerations in India, it could absolve Facebook of the responsibility of addressing this broader issue. As a representative from Facebook states, “policy decisions are made on an organizational and not an individual level.”

Where there are demonstrable risks and harms, there is a duty of care to address them. In this case, the purported internal bias towards the BJP set a dangerous precedent in which social media platforms could be leveraged for political gain. There were clear shortcomings in Facebook’s governance of hate speech in India, and it was important to make the resulting risks and harms transparent to the public. The case illustrates the role of the media as an important actor in doing so.

Historical context

Anti-minority and anti-Muslim sentiments have escalated in India in the last decade. The Council on Foreign Relations (CFR) relays that “experts say anti-Muslim sentiments have heightened under the leadership of Prime Minister Narendra Modi and the ruling Bharatiya Janata Party (BJP), which has pursued a Hindu nationalist agenda since being elected to power in 2014.”[ii]

This has spilled over into violence, with notable incidents including the Muzaffarnagar riots in 2013, where more than 60 people were killed and an estimated 50,000 displaced, and the New Delhi clashes in 2020, where around 50 people were killed.[iii] The CFR narrates that “Hindu mob attacks [against Muslims] have become so common in recent years that India’s Supreme Court warned they could become the ‘new normal’.” A 2019-20 statistical risk analysis by the Early Warning Project ranked India as 13th globally for the risk of genocide.[iv]

In a letter written to Mark Zuckerberg and Sheryl Sandberg in September 2020, a group of 41 civil rights groups noted that “mass riots in India spurred on by content posted on Facebook [had] been occurring for at least [the past] seven years.”[v] The letter refers to an early reported instance in 2013, when a mislabeled video on the platform triggered the Muzaffarnagar riots, resulting in 63 deaths.[vi] The video was initially shared by a BJP politician, Suresh Rana, who was later arrested.[vii] This was an early example of right-wing nationalist movements using the platform to incite violence.

As Archana Venkatesh, a researcher at Ohio State University, narrates, “Critics of the BJP have noted that the party based its campaign around a rhetoric of ‘Hindutva’ or Hindu Nationalism. The BJP popularized an ethnically divisive discourse in order to gain Hindu votes and create a culture of majoritarianism that would exclude minority communities from India.”[viii]

“An anti-Muslim stance was central to this process,” she adds.

Alongside this is a track record of curbing online dissent, with Indian legislators and regulators regularly restricting their citizens from accessing Facebook as a platform, as well as the internet more broadly. The digital rights advocacy group Access Now found that India accounted for 58% of all documented global shutdowns in 2022 and was the leading country for shutdowns over five successive years.[ix]

The Facebook Papers – internal knowledge of governance practices in India

A set of internal Facebook documents, termed “The Facebook Papers,” were provided to Congress and news outlets around the world by Frances Haugen, a former Facebook employee and whistleblower.[x] These documents were also provided to the Public Interest Tech Lab at the Shorenstein Center at Harvard Kennedy School, where they were used to create “FBarchive,” a searchable, curated collection of these documents.[xi]

Internal documents from FBarchive illustrate concerns from certain employees and stakeholder groups at the company that Facebook was not doing enough to curb hate speech, violence and incitement in India. One such document[xii] presented evidence of groups and pages “replete with inflammatory and misleading anti-Muslim content propagated by the RSS” (Rashtriya Swayamsevak Sangh, a right-wing paramilitary group closely allied with the BJP). The document’s authors found that these anti-Muslim narratives had “violence and intimidation” intent and included messaging calling for Muslim populations to be ousted from India or for Muslim population control laws to be introduced. They also found dehumanizing posts comparing Muslims to “pigs” and “dogs,” such as the screenshot in Figure 2, captioned “Pakistani pig,” as well as misinformation claiming that the Quran calls for men to rape their female family members.

Figure 1 – Screenshot of example of post with political messaging to oust Rohingya Muslim populations from India

Figure 2 – Screenshot of example of dehumanizing anti-Muslim content, captioned “Pakistani pig”

The document also provides evidence of these narratives being spread through more coordinated social media campaigns.[xiii] The “Love Jihad” campaign, spread through the hashtag “#stop_love_jihad,” accused Muslim men of luring Hindu women with the promise of marriage or love in order to convert them to Islam. The #Boycott Halal campaign, which circulated widely in West Bengal, Kerala, Assam and Tamil Nadu, is part of a larger Islamophobic campaign propagated by pro-BJP and pro-RSS entities. The campaign claimed that Muslim clerics spit on food to “make it halal” and to spread COVID-19.

Figure 3 – Screenshot of video spreading the “Love Jihad” campaign

Another internal document, entitled “2021 Integrity Prioritization for At-Risk Countries,” classifies India as one of the five countries at “severe risk” or worse for societal violence, alongside Ethiopia, Myanmar, Syria and Yemen.[xiv] This reflects not only the external risk of violence but also the poor performance and inadequate capabilities of Facebook’s integrity systems outside the US. The issues identified include limited localized understanding of problems outside the US, a lack of content moderation classifiers in local non-English languages, and a shortage of human moderators, including labelers, content reviewers and third-party fact-checkers.[xv]

Wall Street Journal coverage of the case

On August 14th 2020, the Wall Street Journal published an article reporting on Facebook’s handling of posts by Indian politician R. Raja Singh, who used the platform to say “Rohingya Muslims should be shot,” to call Muslims traitors, and to threaten to raze mosques.[xvi]

By March 2020, Facebook’s moderation teams had concluded that Singh had not only violated hate speech rules but also qualified as a “dangerous individual,” based on his off-platform activities.[xvii] They recommended that he be permanently banned from the company’s platforms. As of September 2020, Singh had “60 [criminal] cases against him,” mostly related to hate speech.[xviii] However, at the time the article was published, Singh was still active on Facebook and Instagram.

The article suggested a likely conflict between moderation practices and political and economic considerations.[xix] It quoted Alex Stamos, Director of Stanford University’s Internet Observatory, who had said in May 2020, “A core problem at Facebook is that one policy org is responsible for both the rules of the platform and keeping governments happy.”

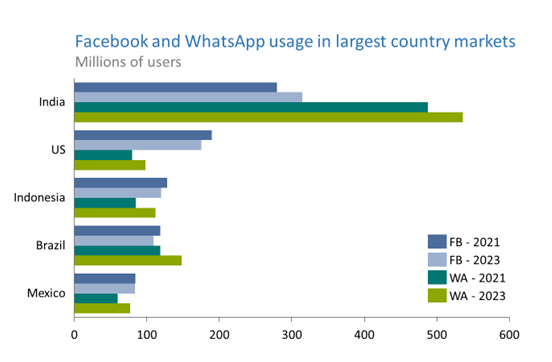

India represented, and continues to represent, a core market for Facebook, being the leading market for both the Facebook[xx] and WhatsApp[xxi] platforms by daily active users. In April 2020, Facebook spent $5.7bn on a partnership with Indian telecoms operator Jio, its largest foreign investment at the time of writing.[xxii] Furthermore, India’s ban of TikTok in June 2020, and its earlier blocking of “Free Basics,” a Facebook-centric telecommunications service, in 2016, highlighted the consequences of not complying with regulators and the country’s political leadership.

Figure 4 – Facebook and WhatsApp usage in largest country markets (sources: Facebook 2021 figures, as presented in the Wall Street Journal, from “We are Social”; Facebook 2023 figures from Statista; WhatsApp 2021 and 2023 figures from “World Population Review”)

The article centered its criticisms on Facebook’s then most senior Public Policy executive in India, Ankhi Das.[xxiii] It noted that Das opposed the application of internal hate-speech rules to R. Raja Singh and to at least three other Hindu Nationalist individuals and groups flagged internally for promoting or participating in violence. Moreover, citing current and former Facebook employees, it claimed that Das’s actions were part of “a broader pattern of favoritism by Facebook towards [Narendra] Modi’s BJP party and Hindu hard-liners.”

The article then cited previous personal activities by Das that highlight her pro-BJP convictions.[xxiv] These included a 2017 essay in which she praised Narendra Modi, and her sharing, on her own Facebook page, of a post by a former police official claiming to be Muslim, which called India’s Muslims traditionally a “degenerate community” for whom “Nothing except purity of religion and implementation of Shariah [Islamic Law] matter.” In response she wrote, “the post spoke to me last night. As it should to the rest of India.”

The initial 14th August article was followed by a second WSJ article on August 30th 2020, a feature with Das as its subject. It detailed her support, expressed on an internal Facebook discussion board from 2012 to 2014, for Narendra Modi’s first prime ministerial campaign and for pro-BJP groups.[xxv] The quoted messages suggest that she was explicitly aware of trading for political favors. “We’ve been lobbying them for months to include many of our top priorities,” she is quoted as saying of the BJP, adding, “Now they just need to go and win the elections.”

The piece was also critical of Facebook’s relationship with the BJP, noting the platform’s failure to act after discovering the party’s non-compliance with Facebook’s political ad transparency requirements.[xxvi] The BJP was found to have spent thousands of dollars creating organizations that did not disclose their connection to the party. Facebook took no action in response, in contrast with its treatment of the opposition Congress Party.

The reaction from Facebook’s leadership

The August 30th WSJ article includes Facebook’s official response to the initial coverage, delivered by company spokesperson Andy Stone: “These posts [by Ankhi Das] are taken out of context and don’t represent the full scope of Facebook’s efforts to support the use of our platform by parties across the Indian political spectrum.”[xxvii]

This position is also reflected in internal communications from senior leadership to employees on the company’s internal message board.[xxviii]

In this communication, leadership rejected allegations of political bias:

| “The story implies that we are biased here in India in our enforcement of our content policies on alleged hate speech by political leaders due to relationships our leaders have with key politicians.

We carefully reviewed each of the reporter’s queries and are confident that the article’s claim that political affiliations influence decision making in India is inaccurate and without merit.” |

They also defended Ankhi Das’s conduct:

| “Over the last 18 months, I have had the opportunity to work with [redacted] closely. I have only seen work of the highest integrity from her. This article does not reflect the person I know or the extraordinarily complex issues we face everyday that benefits from [redacted] and the Public Policy team’s expertise.”

“We believe freedom of expression is important, and support the right of our employees to speak openly and respectfully about issues that are important to them” |

They noted that the “hateful, harmful” content referenced in the August 14th WSJ article had since been taken down:

| “We want to make it clear that we stand with our Muslim colleagues against anti-Muslim bigotry and hate in any form. The WSJ article made reference to a number of pieces of content that are hateful, harmful, and do not belong on our services; we removed this content as soon as we were made aware of it and will continue to take down content that goes against our Community Standards.” |

They also noted that a decision had not yet been taken on the designation of R. Raja Singh as a “dangerous individual”:

| “Decisions about designating people or groups as “dangerous individuals or organizations” are different – these are based on a combination of signals and are made by our dangerous organizations team who have deep expertise in terrorism and organized hate and pay attention to global and regional trends. The concerned individual’s designation is currently under review” |

They noted that there is a cross-functional review process for decisions to designate “dangerous individuals,” and explained the role the Public Policy team plays in this process, which includes factoring in the “socio-political context” and “regulatory risks” of enforcement options.

| “In addition to the external input that informs our designation policy, we also solicit inputs from an internal cross-functional team when we’re making decisions about individual designations. These decisions cannot and are not made unilaterally by just one person; rather, they are inclusive of different views from around the company, a process that is critical to making sure we consider, understand and account for both the local and global contexts.

In the case of designations specific to India, for example, it is standard practice for our dangerous organizations team to reach out to the India public policy team to get their views, but this is just one input into the process and the India public policy team is not the ultimate decision maker” |

Overall, although Facebook removed the posts by R. Raja Singh referenced in the article, it rejected allegations of political bias at an organizational level and on behalf of Ankhi Das. Furthermore, no action had yet been taken on the broader question of Singh’s designation as a “dangerous individual.”

Internal reaction from employees at Facebook

In the resulting discussions among employees at Facebook, the response was more mixed, with many asking questions or making comments to hold company leadership to account. (Note: these quotations are from comments made on an internal discussion board at Facebook, as presented in the FBarchive document “August 2020 Update on Integrity Enforcement, Diversity, and Inclusion Issues for Facebook in India.” The identities of the employees are redacted.)

On the slow moderation process in R. Raja Singh’s case:

| “I understand that the designation process is going on and has been going on for more than 6 months. Is this, in your view, normal? Are there cases where we have designated individuals of similar political prominence in less time? It seems quite surprising that this took 6 months when for e.g. CCI approval for FB’s investment in Jio took only 2 months to obtain” |

On the influence held by the Indian Public Policy team in decision-making:

| “In this case, I think it would be beneficial to understand what those other cross-functional groups recommended – i.e. was there general consensus that this person should be designated with Public Policy being the only dissent/roadblock?” |

On the broader effectiveness of moderation processes:

| “On this point here: “We rely heavily on our community to identify and report potential hate speech and remove it as soon as we are made aware of it.” Are you suggesting that no one flagged content from a prominent politician calling for violence against Muslims?” |

| “The fact that such content from prominent accounts which is under review for designation, remains on our platforms until someone reports it, does feel inadequate.

Also, if reporting is the primary way for taking down this kind of content, how likely is it that people who don’t share beliefs of this kind see this content – and if it remains within groups of like-minded people, are we ok with it? Does it not help fuel these misplaced, hateful beliefs? Would be great if we can use this instance as an opportunity to take a hard look at why this kind of content stayed up and what we can do differently” |

| “Reports suggest that we took action and removed posts after the WSJ article. Not a good look and raises a number of questions

As it relates to T. Raja Singh given the speed we move at its hard to believe that a decision should take 5 months. Certainly complicated with many moving parts but the article alleges a conclusion was arrived at back in March. At a minimum again the optics don’t look good. Overall this incident is just sad – it breaks trust and makes it that much harder to build community around the world” |

On Ankhi Das’s conduct:

| “People’s lives are at risk in India and we are acting like the public comments from our leadership were harmless. We understand that things become complicated in the policy world but this is a trust issue for us as coworkers. We must hold ourselves to a higher standard as employees of this institution regardless of our personal feelings” |

| “If someone engages in communications or behaviors outside of work that carry over into work and create an uncomfortable or unsafe work environment, then we would investigate the situation and determine whether additional interventions are appropriate to maintain a safe and respectful work environment for our people” |

| “A head of policy amplifying a post that calls the Muslim community in India “degenerate” and a 2nd post that calls on Muslims in India to be more responsible and lays the blame for COVID spread in the country at the feet of this minority. Free speech yes, but assuming good intent at a minimum its not a good I look coming from someone with this amount of clout / power.

This is especially true given a policy environment where communal tensions are high, people fan content to raise hate towards others, and real-world violence most certainly occurs” |

Another Wall Street Journal article, published on 21st August, documented an organized response from Muslim@, the internal group for Muslim employees at Facebook.[xxix] Members of this group from India, the U.S. and the Middle East wrote a letter to leadership advocating for a “more transparent” policy-enforcement process for high-profile users that is “less susceptible to political influence.” The letter also described “a pattern of favoritism in India towards the BJP and Hindu hard-liners.”

“Many of us believe our organizational structure combining content policy and government affairs is fundamentally flawed,” the letter stated. It went on to add, “There was no acknowledgment that we might have made mistakes in allowing such content to remain on the platform. This is deeply saddening and can be viewed as indicating a lack of empathy for the Muslim experience at best and a tacit condoning of this behavior at worst.”[xxx]

External response from civil society and political opposition

Internal reactions from employees at Facebook were mirrored by an external response. On 9th September, a coalition of 41 civil rights groups sent an open letter to Mark Zuckerberg and Sheryl Sandberg, citing the potential for these events, and Facebook’s broader handling of moderation practices in India, to escalate violence in the country.[xxxi] Citing prior violent acts linked to Facebook, including genocide in Myanmar and a shooting in Kenosha, Wisconsin, these groups wrote, “Facebook should not be complicit in more offline violence much less another genocide, but the pattern of inaction displayed by the company is reckless to the point of complicity. We write to urge you to take decisive action to address Facebook India’s bias and failure to address dangerous content in India.”[xxxii]

There was also a response from opposition members of Parliament in India. Shashi Tharoor, a member of the opposition Congress party and head of the Parliamentary information-technology committee, tweeted on 15th August that the committee would seek input from Facebook on “safeguarding citizens,” preventing the misuse of social media platforms, and the company’s plans to address hate speech.[xxxiii] The Congress party’s General Secretary K.C. Venugopal demanded a parliamentary probe on behalf of the party, calling on Facebook to investigate its India operation, to make transparent “all instances of hate speech,” and to consider personnel changes in the management team. Others, including Raghav Chadha, spokesman for the opposition Aam Aadmi party, and the Internet Freedom Foundation, an India-based digital rights advocate, demanded hearings for senior Facebook executives on the issue.[xxxiv]

Outcomes

The Journal’s coverage was followed by Facebook’s removal of R. Raja Singh from the platform on 3rd September 2020 and his designation as a dangerous individual.[xxxv] “We have banned Raja Singh from Facebook for violating our policy prohibiting those that promote or engage with violence and hate from having a presence on our platform. The process for evaluating potential violators is extensive and what led us to our decision to remove his account,” the company wrote in an email statement to the Times of India.

Following this, Ankhi Das resigned from Facebook on 27th October. Although the official reason given was that Das was leaving Facebook to “pursue public service,” her resignation is likely connected to the internal and public response to this incident.[xxxvi] The Verge notes that “she resigned her position after months of escalating pressure from activists.”

Is personalized coverage productive?

Both the 14th August and 30th August WSJ articles focus on Ankhi Das, with the latter being a feature about her conduct. The personalized nature of this coverage is emphasized by the decision to feature a photo of her in both articles; Figure 5 shows the headline and lead picture of the 30th August article.[xxxvii] Although the evidence presented shows Das to be demonstrably culpable of problematic behaviors, including amplifying hate speech and abusing her position to favor a political party, she was enabled in these behaviors by Facebook as a company. This is evident not only in her track record of behavior but also in the response from the company’s leadership, which defended her conduct. The articles placed Das, who was not a publicly prominent figure, at the center of the issue, rather than Facebook as an organization.

Moreover, in this case, the personalized nature of the coverage not only put Das in danger but, through her response, endangered others. Following the initial August 14th article, Das filed a complaint with the Delhi Police Cyber Cell on August 16th after reportedly receiving threats and offensive messages on social media, naming at least five individuals and online accounts and calling for their immediate arrest.[xxxviii] She publicized this complaint in local media.

Amongst the cited individuals and accounts was a journalist, Awesh Tiwari, State Bureau Chief of a local news channel.[xxxix] As reported by the Committee to Protect Journalists, Das’s complaint asked for an investigation to be opened against Tiwari for sexual harassment, defamation and criminal intimidation. Under the Indian Penal Code, these offenses can carry fines as well as prison terms of up to two years for sexual harassment, up to two years for defamation, and up to seven years for criminal intimidation. The complaint cites a post made by Tiwari on Facebook.

This post, made on 16th August and seen in Figure 6 below, makes political criticisms of Das but does not appear to support her accusations. Her complaint against Tiwari drew criticism from the Committee to Protect Journalists, which saw the charges as a potential threat to the free press.[xl] These accusations resulted in Tiwari receiving threats, including 11 calls from unknown numbers threatening him with imprisonment, lawsuits and physical harm.

Figure 5 – Extract from August 30th WSJ article

Figure 6 – Awesh Tiwari’s Facebook post cited in Das’s criminal complaint (translated from Hindi; a copy of this post is presented in an article by local news outlet Gauri Lankesh News, published on 18 August 2020)

Discussion

The case study highlights the clear tension between preserving the integrity of Facebook’s community guidelines and supporting political objectives in India. Though there is a strong commercial incentive to secure the favor of the current government, there are also potentially legitimate operational concerns. Indian legislators and regulators can restrict their citizens from accessing Facebook, as well as the internet more broadly, and do so frequently: India accounted for 58% of all documented global shutdowns in 2022 and was the leading country for shutdowns for five successive years.[xli] There is therefore a balance to strike between being able to operate with integrity and being able to operate at all. Furthermore, the platform may have to navigate allegations of unequal treatment across countries: if inflammatory BJP politicians are treated a certain way, there will be questions about whether inflammatory politicians in the US or Europe receive equivalent treatment.

Nevertheless, the internal evidence presented in FBarchive highlights the very real risks and harms to minority communities that resulted from the failure to enforce integrity measures in India, illustrated by India’s position as one of the five countries most at risk for societal violence in 2021. This was a clear signal that governance standards needed to change.

The Wall Street Journal’s coverage of the R. Raja Singh incident was an effective example of the role the media can play in increasing transparency around these governance standards in order to effect change. The interplay between the media and internal stakeholders was particularly instrumental. Facebook employees provided critical internal evidence to support the coverage, and produced an organized response advocating for a more transparent hate speech enforcement process that is less susceptible to political influence. It was this movement, supported by public pressure from civil society and political officials, that led Facebook to act and ban Singh from the platform. This shows that big tech companies are not monoliths, but comprise stakeholder groups with multiple and at times conflicting priorities.

This incident raises questions about the effectiveness of coverage centered on an individual, and the sensitivity with which such coverage should be handled. Ankhi Das’s resignation can be seen as an outcome of due process for her conduct, though one which played out in public rather than through Facebook’s internal governance processes. However, if her resignation does not change the underlying organizational calculus, in which integrity is traded off against political and commercial considerations in India, it could allow Facebook to sidestep responsibility for addressing this broader issue. Moreover, the resulting threats to the safety of both Das and Tiwari highlight the sensitive subject matter and political context of the coverage. When shining a spotlight on such issues, it is important to do so with care to avoid creating greater risks or harms.

Nevertheless, where there are demonstrable risks and harms – and loss of life due to violence is as demonstrable as they come – there is a duty of care to address them. The purported internal bias towards the BJP in this case set a dangerous precedent for social media platforms to be leveraged for political gain, here to the detriment of minority groups. There were clear shortcomings in Facebook’s governance of hate speech in India, and it was important to make the resulting risks and harms transparent to the public. The case illustrates the role of the media as an important actor in doing so.

Discussion questions

- How successful was the WSJ’s coverage in achieving its objectives?

- What mechanisms can media use to hold digital platforms accountable?

- How do digital platforms handle accusations of political bias, especially across different cultural and political contexts?

- Should the lobbying and integrity-setting functions of public policy teams be separated? What would be the consequences of doing so?

- How do companies balance political interests and integrity, especially in contexts where countries hold strong negotiating power?

- Is whistleblowing ethical?

- Is targeted reporting around an individual justified?

- How widespread is alleged political bias by platforms – is this an isolated case?

- Are the issues presented in this case systemic to social media companies?

Endnotes

[i] ‘fbarchive.org’, accessed 11 October 2023, https://fbarchive.org/.

[ii] ‘India’s Muslims: An Increasingly Marginalized Population’, Council on Foreign Relations, accessed 16 October 2023, https://www.cfr.org/backgrounder/india-muslims-marginalized-population-bjp-modi.

[iii] Ibid

[iv] ‘Country – India – Early Warning Project’, accessed 16 October 2023, https://earlywarningproject.ushmm.org/countries/india.

[v] ‘Open Letter to Mark Zuckerberg and Sheryl Sandberg from 41 Civil Rights Groups’, accessed 6 September 2023, https://cdn.vox-cdn.com/uploads/chorus_asset/file/21866414/FINALDEAR.MARK.FBINDIA.pdf.

[vi] ‘Muzaffarnagar Riots: Fake Video Spreads Hate on Social Media’, Hindustan Times, 10 September 2013, https://www.hindustantimes.com/india/muzaffarnagar-riots-fake-video-spreads-hate-on-social-media/story-WEOKBAcCOQcRb7X9Wb28qL.html.

[vii] ‘BJP MLA Suresh Rana Arrested for Muzaffarnagar Riots’, The Economic Times, 20 September 2013, https://economictimes.indiatimes.com/news/politics-and-nation/bjp-mla-suresh-rana-arrested-for-muzaffarnagar-riots/articleshow/22815590.cms?from=mdr.

[viii] ‘Right-Wing Politics in India’, Origins, accessed 11 October 2023, https://origins.osu.edu/article/right-wing-politics-india-Modi-Kashmir-election?language_content_entity=en.

[ix] Five Years in a Row

[x] ‘The Facebook Papers’, The Facebook Papers, accessed 11 October 2023, https://facebookpapers.com/.

[xi] ‘fbarchive.org’

[xii] Adversarial Harmful Networks

[xiii] Ibid

[xiv] 2021 Integrity Prioritization

[xv] Ibid

[xvi] Facebook’s Hate-Speech Rules

[xvii] Ibid

[xviii] Mallika Goel, ‘Facebook Row: BJP Leaders Hegde & Raja Singh’s Past Controversies’, TheQuint, 17 August 2020, https://www.thequint.com/news/india/facebook-row-past-controversies-of-anantkumar-hegde-raja-singh.

[xix] Ibid

[xx] Facebook Users by Country

[xxi] WhatsApp Users by Country

[xxii] Jeff Horwitz and Newley Purnell, ‘Facebook Takes $5.7 Billion Stake in India’s Jio Platforms’, Wall Street Journal, 22 April 2020, https://www.wsj.com/articles/facebook-takes-5-7-billion-stake-in-indias-jio-11587521592.

[xxiii] Facebook’s Hate-Speech Rules

[xxiv] Ibid

[xxv] Facebook Executive Supported India’s Modi

[xxvi] Ibid

[xxvii] Ibid

[xxviii] ‘August 2020 Update on Integrity Enforcement, Diversity, and Inclusion Issues for Facebook in India’, FBarchive Document odoc5456787897w32 (Green Edition). Frances Haugen [producer]. Cambridge, MA: FBarchive [distributor], 2022. Web. 15 May 2023. URL: fbarchive.org/user/doc/odoc5456787897w32

[xxix] Facebook Staff Demand

[xxx] Ibid

[xxxi] Facebook’s India Policy Chief

[xxxii] Open Letter to Mark Zuckerberg

[xxxiii] Facebook Faces Hate-Speech Questioning

[xxxiv] Ibid

[xxxv] ‘Facebook Bans T Raja Singh of BJP, Tags Him as a “Dangerous Individual”’, The Times of India, 4 September 2020, https://timesofindia.indiatimes.com/india/facebook-bans-t-raja-singh-of-bjp-tags-him-as-a-dangerous-individual/articleshow/77907922.cms.

[xxxvi] Russell Brandom, ‘Facebook India’s Controversial Policy Chief Has Resigned’, The Verge, 27 October 2020, https://www.theverge.com/2020/10/27/21536149/ankhi-das-facebook-india-resigned-quit-bjp-hindu-muslim-conflict.

[xxxvii] Facebook Executive Supported India’s Modi

[xxxviii] Erik Crouch, ‘Facebook India Executive Files Criminal Complaint against Journalist’, Committee to Protect Journalists, 19 August 2020, https://cpj.org/2020/08/facebook-india-executive-files-criminal-complaint-against-journalist-for-facebook-post/.

[xxxix] Facebook India Executive Files

[xl] Nick Statt, ‘Facebook Linked to Free Speech Crackdown in India after Lobbyist Files Charges’, The Verge, 19 August 2020, https://www.theverge.com/2020/8/19/21375798/facebook-india-free-speech-criminal-complaint-journalist.

[xli] Five Years in a Row