Disclaimer: This is a working paper, and hence it represents research in progress. This paper represents the opinions of the authors and is the product of professional research. It is not meant to represent the position or opinions of the Shorenstein Center, the Harvard Kennedy School, Harvard University as a whole, nor the official position of any staff members or faculty. We welcome comments on the paper and any observations on where the paper may present errors or incomplete observations, which we can address in due course.

Please also note this paper makes several references to appendices. Though these appendices are not essential to the narrative, they are helpful to refer to for additional context. Download a PDF copy of this paper, including appendices here.

Case Study Goals:

- Examine internal governance around product development and integrity processes at tech companies

- Understand the extent of internal research and evidence available at tech companies including Meta, and how this can be used to address evidence gaps around social media and mental health harms to teens

- Understand the extent of internal research and evidence, and how this can be used to generate solutions to address risks / harms related to youth mental health

- Understand where there may be conflicts between commercial interests and integrity objectives, and how these play out in internal governance

- Generate discussion on models to better use internal research and evidence at tech companies, and the extent to which it should be brought into the public domain

Executive Summary

In September 2021, Frances Haugen, a former Facebook employee and whistleblower, revealed “The Facebook Papers” to Congress and global news outlets.[i] These disclosures, accompanied by eight whistleblower reports to the SEC, emphasized concerns about teenagers’ mental and physical health.[ii] While media coverage provided an overview of these findings, there remains a lack of comprehensive evidence in the public domain concerning the relationship between technology use and mental health concerns among teenagers. The US Surgeon General, in their May 2023 Advisory, identified gaps in public knowledge and urged tech companies to share their data with independent researchers.[iii]

The Public Interest Tech Lab and the Shorenstein Center at the Harvard Kennedy School have created FBarchive, a curated collection of “The Facebook Papers.”[iv] Using this archive, we provide detailed insights into all 19 studies contained within the documents accompanying the SEC whistleblower report, their methodologies, and findings (Appendix A). Additionally, we present a comprehensive list of the harms mentioned in these studies and map their findings to the Surgeon General’s evidence gaps (Appendix B). We also outline 70 distinct recommendations from these studies and their key themes (Appendix C).

Among these studies is a thorough examination of the product development process for “Project Daisy,” an internal initiative at Facebook and Instagram designed to reduce the public visibility of like counts on user posts.[v] Project Daisy was introduced by Facebook and Instagram in November 2019 to alleviate the stress and anxiety that teenagers often experience due to the number of likes their posts receive. The internal Meta documents also suggested that it was intended to improve the company’s reputation and demonstrate a commitment to teen well-being.[vi] The prototype featured an option to hide like counts from viewers while still allowing post creators to see their own likes.

Extensive user testing was conducted across both Facebook and Instagram.[vii] Results indicated reductions in commercial metrics related to revenues, ads and user engagement. However, Project Daisy also reduced the importance of likes for teens and had positive effects on their well-being. Project Daisy also faced challenges in identifying popular posts, which was seen as a drawback.

Meta decided to launch Project Daisy on Instagram and discontinue it on Facebook, a decision partly influenced by positive feedback from policy makers, press, and academics.[viii] Test results for teens were similar across both platforms, but teens were a much smaller proportion of the Facebook user base. The Instagram launch was then delayed until May 2021, and in the final version the feature was turned off by default, allowing users to opt in to hide like counts.

This case study underscores the flexibility tech companies have in designing interventions to mitigate potential harms without removing existing benefits. It also highlights potential issues in the product development process and the prioritization of commercial interests over product integrity, particularly the decisions to have the feature turned off by default, and the discontinuation of the Facebook project. Project Daisy is a relatively small intervention in the context of the array of product updates and content moderation decisions at Facebook’s disposal. It appears to have been strategically chosen to preserve commercial goals while being seen to tackle integrity issues. Moreover, recent coverage by CNN suggests that this prioritization of commercial interests is enforced by decision-makers at the most senior levels, including Mark Zuckerberg, despite considerable internal support for integrity initiatives.[ix] To address these concerns and potential harms to teenagers, collaboration between tech companies and external regulators is crucial. Tech giants possess the technical expertise and data needed to identify and address platform risks, while external regulators can ensure accountability and transparency. An inclusive approach, involving regulators, academics, and impacted communities, is essential to navigate and mitigate online platform risks effectively. Evidence from “The Facebook Papers” can serve as a blueprint for such collaboration and inform future efforts to protect the well-being of platform users, particularly teenagers.

Background Context – The Facebook Papers

In September 2021, Frances Haugen, a former Facebook employee and whistleblower, disclosed internal Facebook documents, “The Facebook Papers”, to Congress and news outlets around the world.[x] This was followed by eight whistleblower disclosures made to the SEC in 2021.[xi] Teenagers’ mental and physical health was a key topic of these disclosures, which included documents containing 19 internal Meta research studies on this topic.[xii]

Coverage of the disclosures by the Wall Street Journal[xiii] and other press outlets provides a broad overview of findings from these studies, but it is not comprehensive. Consequently, there is insufficient evidence in the public domain on the links between technology use and mental health concerns. Recent studies by researchers at Oxford University,[xiv] UC Irvine,[xv] the UK Medical Research Council,[xvi] and the Stanford Media Lab[xvii] find little evidence of links between social media use and mental health.

The US Surgeon General presented the evidence gaps in current public knowledge in their May 2023 Advisory and called for tech companies to share their data with independent researchers.[xviii] This was echoed by the Oxford research team, who called for more transparency from the tech sector, urging them to unlock their data for “neutral and independent investigation.”[xix]

Our team at the Public Interest Tech Lab and the Shorenstein Center at the Harvard Kennedy School has used “The Facebook Papers” to establish FBarchive, a curated, searchable, indexed collection of the documents.[xx] Informed by FBarchive, we provide:

- Further details on all 19 studies, including findings and methodologies (Appendix A)

- A comprehensive list of the harms cited in these studies, and an illustrative mapping of study findings to the evidence gaps put forward by the Surgeon General (Appendix B)

- Key themes and details from 70 distinct recommendations made in these studies, including product features, content moderation policies, and education & outreach initiatives (Appendix C)

Contained within these studies is a detailed review of the product development process for “Project Daisy”, an internal project at Facebook and Instagram to reduce public visibility of like counts on user posts.[xxi] In this discussion paper, we review this process and the implications for broader governance efforts to mitigate harms to youth at tech companies.

Project Daisy Product Development and Testing

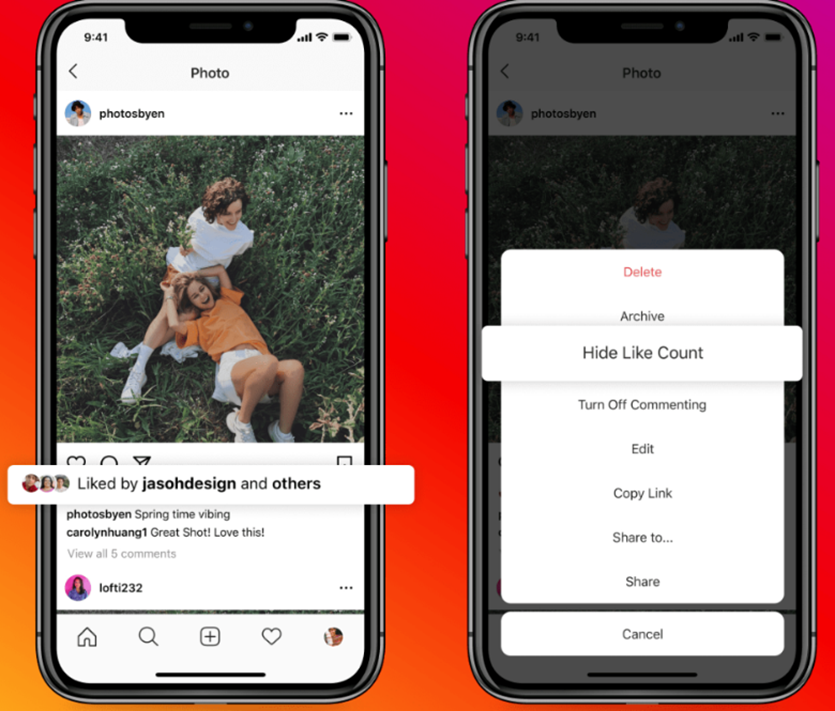

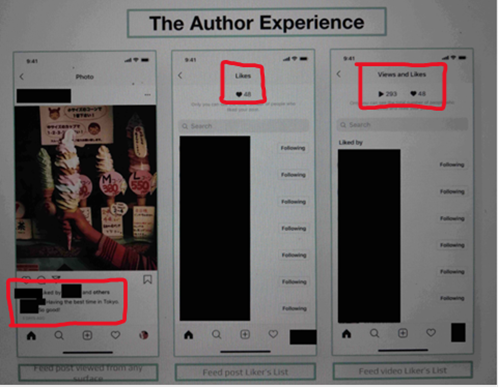

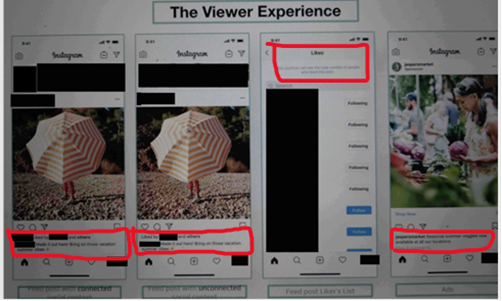

“Project Daisy” was announced by Facebook and Instagram in November 2019 as a feature to reduce public visibility of “like counts” on user posts.[xxii] It aimed to address concerns of teens feeling stressed or anxious over likes received on their posts, depressurize sharing and reduce negative social comparison.[xxiii] Internal Meta documents also reveal intent for the project to signal the company “cares about wellbeing” and improve “reputation and positioning”. The prototype features, presented in Figures 1 and 2, included hiding like counts from viewers while still allowing post creators to view their own likes.

Project Daisy underwent user testing for Instagram and Facebook, with more extensive testing on Instagram. Testing on Facebook was limited to the Australian market. Full results for these tests can be found in Appendix D.

Table 1 Impact of Project Daisy Prototype on Revenue Metrics for Instagram (key findings)

Note: Cells highlighted red are negative and statistically significant impacts. Cells highlighted grey are not statistically significant. The size of confidence intervals is not specified but assumed to be 95% two-tailed.

| Affected metric | % change | Confidence interval (CI) |
| --- | --- | --- |
| Instagram event-based revenue | -1.0% | +/- 0.9% |
| Ad impressions, feed (for 12/11 to 12/25) | -0.060% | +/- 0.189% |
| Ad clicks (for 12/11 to 12/25) | -0.923% | +/- 0.295% |
| Ads click-through-rate (CTR), feed | -0.612% | +/- 0.317% |

Figure 1 – Project Daisy Prototype for “The Author Experience”

Figure 2 – Project Daisy Prototype for “The Viewer Experience”

Results reveal that for Instagram:

- Revenues and ads-related metrics decreased significantly, with average revenue loss of 1%

- Engagement metrics decreased significantly for both teens and the overall user base

- Project Daisy reduced the importance of likes for users

- Users who tested the product observed “positive impacts on themselves, and on others” from reducing visibility of likes

| Feedback from Instagram user survey:

“It’s pretty simple, it’s not a big change. I don’t think a lot of people will notice it. Besides those big celebrities with those big numbers” Survey participant #1 (redacted)

“It basically means that my post flop thing won’t happen anymore because I’ll be the only one that will notice if it flopped. Everybody else will just see that I posted, which is pretty lit.” Survey participant #2 (redacted) |

However, Project Daisy also made it more challenging for users to identify popular posts on Instagram, which was viewed as a negative, as likes served as a signal of post quality.

| Feedback from Instagram user survey:

“Right so for like the meme’s up here… I would just scroll through it. I wouldn’t read it but let’s say it had a lot of likes, like a hundred thousand, then maybe I might actually read through it. So it will affect how long I would stay on a certain post.” Survey participant #3 (redacted)

“See for something like this, I don’t really care about the like count because it’s just a friend of mine. A skateboarding video. This is a brand new video that came out and see this is where I would like to see the like count because I’d want to see how big this would get just to see how interesting it really is.” Survey participant #4 (redacted) |

Table 2 Impact of Project Daisy Prototype on Revenue Metrics for Facebook (key findings)

Note: Cells highlighted red are negative and statistically significant impacts. Cells highlighted grey are not statistically significant. The size of confidence intervals is not specified but assumed to be 95% two-tailed.

| Affected metric | % change | Confidence interval (CI) |
| --- | --- | --- |
| Instagram event-based revenue | -0.23% | +/- 2.29% |
| Ad impressions, feed | -0.29% | +/- 0.27% |
| Ad clicks | -0.43% | +/- 0.31% |
| Ads click-through-rate (CTR), feed | -0.20% | +/- 0.27% |

Results for Facebook were comparable to Instagram for teens, but differed for older users:

- Revenues were not significantly affected, but other commercial metrics were, including ad impressions, clicks, user sessions, newsfeed views, and posts

- Internal notes at Meta assess the impact on revenue and engagement metrics to be “directionally aligned between Facebook and Instagram”

- Teens observed “positive impacts on themselves, and on others” from reducing visibility of likes, and reduced concerns about being judged

- For older users, Project Daisy “did not reduce concern about receiving likes and reactions”, and “slightly reduced concerns about others seeing how many likes / reactions [the user] received”, although overall concern about this was very low

- As with Instagram, Project Daisy also made it more challenging for Facebook users to identify popular posts on the platform.
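The significance readings summarized above can be checked directly from the figures in Tables 1 and 2. The sketch below is illustrative only: it assumes, as the table notes do, that each "+/-" value is the half-width of a symmetric 95% two-tailed confidence interval, and it treats a change as statistically significant when that interval excludes zero. The `Metric` class and `METRICS` list are hypothetical names introduced for this example and are not taken from the Meta documents.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    platform: str
    name: str
    change_pct: float      # point estimate of the % change
    half_width_pct: float  # the "+/-" value from the table (assumed 95% CI half-width)

    @property
    def interval(self) -> tuple[float, float]:
        """Lower and upper bounds of the assumed confidence interval."""
        return (self.change_pct - self.half_width_pct,
                self.change_pct + self.half_width_pct)

    @property
    def significant(self) -> bool:
        """True when the interval lies entirely above or below zero."""
        low, high = self.interval
        return low > 0 or high < 0


# Values copied from Table 1 (Instagram) and Table 2 (Facebook).
METRICS = [
    Metric("Instagram", "Event-based revenue", -1.0, 0.9),
    Metric("Instagram", "Ad impressions, feed", -0.060, 0.189),
    Metric("Instagram", "Ad clicks", -0.923, 0.295),
    Metric("Instagram", "Ads CTR, feed", -0.612, 0.317),
    # The first row of Table 2 is labelled "Instagram event-based revenue" in the source.
    Metric("Facebook", "Event-based revenue", -0.23, 2.29),
    Metric("Facebook", "Ad impressions, feed", -0.29, 0.27),
    Metric("Facebook", "Ad clicks", -0.43, 0.31),
    Metric("Facebook", "Ads CTR, feed", -0.20, 0.27),
]

if __name__ == "__main__":
    for m in METRICS:
        low, high = m.interval
        label = "significant" if m.significant else "not significant"
        print(f"{m.platform:<10} {m.name:<22} [{low:+.3f}%, {high:+.3f}%] {label}")
```

Under these assumptions, the check agrees with the summary in the text: the Instagram revenue change is significant while the revenue change in Table 2 is not, and ad impressions and ad clicks on Facebook are significant. If the intervals were computed at a different confidence level, the borderline cases could change.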

Project Daisy – Internal Recommendations and Outcomes

The internal research team at Meta tentatively recommended launching Project Daisy on Instagram, with the caveat that the research findings didn’t strongly support this decision, leaving “the case for shipping” as “a judgment call”.[xxiv] In favor of shipping, they cited “overwhelmingly positive responses from policy makers, press, and academics”, and believed it could help define Instagram’s values moving forward.

However, they recommended not launching Project Daisy on Facebook, citing that it was “not a top concern for people” and that Daisy did not “meaningfully move the concern”.

The next steps were to ship Daisy on Instagram from March 3rd, 2020, and to discontinue the Facebook project. There was a go-to-market strategy to “ensure credit ladders up to the Facebook company”, minimize negative reactions, and provide evidence-backed explanations for the decision.

However, the Instagram product launch did not go ahead in March 2020 as recommended. Another follow-up internal Meta document shows that two options were considered: a tentative recommendation to launch Daisy with further research and feature adjustments, or to block the launch based on failure to meet commercial metrics including revenue targets. The team also considered product mitigations for issues related to ad performance, content evaluation, and concerns from creators.

Project Daisy was eventually launched on Instagram in May 2021, 14 months after the initially proposed launch date.[xxv] In the launch version, presented in Figure 3, the feature to hide like counts on others’ posts was opt-in, with Instagram citing mixed user feedback.

| “We heard from people and experts that not seeing like counts was beneficial for some, and annoying to others, particularly because people use like counts to get a sense for what’s popular, so we’re giving you a choice.” Instagram Blog, May 2021 |

Figure 3 User interface for Project Daisy on Instagram at launch

Project Daisy – Commentary

The opt-in nature of the launched version of Project Daisy reflects an important point: given that social media ostensibly brings with it benefits as well as harms, interventions that reduce access or product efficacy correspondingly have the potential to do harm as well as good. Tech companies have flexibility in designing interventions, particularly those related to product features, to mitigate potential harms without removing existing benefits. As The Verge noted, Project Daisy highlighted “a lesson that social networks are often too reluctant to learn: rigid, one-size-fits-all platform policies are making people miserable.”[xxvi]

However, the case also highlighted potential issues in the product development process, particularly in how evidence was treated. The opt-in nature of the launched product means it is switched off by default, reducing its impact. This decision discounts evidence of the positive impacts of Project Daisy observed by teens on themselves and those around them, some of whom would not have opted into it.[xxvii] Furthermore, the decision to discontinue the Facebook project should be questioned, given that evidence from user testing was comparable across both platforms for teens, if not for older users. As teens constitute only c. 5% of the Facebook user base, a worrying implication is that concerns for a vulnerable minority group, teens, were not considered as seriously as concerns for the majority.

Moreover, while the research behind this initiative is extensive, the Project Daisy proposal in all its forms is a relatively small intervention in the context of the array of product updates and content moderation decisions at Facebook’s disposal, highlighted by the 70 distinct recommendations we identify in the SEC disclosure documents (presented in Appendix C). Project Daisy appears to have been strategically chosen to preserve commercial goals while being seen to tackle integrity issues. Public announcements and strategic commentary indicate that it was meant to signal a directional change for Facebook, showing their commitment to addressing platform harms. However, as The Verge noted, it seemed “like a remarkable anticlimax” and missed potential for a more “fundamental transformation.”[xxviii]

Recent coverage by CNN suggests the decisions made during Project Daisy are part of a broader pattern of insufficient investment in integrity initiatives, and of the most senior internal stakeholders blocking those initiatives where they impeded commercial objectives.[xxix] For example, another 2019 product proposal, to disable beauty filters, was supported by senior executives including Instagram’s CEO Adam Mosseri, Instagram’s Policy Chief, Karina Newton, the Head of Facebook, Fidji Simo, and Meta’s Vice President of Product Design, Margaret Gould Stewart. Mark Zuckerberg rejected the plan despite this internal support and recommendations from academics and outside advisors.

Furthermore, requests for “additional investment” to address addiction, self-harm, and bullying on the platform in August 2021 were rebuffed, again by Zuckerberg, on the grounds that staffing was “too constrained” to meet the request.[xxx] A former Facebook employee notes that “Nothing like Project Daisy with its implications for ad revenue would pass without approval from the [senior team], namely Mark.”[xxxi]

The breadth of recommendations we find in the disclosure documents demonstrates an appetite to mitigate product harms amongst internal stakeholders, and the recent coverage also suggests support from certain senior stakeholders. Nevertheless, decisions appeared to be finalized at the very top, and those decisions protected commercial interests.

The Path Forward

“The Facebook Papers” and the 2021 SEC disclosures shed light on the concerns surrounding online safety, especially for children and teens. The potential harms cited in these documents became a focal point of coverage, even though, as stated by the Surgeon General and several academics, the true impact of online platforms on youth mental health remained under-researched. Furthermore, clear solutions to these potential harms have yet to emerge in ongoing discussions.

A closer inspection of these studies can fill in some of the evidence gaps highlighted by the Surgeon General’s Advisory. While media outlets have covered headline statistics, an in-depth look is crucial, particularly to understand the mechanisms through which social media can cause harm to teens. One ongoing piece of research in this area is from a team at Oxford University that is reviewing the papers to rigorously assess these studies for associations between Meta’s platforms and impacts on teen well-being.

Furthermore, the studies can shed light on how tech companies can deliver the solutions to address these harms, as evidenced by the 70 distinct recommendations identified. However, product development and integrity initiatives may be hindered by internal governance. Commercial motivations, like those seen in Project Daisy, may obstruct substantive changes, especially if such changes threaten revenue or demand high costs without clear returns. The SEC’s inaction post-disclosure underlines this, as tech companies prioritize advertiser interests over user safety.[xxxii]

Recent coverage by the Wall Street Journal suggests that failure to handle integrity issues is shortsighted even on a commercial basis.[xxxiii] Their investigation into Instagram Reels demonstrated that its algorithm not only recommended inappropriate sexual content to teens, but also placed ads from major brands adjacent to these videos. By not addressing this integrity issue, Instagram not only failed to address serious ethical and safety concerns but also jeopardized future ad revenue streams.[xxxiv]

Effective resolutions will likely require collaboration between tech giants and external regulators. Without the tech companies, external regulators will not have the requisite technical expertise or access to internal data and testing to identify harms and mandate appropriate solutions without the risk of unintended consequences. It is therefore essential for tech companies to provide access to internal data to facilitate informed oversight. For instance, the Twitter Developer Platform, which provided API access to internal Twitter data for academics, was a ‘best-in-class’ example of data sharing before access was paywalled.[xxxv]

Furthermore, data made public through the Facebook Papers, including the recommendations we have highlighted, could provide the impetus for renewed internal integrity initiatives, similar action taken by competitors, or informed regulations. There is potential for tech companies to develop these practices as a voluntary industry standard, given increased public pressure to act on harms, alongside lawsuits,[xxxvi] but short-term commercial incentives are powerful.

External regulation may therefore be required for meaningful action. This regulation could involve creating an external ‘regulatory watchdog’ with whom tech companies share their data. Representatives in this watchdog should encompass a diverse range of professionals, from regulators to academics and impacted communities, ensuring a comprehensive review and representation. Effective use of evidence from the Facebook Papers could provide a blueprint for a joint, inclusive approach between the tech industry and regulatory agencies in navigating and mitigating online platform risks.

Discussion questions

- To what extent are harms / risks related to social media and youth mental health a priority issue?

- To what extent is the evidence presented in the SEC disclosure documents in the public domain?

- To what extent does the evidence presented in the SEC disclosure documents help fill the stated evidence gaps?

- In the case of Project Daisy, where were internal governance and product development processes effective / ineffective?

- How can the evidence contained in the SEC disclosure documents on risks and harms arising from social media be best used to empower stakeholders? What are the benefits, barriers and risks to suggested approaches?

  - Increased public awareness of harms results in social media users advocating for change, and/or reducing use of the platforms

  - Increased public awareness of harms results in social media users changing their behavior when using the platforms

  - Justification for regulatory changes / enforcement

  - Impetus for legislative changes

- How can the information contained in the SEC disclosure documents on recommendations (product features, moderation and/or outreach) be best used to empower stakeholders? What are the benefits, barriers and risks to suggested approaches?

  - Allows regulators to make more precise recommendations and/or regulations

  - Internal impetus within tech companies for increased focus and activity related to governance and integrity

- What are potential unintended consequences of legislation, regulations, company policy changes and/or product changes?

  - Reducing access to services which deliver user and/or community benefits

  - Non-consideration or marginalization of vulnerable groups

- What is the full set of levers available to the following groups to address harms / risks related to social media and youth mental health?

  - Social media technology companies

  - Regulatory agencies (FCC, FTC, SEC, HHS)

  - Legislative bodies (Congress and state)

  - NGOs and advocacy groups

Endnotes

[i] ‘The Facebook Papers’, The Facebook Papers, accessed 11 October 2023, https://facebookpapers.com/.

[ii] ‘Whistleblower’s SEC Complaint: Facebook Knew Platform Was Used to “Promote Human Trafficking and Domestic Servitude” – CBS News’, accessed 11 October 2023, https://www.cbsnews.com/news/facebook-whistleblower-sec-complaint-60-minutes-2021-10-04/.

[iii] Office of the Assistant Secretary for Health (OASH), ‘Surgeon General Issues New Advisory About Effects Social Media Use Has on Youth Mental Health’, Text, HHS.gov, 23 May 2023, https://www.hhs.gov/about/news/2023/05/23/surgeon-general-issues-new-advisory-about-effects-social-media-use-has-youth-mental-health.html.

[iv] ‘fbarchive.Org’, accessed 11 October 2023, https://fbarchive.org/.

[v] “Teen Mental Health SEC Disclosure Set 2, Part 1”, FBarchive Document odoc9178301w32 (Green Edition). Frances Haugen [producer]. Cambridge, MA: FBarchive [distributor], 2022. Web. 15 May 2023. URL: fbarchive.org/user/doc/odoc9178301w32

[vi] Ibid

[vii] Ibid

[viii] Ibid

[ix] Fung, Brian. ‘Mark Zuckerberg Personally Rejected Meta’s Proposals to Improve Teen Mental Health, Court Documents Allege | CNN Business’. CNN, 8 Nov. 2023, https://www.cnn.com/2023/11/08/tech/meta-facebook-instagram-teen-safety/index.html.

[x] ‘The Facebook Papers’

[xi] ‘Whistleblower’s SEC Complaint’

[xii] “Facebook Misled Investors and the Public about the Negative Impact of Instagram and Facebook on Teenagers’ Mental and Physical Health” (SEC Office of the Whistleblower), accessed 11 October 2023, https://facebookpapers.com/wp-content/uploads/2021/11/Teen-mental-health_Redacted.pdf.

[xiii] Georgia Wells, Jeff Horwitz, and Deepa Seetharaman, ‘Facebook Knows Instagram Is Toxic for Teen Girls, Company Documents Show’, Wall Street Journal, 14 September 2021, https://www.wsj.com/articles/facebook-knows-instagram-is-toxic-for-teen-girls-company-documents-show-11631620739.

[xiv] ‘Study Finds No Link Between Time Teens’ Spend On Tech Devices And Mental Health Problems’, accessed 11 October 2023, https://www.forbes.com/sites/roberthart/2021/05/04/study-finds-no-link-between-time-teens-spend-on-tech-devices-and-mental-health-problems/?sh=1f3b1d6a2cc9.

[xv] ‘Panicking About Your Kids’ Phones? New Research Says Don’t – The New York Times’, accessed 11 October 2023, https://www.nytimes.com/2020/01/17/technology/kids-smartphones-depression.html.

[xvi] Ibid

[xvii] Ibid

[xviii] ‘Surgeon General Issues New Advisory’

[xix] ‘Study Finds No Link’

[xx] ‘fbarchive.Org’, accessed 11 October 2023, https://fbarchive.org/.

[xxi] “Teen Mental Health SEC Disclosure Set 2, Part 1”

[xxii] ‘Instagram Will Test Hiding “Likes” in the US Starting Next Week | WIRED’, accessed 11 October 2023, https://www.wired.com/story/instagram-hiding-likes-adam-mosseri-tracee-ellis-ross-wired25/.

[xxiii] “Teen Mental Health SEC Disclosure Set 2, Part 1”

[xxiv] Ibid

[xxv] ‘Giving People More Control on Instagram and Facebook’, accessed 11 October 2023, https://about.instagram.com/blog/announcements/giving-people-more-control.

[xxvi] ‘What Instagram Really Learned from Hiding like Counts – The Verge’, accessed 11 October 2023, https://www.theverge.com/2021/5/27/22456206/instagram-hiding-likes-experiment-results-platformer.

[xxvii] “Teen Mental Health SEC Disclosure Set 2, Part 1”

[xxviii] ‘What Instagram Really Learned’

[xxix] ‘Mark Zuckerberg Personally Rejected’

[xxxi] Based on feedback from former Trust & Safety Analyst at Facebook, 2018-19

[xxxii] ‘When Even the SEC Can’t Police Bad Behavior: The Facebook Whistleblower’, Chicago Policy Review (blog), 16 November 2021, https://chicagopolicyreview.org/2021/11/16/when-even-the-sec-cant-police-bad-behavior-the-facebook-whistleblower/.

[xxxiii] Horwitz, Jeff, and Katherine Blunt. ‘WSJ News Exclusive | Instagram’s Algorithm Delivers Toxic Video Mix to Adults Who Follow Children’. WSJ, 27 Nov. 2023, https://www.wsj.com/tech/meta-instagram-video-algorithm-children-adult-sexual-content-72874155.

[xxxiv] ‘Instagram’s Algorithm Delivers Toxic’

[xxxv] Calma, Justine. ‘Scientists Say They Can’t Rely on Twitter Anymore’. The Verge, 31 May 2023, https://www.theverge.com/2023/5/31/23739084/twitter-elon-musk-api-policy-chilling-academic-research.

[xxxvi] ‘States Sue Meta Claiming Its Social Platforms Are Addictive and Harm Children’s Mental Health’. AP News, 24 Oct. 2023, https://apnews.com/article/instagram-facebook-children-teens-harms-lawsuit-attorney-general-1805492a38f7cee111cbb865cc786c28.