Conference held February 17–18, 2017

Organized by Matthew Baum, David Lazer, and Nicco Mele

Sponsored by

Final report written by

David Lazer †‡, Matthew Baum ‡, Nir Grinberg †‡, Lisa Friedland †‡, Kenneth Joseph †‡, Will Hobbs †‡, and Carolina Mattsson †

Drawn from presentations by

Yochai Benkler (Harvard), Adam Berinsky (MIT), Helen Boaden (BBC), Katherine Brown (Council on Foreign Relations), Kelly Greenhill (Tufts and Harvard), David Lazer (Northeastern), Filippo Menczer (Indiana), Miriam Metzger (UC Santa Barbara), Brendan Nyhan (Dartmouth), Eli Pariser (UpWorthy), Gordon Pennycook (Yale), Lori Robertson (FactCheck.org), David Rothschild (Microsoft Research), Michael Schudson (Columbia), Adam Sharp (formerly Twitter), Steven Sloman (Brown), Cass Sunstein (Harvard), Emily Thorson (Boston College), and Duncan Watts (Microsoft Research).

† Northeastern University ‡ Harvard University

Executive Summary

Recent shifts in the media ecosystem raise new concerns about the vulnerability of democratic societies to fake news and the public’s limited ability to contain it. Fake news as a form of misinformation benefits from the fast pace at which information travels in today’s media ecosystem, in particular across social media platforms. An abundance of information sources online leads individuals to rely heavily on heuristics and social cues in order to determine the credibility of information and to shape their beliefs, which are in turn extremely difficult to correct or change. The relatively small, but constantly changing, number of sources that produce misinformation on social media offers both a challenge for real-time detection algorithms and a promise for more targeted socio-technical interventions.

There are some possible pathways for reducing fake news, including:

(1) offering feedback to users that particular news may be fake (which seems to depress overall sharing from those individuals); (2) providing ideologically compatible sources that confirm that particular news is fake; (3) detecting information that is being promoted by bots and “cyborg” accounts and tuning algorithms to not respond to those manipulations; and (4) because a few sources may be the origin of most fake news, identifying those sources and reducing promotion (by the platforms) of information from those sources.

As a research community, we identified three courses of action that can be taken in the immediate future: involving more conservatives in the discussion of misinformation in politics, collaborating more closely with journalists in order to make the truth “louder,” and developing multidisciplinary community-wide shared resources for conducting academic research on the presence and dissemination of misinformation on social media platforms.

Moving forward, we must expand the study of social and cognitive interventions that minimize the effects of misinformation on individuals and communities, as well as of how socio-technical systems such as Google, YouTube, Facebook, and Twitter currently facilitate the spread of misinformation and what internal policies might reduce those effects. More broadly, we must investigate what the necessary ingredients are for information systems that encourage a culture of truth.

This report is organized as follows. Section 1 describes the state of misinformation in the current media ecosystem. Section 2 reviews research about the psychology of fake news and its spread in social systems as covered during the conference. Section 3 synthesizes the responses and discussions held during the conference into three courses of action that the academic community could take in the immediate future. Last, Section 4 describes areas of research that will improve our ability to tackle misinformation in the future. The conference schedule appears in an appendix.

Section 1 | The State of Misinformation

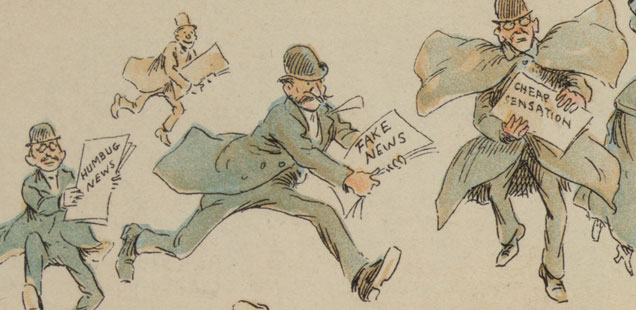

The spread of false information became a topic of wide public concern during the 2016 U.S. election season. Propaganda, misinformation, and disinformation have been used throughout history to influence public opinion.[1] Consider a Harper’s magazine piece in 1925 (titled “Fake news and the public”) decrying the rise of fake news:

Once the news faker obtains access to the press wires all the honest editors alive will not be able to repair the mischief he can do. An editor receiving a news item over the wire has no opportunity to test its authenticity as he would in the case of a local report. The offices of the members of The Associated Press in this country are connected with one another, and its centers of news gathering and distribution by a system of telegraph wires that in a single circuit would extend five times around the globe. This constitutes a very sensitive organism. Put your finger on it in New York, and it vibrates in San Francisco.

Substitute in Facebook and Google for The Associated Press, and these sentences could have been written today. The tectonic shifts of recent decades in the media ecosystem—most notably the rapid proliferation of online news and political opinion outlets, and especially social media—raise concerns anew about the vulnerability of democratic societies to fake news and other forms of misinformation. The shift of news consumption to online and social media platforms[2] has disrupted traditional business models of journalism, causing many news outlets to shrink or close, while others struggle to adapt to new market realities. Longstanding media institutions have been weakened. Meanwhile, new channels of distribution have been developing faster than our abilities to understand or stabilize them.

A growing body of research provides evidence that fake news was prevalent in the political discourse leading up to the 2016 U.S. election. Initial reports suggest that some of the most widely shared stories on social media were fake (Silverman, 2016), and other findings show that the total volume of news shared by Americans from non-credible and dubious sources is comparable to the volume coming from individual mainstream sources such as The New York Times (Lazer, n.d.). A limitation of the latter research is that it focused on the dissemination, not the consumption, of information.

Current social media systems provide a fertile ground for the spread of misinformation that is particularly dangerous for political debate in a democratic society. Social media platforms provide a megaphone to anyone who can attract followers. This new power structure enables small numbers of individuals, armed with technical, social or political know-how, to distribute large volumes of disinformation, or “fake news.” Misinformation on social media is particularly potent and dangerous for two reasons: an abundance of sources and the creation of echo chambers. Assessing the credibility of information on social media is increasingly challenging due to the proliferation of information sources, aggravated by the unreliable social cues that accompany this information. The tendency of people to follow like-minded people leads to the creation of echo chambers and filter bubbles, which exacerbate polarization. With no conflicting information to counter the falsehoods or the general consensus within isolated social groups, the end result is a lack of shared reality, which may be divisive and dangerous to society (Benkler et al., 2017). Among other perils, such situations can enable discriminatory and inflammatory ideas to enter public discourse and be treated as fact. Once embedded, such ideas can in turn be used to create scapegoats, to normalize prejudices, to harden us-versus-them mentalities and even, in extreme cases, to catalyze and justify violence (Greenhill, forthcoming; Greenhill and Oppenheim, forthcoming).

A parallel, perhaps even larger, concern regarding the role of social media, particularly Facebook, is their broad reach beyond partisan ideologues to the far larger segment of the public that is less politically attentive and engaged, and hence less well-equipped to resist messages that conflict with their partisan predispositions (Zaller 1992), and more susceptible to persuasion from ideologically slanted news (Benedictis-Kessner, Baum, Berinsky, and Yamamoto 2017). This raises the possibility that the largest effects may emerge not among strong partisans, but among Independents and less-politically-motivated Americans.

Misinformation amplified by new technological means poses a threat to open societies worldwide. Information campaigns from Russia are overtly aiming to influence elections and destabilize liberal democracies, while those from the far right of the political spectrum are seeking greater control of ours. Yet if today’s technologies present new challenges, the general phenomenon of fake news is not new at all, nor are naked appeals to public fears and attempts to use information operations to influence political outcomes (Greenhill, forthcoming). Scholars have long studied the spread of misinformation and strategies for combating it, as we describe next.

Section 2 | Foundations of Fake News: What We Know

The Psychology of Fake News

Most of us do not witness news events first hand, nor do we have direct exposure to the workings of politics. Instead, we rely on accounts of others; much of what we claim to know is actually distributed knowledge that has been acquired, stored, and transmitted by others. Likewise, much of our decision-making stems not from individual rationality but from shared group-level narratives (Sloman & Fernbach, 2017). As a result, our receptivity to information and misinformation depends less than we might expect on rational evaluation and more on the heuristics and social processes we describe below.

First, source credibility profoundly affects the social interpretation of information (Swire et al., 2017; Metzger et al., 2010; Berinsky, 2017; Baum and Groeling, 2009; Greenhill and Oppenheim, n.d.). Individuals trust information coming from well-known or familiar sources and from sources that align with their worldview. Second, humans are biased information-seekers: we prefer to receive information that confirms our existing views. These properties combine to make people asymmetric updaters about political issues (Sunstein et al., 2016). Individuals tend to accept new information uncritically when a source is perceived as credible or the information confirms prior views. And when the information is unfamiliar or comes from an opposition source, it may be ignored.

As a result, correcting misinformation does not necessarily change people’s beliefs (Nyhan and Reifler, 2010; Flynn et al., 2016). In fact, presenting people with challenging information can even backfire, further entrenching people in their initial beliefs. Moreover, even when an individual believes the correction, the misinformation may persist. An important implication of this point is that any repetition of misinformation, even in the context of refuting it, can be harmful (Thorson, 2015; Greenhill and Oppenheim, forthcoming). This persistence is due to familiarity and fluency biases in our cognitive processing: the more an individual hears a story, the more familiar it becomes, and the more likely the individual is to believe it (Hasher et al., 1977; Schwartz et al., 2007; Pennycook et al., n.d.). As a result, exposure to misinformation can have long-term effects, while corrections may be short-lived.

One factor that does affect the acceptance of information is social pressure. Much of people’s behavior stems from social signaling and reputation preservation. Therefore, there is a real threat of embarrassment for sharing news that one’s peers perceive as fake. This threat provides an opening for fact-checking tools on social media, such as a pop-up warning under development by Facebook. This tool does seem to decrease sharing of disputed articles, but it is unlikely to have a lasting effect on beliefs (Schwartz et al., 2007; Pennycook et al., n.d.). While such tools provide a mechanism to signal to an individual’s existing peers that the individual is sharing fake news, another opportunity for intervention is to shift peer consumption online. Encouraging communication with people who are dissimilar might be an effective way to reduce polarization and fact distortion around political issues.

How Fake News Spreads

Fake news spreads from sources to consumers through a complex ecosystem of websites, social media, and bots. Features that make social media engaging, including the ease of sharing and rewiring social connections, facilitate their manipulation by highly active and partisan individuals (and bots) that become powerful sources of misinformation (Menczer, 2016).

The polarized and segregated structure observed in social media (Conover et al., 2011a) is inevitable given two basic mechanisms of online sharing: social influence and unfriending (Sasahara et al., in preparation). The resulting echo chambers are highly homogeneous (Conover et al., 2011b), creating ideal conditions for selective exposure and confirmation bias. They are also extremely dense and clustered (Conover et al., 2012), so that messages can spread very efficiently and each user is exposed to the same message from many sources. Hoaxes have a higher chance of going viral in these segregated communities (Tambuscio et al., in preparation).

Even if individuals prefer to share high-quality information, limited individual attention and information overload prevent social networks from discriminating between messages on the basis of quality at the system level, allowing low-quality information to spread as virally as high-quality information (Qiu et al., 2017). This helps explain higher exposure to fake news online.

It is possible to leverage structural, temporal, content, and user features to detect social bots (Varol et al., 2017). Such detection reveals that social bots can become quite influential (Ferrara et al., 2016). Bots are designed to amplify the reach of fake news (Shao et al., 2016) and exploit the vulnerabilities that stem from our cognitive and social biases. For example, they create the appearance of popular grassroots campaigns to manipulate attention, and target influential users to induce them to reshare misinformation (Ratkiewicz et al., 2011).

On Twitter, fake news shared by real people is concentrated in a small set of websites and highly active “cyborg” users (Lazer, n.d.). These users automatically share news from a set of sources (with or without reading them). Unlike traditional elites, these individuals may wield limited socio-political capital, but they leverage their knowledge of platform affordances to grow a following around polarized and misinformative content. These individuals can, however, attempt to get the attention of political elites with the aid of social bots. For example, Donald Trump received hundreds of tweets, mostly from bots, with links to the fake news story that three million illegal immigrants voted in the election. This demonstrates how the power dynamics on social media can, in some cases, be reversed, leading misinformation to flow from lower status individuals to elites.

Contrary to popular intuition, neither fake nor real information, including news, is often “viral” in the implied sense of spreading through long information cascades (Goel et al., 2015). That is, the vast majority of shared content does not spread in long cascades among average people. It is often messages from celebrities and media sources (accounts with high numbers of followers) that increase reach the most, and they do so via very shallow diffusion chains. Thus, traditional elites may not be the largest sharers of fake news content but may be the most important nodes capable of stemming its spread (Greenhill and Oppenheim, n.d.).
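The difference between a long cascade and a shallow broadcast can be made concrete with a small sketch. The example below assumes a hypothetical representation of a reshare tree as a mapping from each account to the account it reshared from. The function names and the toy data are illustrative assumptions, not drawn from the studies cited above. It computes the depth of the tree and the fraction of reshares made directly from the original poster.

```python
def cascade_depth(parent):
    """Return the longest chain of reshares in a cascade.

    `parent` maps each account to the account it reshared from;
    the original poster maps to None. (Hypothetical toy format.)
    """
    def depth(node):
        steps = 0
        # Walk up the tree until the original poster is reached.
        while parent[node] is not None:
            node = parent[node]
            steps += 1
        return steps

    return max(depth(node) for node in parent)


def direct_share_fraction(parent):
    """Fraction of reshares made directly from the original poster."""
    roots = {n for n, p in parent.items() if p is None}
    reshares = [p for p in parent.values() if p is not None]
    if not reshares:
        return 0.0
    direct = sum(1 for p in reshares if p in roots)
    return direct / len(reshares)


# Broadcast: a high-follower account reaches four users in one hop.
broadcast = {"celebrity": None, "a": "celebrity", "b": "celebrity",
             "c": "celebrity", "d": "celebrity"}

# Chain: the same number of reshares passed person to person.
chain = {"origin": None, "a": "origin", "b": "a", "c": "b", "d": "c"}

print(cascade_depth(broadcast), direct_share_fraction(broadcast))
print(cascade_depth(chain), direct_share_fraction(chain))
```

Both toy cascades contain four reshares, but the broadcast has depth 1 while the chain has depth 4. Under the pattern described above, most real sharing would look more like the first case than the second.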

Most people who share fake news, whether it gains popularity or not, share lots of news in general. Volume of political activity is by far the strongest predictor of whether an individual will share a fake news story. The fact that misinformation is mixed with other content and that many stories get little attention from people means that traditional measures of quality cannot distinguish misinformation from truth (Metzger et al., 2010). Beyond this, certain characteristics of people are associated with greater likelihood of sharing fake news: older and more extreme individuals on the political spectrum appear to share fake news more than others (Lazer et al., n.d.).

Nation-states and politically-motivated organizations have long been the initial brokers of misinformation. Both contemporary and historical evidence suggests that the spread of impactful misinformation is rarely due to simple misunderstandings. Rather, misinformation is often the result of orchestrated and strategic campaigns that serve a particular political or military goal (Greenhill, forthcoming). For instance, the British waged an effective campaign of fake news around alleged German atrocities during WWI in order to mobilize domestic and global public opinion against Germany. These efforts, however, boomeranged during WWII, because memories of that fake news led to public skepticism of reports of mass murder (Schudson, 1997).

We must also acknowledge that a focus on impartiality is relatively new to how news is reported. Historically, it was not until the early 20th century that modern journalistic norms of fact-checking and impartiality began to take shape in the United States. It was a wide backlash against “yellow journalism”—sensationalist reporting styles that were spread by the 1890s newspaper empires of Hearst and Pulitzer—that pushed journalism to begin to professionalize and institute codes of ethics (Schudson, 2001).

Finally, while any group can come to believe false information, misinformation is currently predominantly a pathology of the right, and extreme voices from the right have been continuously attacking the mainstream media (Benkler et al., 2017). As a result, some conservative voters are even suspicious of fact-checking sites (Allcott and Gentzkow, 2017). This leaves them particularly susceptible to misinformation, which is being produced and repeated, in fact, by those same extreme voices. That said, there is at least anecdotal evidence that when Republicans are in power, the left becomes increasingly susceptible to promoting and accepting fake news. A case in point is a conspiracy theory, spread principally by the left during the Bush Administration, that the government was responsible for 9/11. This suggests that we may expect to witness a rise in left-wing-promulgated fake news over the next several years. Regardless, any solutions for combating fake news must take such asymmetry into account; different parts of the political spectrum are affected in different ways and will need to assume different roles to counter it.

Section 3 | Action: Stemming Fake News

Discussions of the material above yielded several immediate paths for addressing misinformation.

Making the Discussion Bipartisan

Bringing more conservatives into the deliberation process about misinformation is an essential step in combating fake news and providing an unbiased scientific treatment of the research topic. Significant evidence suggests that fake news and misinformation impact, for the moment at least, predominantly the right side of the political spectrum (e.g., Lazer, n.d.; Benkler et al., 2017). Research suggests that error correction of fake news is most likely to be effective when coming from a co-partisan with whom one might expect to agree (Berinsky, 2017). Collaboration between conservatives and liberals to identify bases for factual agreement will therefore heighten the credibility of the endeavors, even where interpretations of facts differ. Some of the immediate steps suggested during the conference were to reach out to academics in law schools, economists who could speak to the business models of fake news, individuals who expressed opposition to the rise in distrust of the press, more center-right private institutions (e.g. Cato Institute, Koch Institute), and news outlets (e.g. Washington Times, Weekly Standard, National Review).

Making the Truth “Louder”

We need to strengthen trustworthy sources of information and find ways to support and partner with the media to increase the reach of high-quality, factual information. We propose several concrete ways to begin this process.

First, we need to translate existing research into a form that is digestible by journalists and public-facing organizations. Even findings that are well-established by social scientists, including those given above, are not yet widely known to this community. One immediate project would be to produce a short white paper summarizing our current understanding of misinformation—namely, factors by which it takes hold and best practices for preventing its spread. Research can provide guidelines for journalists on how to avoid common pitfalls in constructing stories and headlines—for instance, by leading with the facts, avoiding repeating falsehoods, seeking out audience-credible interviewees, and using visualizations to correct facts (Berinsky et al., 2017). Similarly, we know that trust in both institutions and facts is built through emotional connections and repetition. News organizations may find ways to strengthen relationships with their audiences and keep them better informed by emphasizing storytelling, repetition of facts (e.g., through follow-ups to previous stories), and impartial coverage (Boaden, 2010).

Second, we should seek stronger future collaborations between researchers and the media. One option is to support their working together in newsrooms, where researchers could both serve as in-house experts and gather data for applied research. Another route is to provide journalists with tools they need to lower the cost of data-based journalism. For example, proposals from the conference included a platform to provide journalists with crowd-sourced and curated data on emerging news stories, similar to Wikipedia. The resource would provide journalists with cheap and reliable sources of information so that well-sourced reporting can outpace the spread of misinformation on social media. Such tools could also provide pointers to data sources, background context for understanding meaningful statistics, civics information, or lists of experts to consult on a given topic.[3]

Third, the apparent concentration of circulated fake news (Lazer et al., n.d.) makes the identification of fake news and interventions by platforms relatively straightforward. While there are examples of fake news websites emerging from nowhere, in fact it may be that most fake news comes from a handful of websites. Identifying the responsibilities of the platforms and getting their proactive involvement will be essential in any major strategy to fight fake news. If platforms dampened the spread of information from just a few websites, the fake news problem might drop precipitously overnight. Further, it appears that the spread of fake news is driven substantially by external manipulation, such as bots and “cyborgs” (individuals who have given control of their accounts to apps). Steps by the platforms to detect and respond to manipulation will also naturally dampen the spread of fake news.
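As a rough illustration of what "concentration" means here, the sketch below computes the fraction of all shares that come from the k most-shared domains. The function name, the domain names, and the counts are made up for illustration and are not data from the conference or from the cited work.

```python
from collections import Counter


def top_k_share(shared_domains, k):
    """Fraction of all shares that come from the k most-shared domains."""
    counts = Counter(shared_domains)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    top = sum(n for _, n in counts.most_common(k))
    return top / total


# Hypothetical log of flagged links, one entry per share (made-up domains).
shares = (["site-a.example"] * 50 + ["site-b.example"] * 30 +
          ["site-c.example"] * 10 + ["site-d.example"] * 6 +
          ["site-e.example"] * 4)

print(top_k_share(shares, 2))  # 0.8
```

In this toy log, two of the five domains account for 80 percent of shares. A measurement of this kind on real sharing data is what would indicate whether dampening a few sources could have a large effect.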

Finally, since local journalism is both valuable and widely trusted, we should support efforts to strengthen local reporting in the face of tightening budgets. At the government level, such support could take the form of subsidies for local news outlets and help obtaining non-profit status. Universities can help by expanding their newspapers’ reporting on local communities.

As academics, we need better insight into the presence and spread of misinformation on social media platforms. In order to understand today’s technologies and prioritize the public interest over corporate and other interests, the academic community as a whole needs to be able to conduct research on these systems. Typically, however, accessing data for research is either impossible or difficult, whether due to platform constraints, constraints on sharing, or the size of the data. Consequently, it is difficult to conduct new research or replicate prior studies. At the same time, there is increasing concern that the algorithms on these platforms promoted misinformation during the election and may continue to do so in the future.

In order to investigate a range of possible solutions to the issue of misinformation on social media, academics must have better and more standardized access to data for research purposes. This pursuit must, of course, accept that there will be varying levels of cooperation from platform providers. Even with very little collaboration, academics can still join forces to create a panel of people’s actions over time, ideally from multiple sources of online activity, both mobile and non-mobile (e.g. MediaCloud, Volunteer Science, IBSEN, TurkServer). The cost of creating and maintaining such a panel can potentially be mitigated by partnering with companies that collect similar data. For example, we could seek out partnerships with companies that hold data on web panels (e.g. Nielsen, Microsoft, Google, ComScore), TV consumption (e.g. Nielsen), news consumption (e.g. Parsely, Chartbeat, The New York Times, The Wall Street Journal, The Guardian), polling (e.g. Pollfish, YouGov, Pew), voter registration records (e.g. L2, Catalist, TargetSmart), and financial consumer records (e.g. Experian, Acxiom, InfoUSA). Of course, partnerships with leading social media platforms such as Facebook and Twitter are also possible. Twitter provides APIs that make public data available, but sharing agreements are needed to collect high-volume data samples. Additionally, Facebook would require custom APIs. With more accessible data for research purposes, academics can help platforms design more useful and informative tools for social news consumption.

Concretely, academics should focus on the social, institutional and technological infrastructures necessary to develop datasets that are useful for studying the spread of misinformation online and that can be shared for research purposes and replicability. Doing so will require new ways of pressuring social media companies to share important data.

Section 4 | Research Directions: What We Need to Find Out

The science of misinformation in applied form is still at its genesis. The conference discussions produced several directions for future work.

First, we must expand the study of social and cognitive interventions that minimize the effects of misinformation. Research raises doubts about the role of fact-checking and the effectiveness of corrections, yet there are currently few alternatives to that approach. Social interventions that do reduce the spread of misinformation have limited effects. Going forward, the field aims to identify social factors that sustain a culture of truth and to design interventions that help reward well-sourced news.

Technology in general and social media in particular not only introduce new challenges for dealing with misinformation, but also offer the potential to mitigate it more effectively. Facebook’s recent initiative[4] of partnering with fact-checking organizations to deliver warnings before people share disputed articles is one example of a technological intervention that can limit the spread of false information. However, questions remain regarding the scalability of the fact-checking approach and how to minimize the impact of fake news before it has been disputed. Furthermore, more research is needed in order to explore socio-technical interventions that not only stem the flow of misinformation, but also affect the beliefs that result from misinformation. More broadly, the question is: what are the necessary ingredients for social information systems to encourage a culture that values and promotes truth?

Tackling the root causes behind common misperceptions can help inform people about the issues at hand and eliminate much of the misinformation surrounding them. Such educational efforts may be particularly beneficial for improving understandings of public policy, which is a necessary component for building trust in the institutions of a civic society. One of the ways to encourage individual thinking over group thinking is to ask individuals to explain the inner workings of certain processes or issues, for example, the national debt (Fernbach et al., 2013).

Previous research highlights two social aspects of interventions that are key to their success: the source of the intervention and the norms in the target community. The literature suggests that direct contradiction is counterproductive, as it may serve to entrench an individual in their beliefs if done in a threatening manner (Sunstein et al., 2016). This threat can be mitigated if statements come from somewhat similar sources, especially when they speak against their own self-interest. Moreover, people respond to information based on the shared narrative they observe from their community; to change opinions, it is therefore necessary to change social norms surrounding those opinions. One possibility is to “shame” the sharing of fake news—encourage the generation of factual information and discourage those who provide false content.

As an alternative to creating negative social impacts for sharing fake news within communities, forming bridges across communities may also foster the production of more neutral and factual content. Since at least some evidence suggests that people have the tendency to become similar to those they interact with, it’s essential to communicate with people across cultural divides. However, in doing so it is important to adhere to modern social psychological understandings of the conditions under which such interactions are likely to result in positive social interactions.

Finally, it is important to accept that not all individuals will be susceptible to intervention. People are asymmetrical updaters (Sunstein et al., 2016): those with extreme views may only be receptive to evidence that supports their view. Those with moderate views will more readily update their views in either direction based on new evidence. Focusing on this latter set of individuals may lead to the most fruitful forms of intervention. Alternatively, winning over partisans might undermine misinformation at its source, and thus offer a more robust long-term strategy for combating the issue.

Conclusion

The conference touched on the cognitive, social and institutional constructs of misinformation from both long-standing and more recent work in a variety of disciplines. In this document, we outlined some of the relevant work on information processing, credibility assessment, corrections and their effectiveness, and the diffusion of misinformation on social networks. We described concrete steps for making the science of fake news more inclusive for researchers across the political spectrum, detailed strategies for making the truth “louder,” and introduced an interdisciplinary initiative for advancing the study of misinformation online. Finally, we recognized areas where additional research is needed to provide a better understanding of the fake news phenomenon and ways to mitigate it.

Footnotes

[1] There is some ambiguity concerning the precise distinctions between “fake news” on the one hand, and ideologically slanted news, disinformation, misinformation, propaganda, etc. on the other. Here we define fake news as misinformation that has the trappings of traditional news media, with the presumed associated editorial processes. That said, more work is needed to develop as clear as possible a nomenclature for misinformation that, among other things, would allow scholars to more precisely define the phenomenon they are seeking to address.

[2] For example, Pew Research found that 62 percent of Americans get news on social media, with 18 percent of people doing so often: http://www.journalism.org/2016/06/15/state-of-the-news-media-2016/

[3] Numerous resources exist already, such as journalistsresource.org, engagingnewsproject.org, and datausa.io. Wikipedia co-founder Jimmy Wales, in turn, recently announced plans for a new online news publication, called WikiTribune, which would feature content written by professional journalists and edited by volunteer fact-checkers, with financial support coming from reader donations. So we would need to target definite gaps and perhaps work in conjunction with such a group.

[4] http://newsroom.fb.com/news/2016/12/news-feed-fyi-addressing-hoaxes-and-fake-news/

References

Allcott, H., & Gentzkow, M. (2017). Social Media and Fake News in the 2016 Election. National Bureau of Economic Research. Retrieved from http://www.nber.org/papers/w23089

Baum, M.A., & Groeling, T. “Shot by the messenger: Partisan cues and public opinion regarding national security and war.” Political Behavior 31.2 (2009): 157-186.

Benkler, Y., Faris, R., Roberts, H., & Zuckerman, E. (2017). Study: Breitbart-led right-wing media ecosystem altered broader media agenda. Retrieved from http://www.cjr.org/analysis/breitbart-media-trump-harvard-study.php

Berinsky, A. (2017) “Rumors and Health Care Reform: Experiments in Political Misinformation.” The British Journal of Political Science.

Boaden, H. (2010). BBC – The Editors: Impartiality is in our genes. Retrieved from http://www.bbc.co.uk/blogs/theeditors/2010/09/impartiality_is_in_our_genes.html

Conover, M., Ratkiewicz, J., Francisco, M., Gonçalves, B., Flammini, A., & Menczer, F. (2011a). Political polarization on Twitter. In Proc. 5th International AAAI Conference on Weblogs and Social Media (ICWSM).

Conover, M., Gonçalves, B., Ratkiewicz, J., Flammini, A., & Menczer, F. (2011b). Predicting the political alignment of Twitter users. In Proceedings of 3rd IEEE Conference on Social Computing (SocialCom).

Conover, M., Gonçalves, B., Flammini, A., & Menczer, F. (2012). Partisan asymmetries in online political activity. EPJ Data Science, 1:6.

de Benedictis-Kessner, J., Baum, M.A., Berinsky, A.J., & Yamamoto, T. “Persuasion in Hard Places: Accounting for Selective Exposure When Estimating the Persuasive Effects of Partisan Media.” Unpublished Manuscript, Harvard University and MIT.

Fernbach, P. M., Rogers, T., Fox, C. R., & Sloman, S. A. (2013). Political extremism is supported by an illusion of understanding. Psychological science, 24(6), 939-946.

Ferrara, E., Varol, O., Davis, C.A., Menczer, F., & Flammini, A. (2016). The rise of social bots. Comm. ACM, 59(7):96–104.

Flynn, D. J., Nyhan, B., & Reifler, J. (2016). The Nature and Origins of Misperceptions: Understanding False and Unsupported Beliefs about Politics. Advances in Pol. Psych.

Greenhill, K. M. (forthcoming). Whispers of War, Mongers of Fear: Extra-factual Sources of Threat Conception and Proliferation.

Greenhill, K. M., & Oppenheim B. (forthcoming). Rumor Has It: The Adoption of Unverified Information in Conflict Zones. International Studies Quarterly.

Greenhill, K.M., & Oppenheim, B., A Likely Story? The Role of Information Source in Rumor Adoption and Dissemination.

Hasher, L., Goldstein, D., & Toppino, T. (1977). Frequency and the conference of referential validity. J. Verbal Learning Verbal Behav. 16, 107–112.

Lazer, D., Grinberg, N., Friedland, L., Joseph, K., Hobbs, W., & Mattsson, C. (2016) Fake News on Twitter. Presentation at the Fake News Conference.

Goel, Sharad, et al. (2015) “The Structural Virality of Online Diffusion.” Management Science 62:1:180-96.

McKernon, E. (1925). Fake News and the Public: How the Press Combats Rumor, The Market Rigger, and The Propagandist. Harper’s Magazine.

Menczer, F. (2016). The spread of misinformation in social media. In Proceedings of the 25th International Conference Companion on World Wide Web (pp. 717–717). International World Wide Web Conferences Steering Committee.

Metzger, M. J., Flanagin, A. J., & Medders, R. B. (2010). Social and Heuristic Approaches to Credibility Evaluation Online. Journal of Communication, 60(3), 413-439.

Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330.

Pennycook, Cannon, & Rand “Prior exposure increases perceived accuracy of fake news,” currently under review. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2958246

Qiu, X., Oliveira, D.F.M., Sahami Shirazi, A., Flammini, A., & Menczer, F. (2017). Lack of quality discrimination in online information markets. Preprint arXiv:1701.02694.

Ratkiewicz, J., Conover, M., Meiss, M., Gonçalves, B., Flammini, A., & Menczer, F. (2011). Detecting and tracking political abuse in social media. In Proc. 5th International AAAI Conference on Weblogs and Social Media (ICWSM).

Sasahara, K., Ciampaglia, G.L., Flammini, A., & Menczer, F. (in preparation). Emergence of echo chambers in social media.

Schudson, M. (1997). Dynamics of Distortion in Collective Memory. In Schacter, D. L. et al. (Eds.), Memory Distortion: How Minds, Brains, and Societies Reconstruct the Past (pp. 346-364). Harvard University Press.

Schudson, M. (2001). The objectivity norm in American journalism. Journalism, 2(2), 149-170.

Schwarz, Norbert, Lawrence J. Sanna, Ian Skurnik, and Carolyn Yoon. 2007. Metacognitive Experiences and the Intricacies of Setting People Straight: Implications for Debiasing and Public Information Campaigns. Advances in Experimental Social Psychology 39:127–61.

Shao, C., Ciampaglia, G.L., Flammini, A., & Menczer, F. (2016). Hoaxy: A platform for tracking online misinformation. In Proc. Third Workshop on Social News On the Web (WWW SNOW).

Silverman, C. (2016). Here Are 50 Of The Biggest Fake News Hits On Facebook From 2016. Retrieved from https://www.buzzfeed.com/craigsilverman/top-fake-news-of-2016

Sloman, S., & Fernbach, P. (2017). The Knowledge Illusion: Why We Never Think Alone. Penguin.

Sunstein, C. R., Bobadilla-Suarez, S., Lazzaro, S. C., & Sharot, T. (2016). How People Update Beliefs about Climate Change: Good News and Bad News (SSRN Scholarly Paper No. ID 2821919). Rochester, NY: Social Science Research Network.

Swire, B., Berinsky, A. J., Lewandowsky, S., & Ecker, U. K. H. (2017). Processing political misinformation: comprehending the Trump phenomenon. Royal Society Open Science, 4(3), 160802.

Tambuscio, M., Ciampaglia, G.L., Oliveira, D.F.M., Ruffo, G., Flammini, A., & Menczer, F. (in preparation). Modeling the competition between the spread of hoaxes and fact checking.

Thorson, E. (2015). Identifying and Correcting Policy Misperceptions. Unpublished Paper, George Washington University. Available at http://www.americanpressinstitute.org/wp-content/uploads/2015/04/Project-2-Thorson-2015-Identifying-Political-Misperceptions-UPDATED-4-24.pdf.

Varol, O., Ferrara, E., Davis, C.A., Menczer, F., & Flammini, A. (2017). Online human-bot interactions: Detection, estimation, and characterization. In Proc. Intl. AAAI Conf. on Web and Social Media (ICWSM).

Appendix: Conference Schedule

Combating Fake News: An Agenda for Research and Action

Day One

Harvard University | Friday, February 17, 2017

8:30am – 8:45am | Welcome by Nicco Mele, Introduction by Matthew Baum (Harvard) and David Lazer (Northeastern): The science of fake news: What is to be done?

Morning Session: Foundations

How and why is fake news a problem? What individual and aggregate processes underlie its capacity to do harm?

8:45am – 10:30am | Panel 1: The Psychology of Fake News

How do people determine what information to attend to, and what to believe? How does fake news fit into this picture?

- Moderator: Maya Sen, Harvard

- Panelists: Brendan Nyhan (Dartmouth), Adam Berinsky (MIT), Emily Thorson (Boston College), Steven Sloman (Brown), Gordon Pennycook (Yale), Miriam Metzger (UC Santa Barbara)

10:45am – 12:30pm | Panel 2: How Fake News Spreads

How does information spread amongst people in the current news ecosystem? How is this driven by our social ties, by social media platforms, and by “traditional” media? What lessons can be learned from history?

- Moderator: Nicco Mele (Harvard)

- Panelists: David Lazer (Northeastern), Filippo Menczer (Indiana), Michael Schudson (Columbia), Kelly Greenhill (Tufts and Harvard Belfer Center), Yochai Benkler (Harvard), Duncan Watts (Microsoft Research)

12:30pm – 1:20pm | Keynote Speaker: Cass Sunstein, Robert Walmsley University Professor, Harvard University Law School

Afternoon Session: Implications and Interventions

1:20pm – 3:05pm | Panel 3: Responses by Public and Private Institutions

What role is there for public institutions (e.g., local, state and federal government) and private actors (e.g., social media companies, scholars, NGOs, activists) to combat fake news and its harmful effects?

- Moderator: Tarek Masoud (Harvard)

- Panelists: Helen Boaden (BBC News & BBC Radio), Katherine Brown (Council on Foreign Relations), Lori Robertson (FactCheck.org), Eli Pariser (UpWorthy), David Rothschild (Microsoft Research), Adam Sharp (former head of News, Government, and Elections, Twitter)

3:05pm – 3:15pm | Public session closing remarks

Matthew Baum and David Lazer

3:45pm – 5:45pm | Panel 4: Executive/Private Session

What don’t we know that we need to know? Todd Rogers (Harvard)

Day Two

Northeastern University | Saturday, February 18, 2017

Executive/Private Sessions

What is to be done? Recommendations for interventions, agenda for research. Moderator: Ryan Enos (Harvard)

8:30am – 10:00am | Four breakout groups considering different avenues for interventions

- Group A: Interventions by government. What regulatory interventions, if any, are appropriate by government actors? In the US context, how does the First Amendment limit interventions?

- Group B: Interventions by private sector. For companies such as Facebook, what steps are possible and desirable with respect to controlling fake news and misinformation?

- Group C: Interventions by third parties. What kinds of interventions are possible by third parties—fact checkers, extensions, apps?

- Group D: What role for the academy? Crossing disciplinary stovepipes to understand and mitigate the effects of fake news.

10:15am – 11:00am | Report-outs to plenary

11:00am – 12:30pm | Action-oriented agenda for research

12:30pm – 1:30pm | Lunch and discussion, overall summary