The views expressed in Shorenstein Center Discussion Papers are those of the author(s) and do not necessarily reflect those of Harvard Kennedy School or of Harvard University. Discussion Papers have not undergone formal review and approval. Such papers are included in this series to elicit feedback and to encourage debate on important issues and challenges in media, politics and public policy. These papers are published under the Center’s Open Access Policy. Papers may be downloaded and shared for personal use.


Abstract

In this paper, we present new empirical evidence to demonstrate the near impossibility for existing machine learning content moderation methods to keep pace with, let alone stay ahead of, hateful language online. We diagnose the technical shortcomings of the content moderation and natural language processing approach as emerging from a broader epistemological trapping wrapped in the liberal-modern idea of the ‘human,’ and provide the details of the ambiguities and complexities of annotating text as derogatory or dangerous, in a way to demonstrate the need for persistently involving communities in the process. This decolonial perspective of content moderation and the empirical details of the technical difficulties of annotating online hateful content emphasize the need for what we describe as “ethical scaling”. We propose ethical scaling as a transparent, inclusive, reflexive and replicable process of iteration for content moderation that should evolve in conjunction with global parity in resource allocation for moderation and addressing structural issues of algorithmic amplification of divisive content. We highlight the gains and challenges of ethical scaling for AI-assisted content moderation by outlining distinct learnings from our ongoing collaborative project, AI4Dignity.

Introduction

“In the southern Indian state of Kerala, the right-wing group is a numerical minority. They are frequently attacked online by members of the communist political party. Should I then categorize this speech as exclusionary extreme speech since it is against a minority group?” asked a fact-checker from India, as we gathered at a virtual team meeting to discuss proper labels to categorize different forms of contentious speech that circulate online. For AI4Dignity, a social intervention project that blends machine learning and ethnography to articulate responsible processes for online content moderation, it was still an early stage of labeling. Fact-checkers from Brazil, Germany, India and Kenya, who participated as community intermediaries in the project, were at that time busy slotting problematic passages they had gathered from social media into three different categories of extreme speech for machine learning. We had identified these types as derogatory extreme speech (demeaning but does not warrant removal of content), exclusionary extreme speech (explicit and implicit exclusion of target groups that requires stricter moderation actions such as demoting) and dangerous speech (with imminent danger of physical violence warranting immediate removal of content). We had also drawn a list of target groups, which in its final version included ethnic minorities, immigrants, religious minorities, sexual minorities, women, racialized groups, historically oppressed caste groups, indigenous groups, large ethnic groups and any other. Under derogatory extreme speech, we also had groups beyond protected characteristics, such as politicians, legacy media, the state and civil society advocates for inclusive societies, as targets.

The Indian fact-checker’s question about right-wingers as a numerical minority in an Indian state was quite easy to answer. “You don’t seek to protect right-wing communities simply because they are a minority in a specific region. You need to be aware of the dehumanizing language they propagate, and realize that their speech deserves no protection,” we suggested instantly. But questions from fact-checkers were flowing continuously, calling attention to diverse angles of the annotation problem.

“You have not listed politicians under protected groups [of target groups],” observed a fact-checker from Kenya. “Anything that targets a politician also targets their followers and the ethnic group they represent,” he noted, drawing reference to a social media post with mixed registers of English and Swahili: “Sugoi thief will never be president. Sisi wakikuyu tumekataa kabisa, Hatuezii ongozwa na mwizi [We the Kikuyu have refused totally, we cannot be led by a thief].” In this passage, the politician did not just represent a constituency in the formal structures of electoral democracy but served as a synecdoche for an entire target community. Verbal attacks in this case, they argued, would go beyond targeting an individual politician.

In contrast, the German fact-checking team was more cautious about their perceptions of danger. “We were careful with the selection of dangerous speech,” said a fact-checker from Germany. “How can we designate something as dangerous speech when we are not too sure about the sender, let alone the influence they have over the audience?” Because we had not asked fact-checkers to gather information about the source of extreme speech instances, and for data protection reasons had strictly instructed them to avoid adding any posters’ personal identifiers, the problem of inadequate information for determining the danger levels of speech loomed over the annotation exercise.

The complex semantics of extreme speech added to the problem. “They don’t ever use a sentence like, ‘This kind of people should die.’ Never,” explained a fact-checker from Brazil, referring to a hoax social media post that claimed that United States’ President Joe Biden had appointed an LGBTQI+ person to head the education department. Homophobic groups do not use direct insult, he explained:

It’s always something like, ‘This is the kind of person who will take care of our children [as the education minister]’. Although it is in the written form, I can imagine the intonation of how they are saying this. But I cannot fact-check it, it’s not fact-checkable. Because, you know, I don’t have any database to compare this kind of sentence, it is just implicit and it’s typical hate speech that we see in Brazil. Do you understand the difficulty?

As questions poured in and extreme speech passages piled up during the course of the project, and as we listened to fact-checkers’ difficult navigations around labeling problematic online content, we were struck by the complexity of the task that was staring at us. From missing parts of identity markers for online posters to the subtlety of language to the foundational premises for what constitutes the unit of analysis or the normative framework for extremeness in online speech, the challenge of labeling contentious content appeared insurmountable.

In this paper, we present new empirical evidence to demonstrate the near impossibility for existing machine learning content moderation methods to keep pace with, let alone stay ahead of, hateful language online. We focus on the severe limitations in the content moderation practices of global social media companies such as Facebook and Google as the context to emphasize the urgent need to involve community intermediaries with explicit social justice agendas for annotating extreme speech online and incorporating their participation in a fair manner in the lifecycle of artificial intelligence (AI) assisted model building. To advance this point, we present a set of findings from the AI4Dignity project that involved facilitated dialogue between independent fact-checkers, ethnographers and AI developers to gather and annotate extreme speech data.

We employ two methods to highlight the limitations of AI-assisted content moderation practices among commercial social media platforms. First, we compare the AI4Dignity extreme speech datasets with Perspective API’s toxicity scores developed by Google. Second, using manual advanced search methods, we test a small sample of the annotated dataset to examine whether the passages continue to appear on Twitter. We layer these findings with the ethnographic observations of our interactions with fact-checkers during different stages of the project, to show how even facilitated exercises for data annotation with the close engagement of fact-checkers and ethnographers with regional expertise can become not only resource intensive and demanding but also uncertain in terms of capturing the granularity of extreme speech. This uncertainty persists even though the binary classification between extreme and non-extreme speech, as well as the distinction between types of extreme speech that should be removed and those that warrant other kinds of moderation actions, such as downranking or counter speech, is agreed upon quite easily.
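The first method can be illustrated with a minimal sketch, under stated assumptions. It builds a request for Perspective API's TOXICITY attribute, reads the summary score from a response, and lists annotated passages that fall below a cut-off. The function names and the 0.5 threshold are hypothetical choices for illustration, not the procedure used in the project; sending the request (which needs an API key) is deliberately left out.

```python
# Endpoint of Google's Perspective API (Comment Analyzer).
PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text, language="en"):
    """Build the JSON body that asks Perspective for a TOXICITY score."""
    return {
        "comment": {"text": text},
        "languages": [language],
        "requestedAttributes": {"TOXICITY": {}},
    }


def toxicity_from_response(response):
    """Read the overall TOXICITY score (between 0 and 1) from a response dict."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def missed_by_threshold(scored_passages, threshold=0.5):
    """Return passages annotated as extreme speech that score below the threshold.

    scored_passages is a list of (passage, toxicity_score) pairs.
    The threshold is a hypothetical cut-off chosen only for illustration.
    """
    return [passage for passage, score in scored_passages if score < threshold]
```

Passages returned by `missed_by_threshold` would be those that community annotators labeled as extreme speech but that an automated toxicity score alone would leave unflagged at the chosen cut-off.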

We argue that such interactions, however demanding, are the precise (and the only) means to develop an iterative process of data gathering, labeling and model building that can stay sensitive to historically constituted and evolving axes of exclusion, and locate shifting, coded and indirect expressions of hate that ride on local cultural idioms and linguistic repertoire as much as global catchphrases in English. We highlight this exercise as a reflexive and ethical process through which communities with explicit social justice agendas and those most affected by hate expressions take a leading role in the process of annotation in ways that the gains of transparency and iteration in the ‘ordering of data’ and content moderation decisions are channeled back towards protecting communities. Such knowledge through iterative processes involves an appreciation not only for social media posts but also broader contextual factors including the vulnerability of target groups and the power differentials between the speaker and target.

This policy approach and the empirical evidence upon which it is built call for some conceptual rethinking. The exercise of community intermediation in AI cultures highlights the importance of pushing back against the liberal framing of “the human versus the machine” conundrum. We therefore begin this essay with a critique of the liberal conception of the “human” by asking how the moral panics around human autonomy versus machine intelligence in AI-related discussions, as well as their inverse (the ambitions to prepare machines as humans), hinge on the liberal-modern understanding of “rationality as the essence of personhood”1Mhlambi, Sabelo. “From Rationality to Relationality.” Carr Center for Human Rights Policy Harvard Kennedy School, Carr Center Discussion Paper, No. 009: 31. 2020, p 1. that obscures the troubled history of the human/subhuman/nonhuman distinction that colonial modernity instituted. We argue that the liberal-modern understanding of rationality that drives the ambitions to transfer rational personhood to the machine and the anxiety around such ambitions are conceptually unprepared to grasp the responsibility of community participation in the design and imagination of the machine. Such a view, for the problem of extreme speech discussed here, elides the responsibility of involving communities in content moderation. Critiquing the rationality-human-machine nexus and the colonial logics of the human/subhuman/nonhuman distinction that underwrite global disparities in content moderation as well as forms of extreme speech aimed at immigrants, minoritized people, religious and ethnic ‘others,’ people of color and women,2These forms of extreme speech are analytically distinct from but in reality come mixed with, amplify or differentially shape the outcomes of other kinds of extreme speech such as election lies and medical misinformation. we propose the principle of “ethical scaling.” Ethical scaling envisions a transparent, inclusive, reflexive and replicable process of iteration for content moderation that should evolve in conjunction with addressing structural issues of algorithmic amplification of divisive content. Ethical scaling builds on what studies have observed as “speech acts” that can have broad-ranging impacts not only in terms of their co-occurrence in escalations of physical violence (although causality is vastly disputed) but also in terms of preparing the discursive ground for exclusion, discrimination and hostility.3See Butler, Judith. 1997. Excitable Speech: A Politics of the Performative. New York: Routledge. Dangers of regulatory overreach and clamping down freedom of expression require sound policies and procedural guidelines but the anxiety around overreach cannot become an excuse for unfettered defence of freedom of expression or to view content moderation as something that has to wait for imminent violence. For a review of this scholarship, see Udupa, Sahana, Iginio Gagliardone, Alexandra Deem and Laura Csuka. 2020. “Field of Disinformation, Democratic Processes and Conflict Prevention”. Social Science Research Council, https://www.ssrc.org/publications/view/the-field-of-disinformation-democratic-processes-and-conflict-prevention-a-scan-of-the-literature/ Far from an uncritical embrace of free speech, we therefore hold that responsible content moderation is an indispensable aspect of platform regulation. In the next sections of the essay, we substantiate the gains and challenges of “ethical scaling” with empirical findings.

We conclude by arguing for a framework that treats the distribution and content sides of online speech holistically, highlighting how AI is insignificant in tackling the ecosystem of what is defined as “deep extreme speech.”

AI in content moderation and the colonial bearings of human/machine

As giant social media companies face the heat of the societal consequences of polarized content they facilitate on their platforms while also remaining relentless in their pursuit of monetizable data, the problem of moderating online content has reached monumental proportions. There is growing recognition that online content moderation is not merely a matter of technical capacity or corporate will but also a serious issue for governance, since regressive regimes around the world have sought to weaponize online discourse for partisan gains, to undercut domestic dissent or power up geopolitical contestations against “rival” nation states through targeted disinformation campaigns. In countries where democratic safeguards are crumbling, the extractive attention economy of digital communication has accelerated a dangerous interweaving of corporate greed and state repression, while regulatory pressure has also been mounting globally to bring greater public accountability and transparency in tech operations.

Partly to preempt regulatory action and partly in response to public criticism, social media companies are making greater pledges to contain harmful content on their platforms. In these efforts, AI has emerged as a shared imaginary of technological solutionism. In corporate content moderation, AI comes with the imagined capacity to address online hateful language across diverse contexts and political specificities. Imprecise in terms of the actual technologies it represents and opaque in terms of the technical steps that lead up to its constitution, AI has nonetheless gripped the imagination of corporate minds as a technological potentiality that can help them to confront a deluge of soul wrecking revelations of the harms their platforms have helped amplify.

AI figures in corporate practices with different degrees of emphasis across distinct content moderation systems that platform companies have raised, based on their technical architecture, business models and the size of operation. Robyn Caplan distinguishes them as the “artisanal” approach where “case-by-case governance is normally performed by between 5 and 200 workers” (platforms such as Vimeo, Medium and Discord); “community-reliant” approaches “which typically combine formal policy made at the company level with volunteer moderators” (platforms such as Wikipedia and Reddit); and “industrial-sized operations where tens of thousands of workers are employed to enforce rules made by a separate policy team” (characterized by large platforms such as Google and Facebook).4Caplan, Robyn. “Content or Context Moderation?” Data & Society. Data & Society Research Institute. November 14, 2018, p 16. https://datasociety.net/library/content-or-context-moderation/. Caplan observes that “industrial models prioritize consistency and artisanal models prioritize context.”5Caplan, 2018, p 6. Automated solutions are congruent with the objective of consistency in decisions and outcomes, although such consistency also depends on how quickly rules can be formalized.6Caplan, 2018.

In “industrial-size” moderation activities, what is glossed as AI largely refers to a combination of a relatively simple method of scanning existing databases of labeled expressions against new instances of online expression to evaluate content and detect problems—a method commonly used by social media companies7Gillespie, Tarleton. “Content Moderation, AI, and the Question of Scale.” Big Data & Society 7 (2): 2053951720943234. 2020. https://doi.org/10.1177/2053951720943234.—and a far more complex project of developing machine learning models with the ‘intelligence’ to label texts they are exposed to for the first time based on the steps they have accrued in picking up statistical signals from the training datasets. AI—in the two versions of relatively simple comparison and complex ‘intelligence’—is routinely touted as a technology for the automated content moderation actions of social media companies, including flagging, reviewing, tagging (with warnings), removing, quarantining and curating (recommending and ranking) textual and multimedia content. AI deployment is expected to address the problem of volume, reduce costs for companies and decrease human discretion and emotional labor in the removal of objectionable content.

However, as companies themselves admit, there are vast challenges in AI-assisted moderation of hateful content online. One of the key challenges is the quality, scope and inclusivity of training datasets. AI needs “millions of examples to learn from. These should include not only precise examples of what an algorithm should detect and ‘hard negatives,’ but also ‘near positives’—something that is close but should not count.”8Murphy, Hannah, and Madhumita Murgia. “Can Facebook Really Rely on Artificial Intelligence to Spot Abuse?” FT.Com, November, 2019. https://www.proquest.com/docview/2313105901/citation/D4DBCB03EAC348C7PQ/1. The need for cultural contextualization in detection systems is a widely acknowledged limitation since there is no catch-all algorithm that can work for different contexts. Lack of cultural contextualization has resulted in false positives and over-application. Hate groups have managed to escape keyword-based machine detection through clever combinations of words, misspellings,9Gröndahl, Tommi, Luca Pajola, Mika Juuti, Mauro Conti, and N. Asokan. “All You Need Is ‘Love’: Evading Hate Speech Detection.” ArXiv:1808.09115v3 [Cs.CL]. 2018. satire, changing syntax and coded language.10See Burnap, Pete & Matthew L. Williams (2015). “Cyber Hate Speech on Twitter: An Application of Machine Classification and Statistical Modeling for Policy and Decision Making”. Policy & internet, 7(2), 223-242; Fortuna, Paula, Juan Soler, and Leo Wanner. 2020. Toxic, Hateful, Offensive or Abusive? What Are We Really Classifying? An Empirical Analysis of Hate Speech Datasets. In Proceedings of the 12th Language Resources and Evaluation Conference, pages 6786–6794, Marseille, France. European Language Resources Association; Ganesh, Bharath. “The Ungovernability of Digital Hate Culture.” Journal of International Affairs 71 (2), 2018, pp 30–49; Warner, W., and J. Hirschberg. “Detecting Hate Speech on the World Wide Web.” In Proceedings of the Second Workshop on Language in Social Media, 2012, pp 19–26. Association for Computational Linguistics. https://www.aclweb.org/anthology/W12-2103. The dynamic nature of online hateful speech, where hateful expressions keep changing, adds to the complexity. As a fact-checker participating in the AI4Dignity project expressed, they are swimming against “clever ways [that abusers use] to circumvent the hate speech module.”
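The evasion problem described above can be made concrete with a small sketch of keyword-based detection. This is an illustrative toy under stated assumptions, not any platform's actual system: the function name, the blocklist and its single placeholder term are hypothetical, and the last example sentence paraphrases the implicit phrasing reported by the Brazilian fact-checker.

```python
import re

# Hypothetical database of labeled expressions (one placeholder term only).
BLOCKLIST = {"vermin"}


def flag_by_lookup(post, blocklist):
    """Flag a post if any labeled expression appears in it as a whole word."""
    tokens = set(re.findall(r"[a-z0-9']+", post.lower()))
    return any(term in tokens for term in blocklist)


examples = [
    "They are vermin",   # direct match against the database
    "They are v3rmin",   # misspelling with a digit
    "They are ver min",  # inserted space
    "This is the kind of person who will take care of our children",  # implicit
]

for post in examples:
    print(flag_by_lookup(post, BLOCKLIST), "|", post)
```

Only the first post is flagged. The misspelled and spaced variants slip past the lookup, and the implicit sentence contains no listed term at all, which is why the context supplied by community annotators matters.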

A more foundational problem cuts through the above two challenges. This concerns the definitional problem of hate speech. There is no consensus both legally and culturally around what comprises hate speech, although the United Nations has set the normative parameters while acknowledging that “the characterization for what is ‘hateful’ is controversial and disputed.”11See https://www.un.org/en/genocideprevention/documents/UN%20Strategy%20and%20Plan%20of%20Action%20on%20Hate%20Speech%2018%20June%20SYNOPSIS.pdf This increases the difficulties of deploying AI-assisted systems for content moderation in diverse national, linguistic and cultural contexts. A fact-checker from Kenya pointed out that even within a national context, there are not only regional and subregional distinctions about what is understood as hate speech but also an urban/rural divide. “In the urban centers, some types of information are seen as ‘outlaw,’ so it is not culturally accepted,” he noted, “but if you go to other places, it’s seen as something in the norm.” As regulators debate actions against online extreme speech not only in North America, where big tech is headquartered, but also in different regions of the world where they operate, platform companies are reminded that their content moderation and AI use principles that are largely shaped by the “economic and normative…[motivations]…to reflect the democratic culture and free speech expectations of…[users]”12Klonick, Kate. “The New Governors: The People, Rules, and Processes Governing Online Speech”, Harvard Law Review 131, 2017, p 1603. have to step beyond North American free speech values and negotiate the staggeringly diverse regulatory, cultural and political climates that surround online speech.13Sablosky, Jeffrey. “Dangerous Organizations: Facebook’s Content Moderation Decisions and Ethnic Visibility in Myanmar.” Media, Culture & Society 43 (6), 2021, pp 1017–42. https://doi.org/10.1177/0163443720987751.

Several initiatives have tried to address these limitations by incorporating users’ experiences and opinions.14See Online Hate Index developed by Berkeley Institute for Data Science https://www.adl.org Google’s Perspective API and Twitter’s Birdwatch have experimented with crowdsourcing models to evaluate content. Launched in 2021 as a pilot, Birdwatch allows users to label information in tweets as misleading and provide additional context. Google’s Perspective API offers “toxicity scores” to passages based on user inputs feeding the machine learning models. Such efforts have sought to leverage ‘crowd intelligence’ but the resulting machine learning models, while offering some promising results in terms of detecting evolving forms of extreme content, are prone to false positives as well as racial bias.15Sap et al., 2019. Studies have also found that crowdsourced models have the problem of differential emphasis. Whereas racist and homophobic tweets are more likely to be identified as hate speech in the North American context, gender-related comments are often brushed aside as merely offensive speech.16Davidson et al., 2017. More critically, crowdsourced models have channelized corporate accountability and the onus of detection onto an undefined entity called ‘crowd,’ seeking to co-opt the Internet’s promised openness to evade regulatory and social consequences of gross inadequacies in corporate efforts and investments in moderating problematic content.

Such challenges could be framed either as platform governance issues or the problem of technology struggling to catch up to the mutating worlds of words, thereby igniting the hope that they would be addressed as resources for content moderation expand and political pressure increases. However, some fundamental ethical and political issues that undergird the problem prompt a more incisive critical insight. Across attempts to bring more “humans” for annotation, there is not only a tendency to frame the issue as a technical problem or platform (ir)responsibility but also a more taken-for-granted assumption that bringing “humans” into the annotation process will counterbalance the dangers and inadequacies of machine detection. This approach is embedded within a broader moral panic around automation and demands to assert and safeguard “human autonomy” against the onslaught of the digital capitalist data “machine.” In such renderings, the concept of “the human” represents the locus of moral autonomy17Becker, Lawrence C., and Charlotte B. Becker. A History of Western Ethics. v. 1540. New York: Garland Publication. 1992. that needs protection from the “machine.”

Conversely, the human-machine correspondence aspired to in the development of algorithmic machines takes, as Sabelo Mhlambi has explained, “the traditional view of rationality as the essence of personhood, designating how humans and now machines, should model and approach the world.”18Mhlambi, 2020. As he points out, this aspired correspondence obscures the historical fact that the traditional view of rationality as the essence of personhood “has always been marked by contradictions, exclusions and inequality.”19Mhlambi, 2020, p 1. In their decolonial reading, William Mpofu and Melissa Steyn further complicate “the human” as a category, highlighting the risks of its uncritical application:

The principal trouble with the grand construction of the human of Euro-modernity…is that it was founded on unhappy circumstances and for tragic purposes. Man, as a performative idea, created inequalities and hierarchies usable for exclusion and oppression of the other…The attribute human…is not self-evident or assured. It can be wielded; given and taken away.20Steyn, Melissa, and William Mpofu, eds. Decolonising the Human: Reflections from Africa on Difference and Oppression. Wits University Press. 2021, p 1. https://doi.org/10.18772/22021036512.

“The human” as an attribute that is wielded rather than self-evident or assured brings to sharp relief the conceits and deceits of liberal-modern thought. The liberal weight behind the concept of the human elides its troubled lineage in European colonial modernity that racially classified human, subhuman and nonhuman,21Wynter, Sylvia. “Unsettling the Coloniality of Being/Power/Truth/Freedom: Towards the Human, After Man, Its Overrepresentation—An Argument.” CR: The New Centennial Review 3 (3), 2003, pp. 257–337. institutionalizing this distinction within the structures of the modern nation-state (that marked the boundaries of the inside/outside and minority/majority populations) and the market (that anchored the vast diversity of human activities to the logic of accumulation). As Sahana Udupa has argued, the nation-state, market and racial relations of colonial power constitute a composite structure of oppression, and the distinctive patterns of exclusion embedded in these relations have evolved and are reproduced in close conjunction.22Udupa, Sahana. “Decoloniality and Extreme Speech.” In Media Anthropology Network E-Seminar. European Association of Social Anthropologists. 2020. https://www.easaonline.org/downloads/networks/media/65p.pdf.

For online content moderation and AI, attention to colonial history raises four questions. A critical view of the category of the “human” is a reminder of the foundational premise of the human/subhuman/nonhuman distinction of coloniality that drives, validates and upholds a significant volume of hateful language online based on racialized and gendered categories and the logics of who is inside and who is outside of the nation-state and who is a minority and who is in the majority. Importantly, such oppressive structures operate not only on a global scale by defining the vast power differentials among national, ethnic or racialized groups but also within the nation-state structures where dominant groups reproduce coloniality through similar axes of difference as well as systems of hierarchy that “co-mingle with if not are invented” by the colonial encounter.23Thiranagama, Sharika, Tobias Kelly, and Carlos Forment. 2018. “Introduction: Whose Civility?” Anthropological Theory 18 (2–3), 2018, pp 153–74. https://doi.org/10.1177/1463499618780870. Moreover, extreme speech content is also driven by the market logics of coloniality, and as Jonathan Beller states, “Computational capital has not dismantled racial capitalism’s vectors of oppression, operational along the exacerbated fracture lines of social difference that include race, gender, sexuality, religion, nation, and class; it has built itself and its machines out of those capitalized and technologized social differentials.”24Original emphasis. Beller, Jonathan. “The Fourth Determination”. e-flux, 2017, Retrieved from https://www.e-flux.com/journal/85/156818/the-fourth-determination/ For instance, alongside active monetization of problematic content that deepens these divisions, biased training data in ML models has led to greater probability that African American English will be singled out as hateful, with “disproportionate negative impact on African-American social media users.”25Davidson, Thomas, Debasmita Bhattacharya and Ingmar Weber. 2019. “Racial Bias in Hate Speech and Abusive Language Detection Datasets”. Proceedings of the Third Abusive Language Workshop, pp. 25-35. Florence: Association for Computational Linguistics. See also Maarten Sap, Dallas Card, Saadia Gabriel, Yejin Choi, and Noah A. Smith. 2019. “The Risk of Racial Bias in Hate Speech Detection”. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pp. 1668–1678, Florence: Association for Computational Linguistics. For problems in the category definitions, see also Fortuna, Paula, Juan Soler and Leo Wanner. 2020. “Toxic, Hateful, Offensive or Abusive? What Are We Really Classifying? Empirical Analysis of Hate Speech Datasets”. Proceedings of the 12th Language Resources and Evaluation Conference, pp. 6786–6794. Marseille: European Language Resources Association. There is mounting evidence for how classification algorithms, training data, and the application of machine learning models are biased because of the limitations posed by the homogenous workforce of technology companies that employ disproportionately fewer women, minorities and people of color.26Noble, Safiya Umoja. Algorithms of Oppression: How Search Engines Reinforce Racism. New York: New York University Press, 2018. This is also reflected in the technical sciences: Natural Language Processing (NLP) and other computational methods have not only highlighted but are also themselves weighed down by limited and biased data and labeling.

Epistemologies of coloniality also limit the imaginations of technological remedies against hateful language. Such thinking encourages imaginations of technology that spin within the frame of the “rational human” (the product of colonial modernity) as either the basis for the machine to model upon or the moral force to resist automation. Put differently, both the problem (extreme speech) and the proposed solution (automation) are linked to Euro-modern thinking.

At the same time, proposed AI-based solutions to hateful language that take the human as an uncontested category fail to account for how the dehumanizing distinction between the human/subhuman/nonhuman categories instituted by coloniality shapes complex meanings, norms and affective efficacies around content that cannot be fully discerned by the machines. As Mhlambi sharply argues, “this is not a problem of not having enough data, it is simply that data does not interpret itself.”27Mhlambi 2020, p 5. Computational processes will never be able to fully model meaning and meaning-making.

Even more, the dehumanizing distinction of coloniality also tacitly rationalizes the uneven allocation of corporate resources for content moderation across different geographies and language communities, and the elision of the responsibility of involving affected communities as an indelible principle of annotation and moderation. Based on the most recent whistleblower accounts that came to be described as the “Facebook Papers” in Western media, The New York Times reported that, “Eighty-seven percent of the company’s global budget for time spent on classifying misinformation is earmarked for the United States, while only 13 percent is set aside for the rest of the world—even though North American users make up only 10 percent of the social network’s daily active users.”28Frenkel, Sheera and Alba, Davey. “In India, Facebook Struggles to Combat Misinformation and Hate Speech.” The New York Times, October 23, 2021. https://www.nytimes.com/2021/10/23/technology/facebook-india-misinformation.html. In the news article, the company spokesperson was quoted claiming that the “figures were incomplete and don’t include the company’s third party fact-checking partners, most of whom are outside the United States,” but the very lack of transparency around the allocation of resources and the outsourced arrangements around “third party partners” signal the severely skewed structures of content moderation that global social media corporations have instituted. Such disparities attest to what Denise Ferreira da Silva observes as the spatiality of racial formation characterized by a constitutive overlap between symbolic spatiality (racialized geographies of whiteness and privilege) and the material terrain of the world.29Ferreira da Silva, Denise. Toward a Global Idea of Race. Minneapolis: University of Minnesota Press. 2007.

To summarize, the liberal-modern epistemology as well as racial, market and nation-state relations of coloniality significantly shape (1) the content of extreme speech, (2) limitations in the imagination of technology, (3) the complexity of meaning of content and (4) disparities in content moderation. Both as a technical problem of contextualization and a political problem that conceals colonial classification and its structuring effects on content moderation, the dichotomous conception of “human vs machine” thus glosses over pertinent issues around who should be involved in the process of content moderation and how content moderation should be critically appraised in relation to the broader problem of extreme speech as a market-driven, technologically-shaped, historically inflected and politically instrumentalized phenomenon.

Ethical Scaling

Far from being recognized as a political issue rather than a merely technical one, the involvement of human annotators in corporate content moderation is framed in the language of efficiency and feasibility, and often positioned in opposition to the necessities of “scaling.” While human annotators are recognized as necessary at least until the machines pick up enough data to develop capacities to judge content, their involvement is seen as fundamentally in tension with machine-enabled moderation decisions that can happen in leaps, matching, to some degree, the hectic pace of digital engagements and data creation. Reading against this line of thinking, Tarleton Gillespie offers some important clarifications around scale and size, and why they should not be collapsed to mean the same thing. Building on Jennifer Slack’s30Slack, Jennifer. 2006. Communication as articulation. In: Shepherd G, St. John J and Striphas T (eds) Communication as . . . : Perspectives on Theory. Thousand Oaks: SAGE Publications, pp.223–231. work, he suggests that scale is “a specific kind of articulation: …different components attached, so they are bound together but can operate as one—like two parts of the arm connected by an elbow that can now ‘articulate’ their motion together in powerful but specific ways.”31Gillespie, 2020, p 2. Content moderation on social media platforms similarly involves the articulation of different teams, processes and protocols, in ways that “small” lists of guidelines are conjoined with larger explanations of mandates; AI’s algorithms learnt on a sample of data are made to work on much larger datasets; and, if we may add, small public policy teams stationed inside the company premises in Western metropoles articulate the daily navigations of policy heads in countries far and wide, as governments put different kinds of pressure on social media companies to moderate the content that flows on their platforms.
These articulations then are not only “sociotechnical scalemaking”32Seaver, Nick. “Care and Scale: Decorrelative Ethics in Algorithmic Recommendation.” Cultural Anthropology 36 (3), 2021, pp. 509–37. https://doi.org/10.14506/ca36.3.11. but also political maneuvering, adjustments and moving the ‘parts’ strategically and deliberately, so what is learnt in one context can be replicated elsewhere.

Gillespie’s argument is insightful in pointing out the doublespeak of commercial social media companies. As he elaborates:

The claim that moderation at scale requires AI is a discursive justification for putting certain specific articulations into place—like hiring more human moderators, so as to produce training data, so as to later replace those moderators with AI. In the same breath, other approaches are dispensed with, as are any deeper interrogations of the capitalist, ‘growth at all costs’ imperative that fuels these massive platforms in the first place.

We take this critique of digital capitalism alongside the sociotechnical aspects of the annotation process, and argue for a framework that recognizes scaling as a process that makes “the small…have large effects”33Gillespie, 2020, p 2. and proceduralizes this process for its replication in different contexts as also, and vitally, a political one. It is political precisely because of how and whom it involves as “human annotators,” the extent of resources and imaginations of technology that guide this process, and the deeper colonial histories that frame the logics of market, race and rationality within which it is embedded (and therefore has to be disrupted).

The AI4Dignity project is built on the recognition that scaling as an effort to create replicable processes for content moderation is intrinsically a political practice and should be seen in conjunction with regulatory attention to what scholars like Joan Donovan34Donovan, Joan. “Why Social Media Can’t Keep Moderating Content in the Shadows.” MIT Technology Review. 2020a. https://www.technologyreview.com/2020/11/06/1011769/social-media-moderation-transparency-censorship/; Donovan, Joan. “Social-Media Companies Must Flatten the Curve of Misinformation,” April 14, 2020b. https://www-nature-com.ezp-prod1.hul.harvard.edu/articles/d41586-020-01107-z. and Evgeny Morozov35Morozov, Evgeny. The Net Delusion: The Dark Side of Internet Freedom. New York: Public Affairs. 2011. have powerfully critiqued as the algorithmic amplification and political manipulation of polarized content facilitated by extractive digital capitalism. We define this combined attention to replicable moderation process as political praxis and critique of capitalist data hunger as “ethical scaling.” In ethical scaling, the replicability of processes is conceived as a means to modulate data hunger and channel back the benefits of scaling toward protecting marginalized, vulnerable and historically disadvantaged communities. In other words, ethical scaling imagines articulation among different parts and components as geared towards advancing social justice agendas with critical attention to colonial structures of subjugation and the limits of liberal thinking, and recognizing that such articulation would mean applying brakes to content flows, investing resources for moderation, and embracing an inevitably messy process of handling diverse and contradictory inputs during annotation and model building.

In the rest of the paper, based on the learnings gained from the AI4Dignity project, we will describe ethical scaling for extreme speech moderation by considering both the operational and political aspects of involving “human annotators” in the moderation process.

AI4Dignity

Building on the critical insights into liberal constructions of the “human” and corporate appeals to “crowds,” the AI4Dignity project has actively incorporated the participation of community intermediaries in annotating online extreme speech. The project has partnered with independent fact-checkers as critical community interlocutors who can bring cultural contextualization to AI-assisted extreme speech moderation in a meaningful way. Facilitating spaces of direct dialogue between ethnographers, AI developers and (relatively) independent fact-checkers who are not employees of large media corporations, political parties or social media companies is a key component of AI4Dignity. Aware of the wildly heterogeneous field of fact-checking that ranges from large commercial media houses to very small players with commercial interests as well as the political instrumentalization of the very term “fact-checks” for partisan gains,36For instance, in the UK, media reports in 2019 highlighted the controversies surrounding the Conservative party renaming their Twitter account “factcheckUK” https://www.theguardian.com/politics/2019/nov/20/twitter-accuses-tories-of-misleading-public-in-factcheck-row. In Nigeria, online digital influencers working for political parties describe themselves as “fact-checking” opponents and not fake news peddlers. https://mg.co.za/article/2019-04-18-00-nigerias-propaganda-secretaries/ the project has sought to develop relations with fact-checkers based on whether they are independent (enough) in their operations and with explicit agendas for social justice. The scaling premise here is to devise ways that can connect, support and mobilize existing communities who have gained reasonable access to meaning and context of speech because of their involvement in online speech moderation of some kind.

Without doubt, fact-checkers are already overburdened with verification-related tasks, but there is tremendous social value in involving them to flag extreme speech as a critical subsidiary to their core activities. Moreover, for fact-checkers, this collaboration also offers the means to foreground their own grievances as a target community of extreme speech. By involving fact-checkers, AI4Dignity has sought to draw upon the professional competence of a relatively independent group of experts who are confronted with extreme speech both as part of the data they sieve for disinformation and as targets of extreme speech. This way, AI4Dignity has tried to establish a process in which the “close cousin” of disinformation, namely, extreme speech and dangerous speech, are spotted during the course of fact-checkers’ daily routines, without significantly interrupting their everyday verification activities.

The first step in the implementation of AI4Dignity has involved discussions among ethnographers, NLP researchers and fact-checkers to identify different types of problematic content and finalize the definitions of labels for manually annotating social media content. After agreeing upon the definitions of the three types of problematic speech as derogatory extreme speech (forms that stretch the boundaries of civility but could be directed at anyone, including institutions of power and people in positions of power), exclusionary extreme speech (explicitly or implicitly excluding people because of their belonging to a certain identity/community), and dangerous speech (with imminent danger of physical violence),37Dangerous speech definition is borrowed from Susan Benesch’s work (2012), and the distinction between derogatory extreme speech and exclusionary extreme speech draws from Udupa (2021). Full definitions of these terms are available at https://www.ai4dignity.gwi.uni-muenchen.de. See Benesch, Susan. “Dangerous Speech: A Proposal to Prevent Group Violence.” New York: World Policy Institute. 2012; Udupa, Sahana. “Digital Technology and Extreme Speech: Approaches to Counter Online Hate.” In United Nations Digital Transformation Strategy. Vol. April. New York: United Nations Department of Peace Operations. 2021a. https://doi.org/10.5282/ubm/epub.77473. fact-checkers were requested to label the passages under the three categories.

Each gathered passage ranged from a minimum sequence of words that comprises a meaningful unit in a particular language to about six to seven sentences. Fact-checkers from Brazil, Germany, India, and Kenya, who participated in the project, sourced the passages from different social media platforms they found relevant in their countries and those they were most familiar with. In Kenya, fact-checkers sourced the passages from WhatsApp, Twitter and Facebook; Indian fact-checkers gathered them from Twitter and Facebook; the Brazilian team from WhatsApp groups; and fact-checkers in Germany from Twitter, YouTube, Facebook, Instagram, Telegram and comments posted on the social media handles of news organizations and right-wing bloggers or politicians with large followings.

In the second step, fact-checkers uploaded the passages via a dedicated WordPress site onto a backend database, from which the data were extracted and formatted for NLP model building. They also marked the target groups for each instance of labeled speech. On the annotation form, they identified the target groups from a dropdown list that included “ethnic minorities, immigrants, religious minorities, sexual minorities, women, racialized groups, historically oppressed castes, indigenous groups and any other.” Only under “derogatory extreme speech” were annotators also able to select “politicians, legacy media, the state and civil society advocates for inclusive societies” as target groups. Fifty percent of the annotated passages were later cross-annotated by another fact-checker from the same country to check the inter-annotator agreement score.
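To make the agreement check concrete, the following is a minimal sketch of how an agreement score could be computed for cross-annotated passages. It is an illustration and not the project's own code: it assumes exactly two annotators per passage, nominal (unordered) labels and no missing annotations, and it computes Krippendorff's alpha, the statistic reported later in this paper. The function name and the toy labels are hypothetical.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(coder_a, coder_b):
    """Krippendorff's alpha for two coders, nominal labels, no missing data."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must label the same passages")

    # Coincidence matrix: every passage contributes both ordered label pairs.
    coincidences = Counter()
    for a, b in zip(coder_a, coder_b):
        coincidences[(a, b)] += 1
        coincidences[(b, a)] += 1

    # Marginal totals per label and the total number of pairable values.
    totals = Counter()
    for (label, _other), count in coincidences.items():
        totals[label] += count
    n = sum(totals.values())

    # Observed disagreement counts the off-diagonal coincidences.
    observed = sum(c for (a, b), c in coincidences.items() if a != b)
    # Expected disagreement is derived from the label marginals alone.
    expected = sum(totals[a] * totals[b] for a, b in permutations(totals, 2))
    if expected == 0:
        raise ValueError("alpha is undefined when only one label is used")
    return 1.0 - (n - 1) * observed / expected


# Toy example with hypothetical annotations of six passages.
first = ["derogatory", "derogatory", "exclusionary",
         "dangerous", "exclusionary", "derogatory"]
second = ["derogatory", "exclusionary", "exclusionary",
          "dangerous", "derogatory", "derogatory"]
alpha = krippendorff_alpha_nominal(first, second)  # 0.5 for this toy data
```

Values close to 1 indicate near-perfect agreement and values close to 0 indicate agreement no better than chance, which is why low scores are read as a sign of genuine ambiguity in the categories rather than simple annotator error.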

In the third step, we created a collaborative coding space called “Counterathon” (a marathon to counter hate) where AI developers and partnering fact-checkers entered into an assisted dialogue to assess classification algorithms and the training datasets involved in creating them. This dialogue was facilitated by academic researchers with regional expertise and a team of student researchers who took down notes, raised questions, displayed the datasets for discussion and transcribed the discussions. We also had a final phase of reannotation of over fifty percent of the passages from Kenya based on the feedback we received during the Counterathon about including a new category (large ethnic groups) in the target groups.

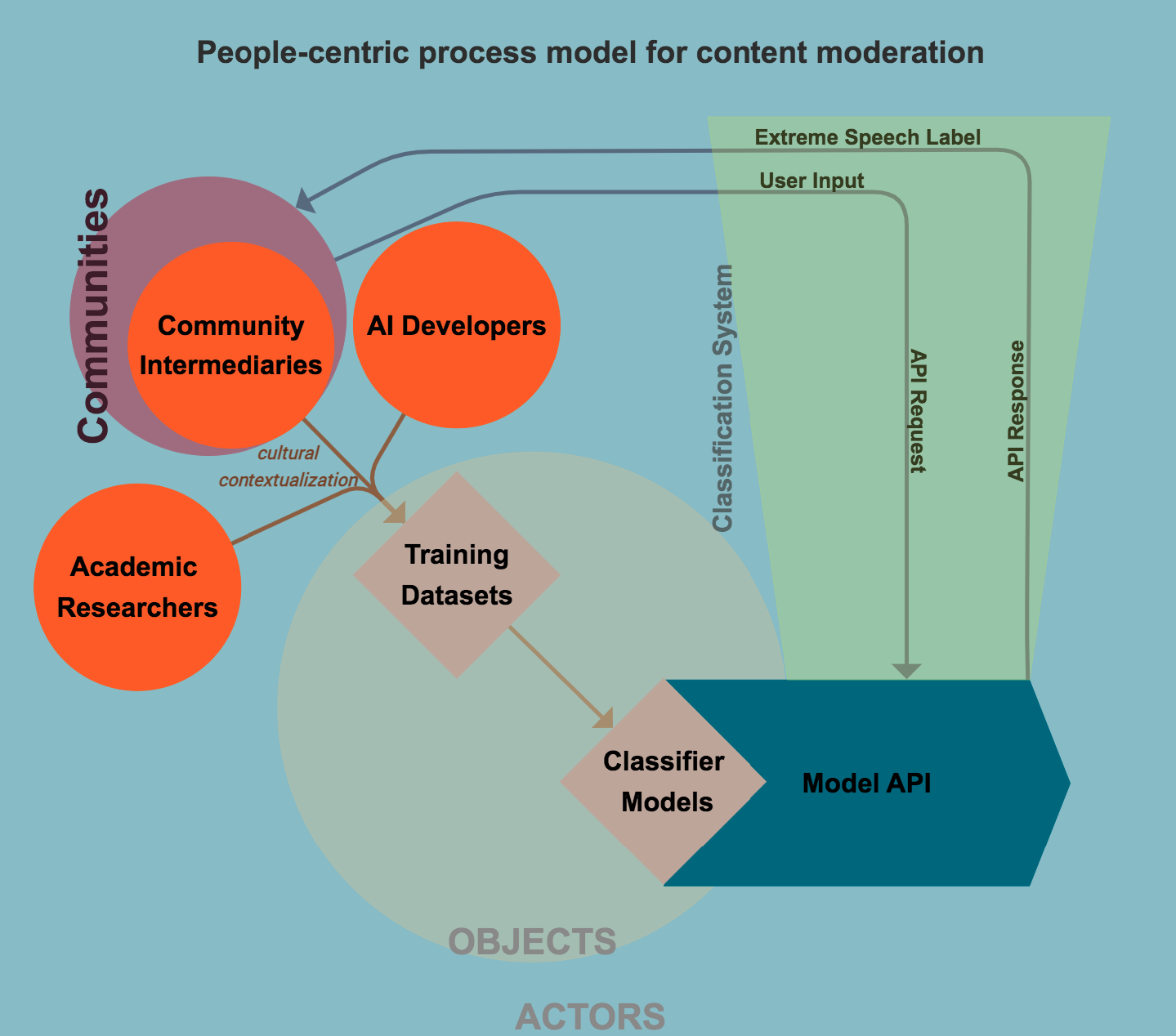

Through these steps, the project has aimed to stabilize a more encompassing collaborative structure for what might be called a “people-centric process model” in which “hybrid” models of human–machine filters are able to incorporate dynamic reciprocity between AI developers, academic researchers and community intermediaries such as independent fact-checkers on a regular basis, and the entire process is kept transparent with clear-enough guidelines for replication. Figure 1 illustrates the basic architecture and components of this people-centric content moderation process.

However, the exercise of involving communities in content moderation is time intensive and exhausting, and comes with the risks of handling contradictory inputs that require careful navigation and vetting. At the outset, context sensitivity is needed for label definitions. By defining derogatory extreme speech as distinct from exclusionary extreme speech and dangerous speech, the project has tried to locate uncivil language as possible efforts to speak against power in some instances, and in others, as early indications of exclusionary discourses that need closer inspection. Identification of target groups in each case provides a clue about the implications of online content, and whether the online post is merely derogatory or more serious. The three part typology has tried to bring more nuance to the label definitions instead of adopting an overarching term such as hate speech.

However, even with a clear enough list of labels, selecting annotators is a daunting challenge. Basic principles of avoiding dehumanizing language, a grounded understanding of vulnerable and historically disadvantaged communities, and knowledge of what kind of uncivil speech is aimed at challenging regressive power as opposed to legitimating harms within particular national or social contexts would serve as important guiding principles in selecting community annotators. The AI4Dignity project has sought to meet these parameters by involving fact-checkers with their close knowledge of extreme speech ecologies, professional training in fact-checking, linguistic competence and a broad commitment to social justice (as indicated by their involvement in peace initiatives or a record of publishing fact-checks to protect vulnerable populations).

By creating a dialogue between ethnographers, AI developers, and fact-checkers, the project has tried to resolve different problems in appraising content as they emerged during the process of annotation and in delineating the target groups. However, this exercise is only a first step in developing a process of community intermediation in AI cultures, and it requires further development and fine-tuning with future replications.

Building on the learnings and findings from the project, we highlight below two distinct elements of the process model as critical aspects of ethical scaling in content moderation.

Iteration and experiential knowledge

As the opening vignettes indicate, the process of defining the labels and classification of gathered passages during the project was intensely laborious and dotted with uncertainty and contradiction. These confusions were partly a result of our effort to move beyond a binary classification of extreme and non-extreme and capture the granularity of extreme speech in terms of distinguishing derogatory extreme speech, exclusionary extreme speech and dangerous speech, and different target groups for these types. For instance, the rationale behind including politicians, media and civil society representatives who are closer to establishments of power (even if they hold opposing views) as target groups under “derogatory extreme speech” was to track expressions that stretch the boundaries of civility as also a subversive practice. For policy actions, derogatory extreme speech would require closer inspection, and possible downranking, counter speech, monitoring, redirection and awareness raising but not necessarily removal of content. However, the other two categories (exclusionary extreme speech and dangerous speech) require removal, with the latter (dangerous speech) warranting urgent action. Derogatory extreme speech also presented a highly interesting corpus of data for research purposes as it represented online discourses that challenged the protocols of polite language to speak back to power, but it also constituted a volatile slippery ground on which what is comedic and merely insulting could quickly slide down to downright abuse and threat.38Udupa, Sahana. “Gaali Cultures: The Politics of Abusive Exchange on Social Media.” New Media and Society 20 (4), 2017, pp. 1506–22. https://doi.org/10.1177/1461444817698776. For content moderation, such derogatory expressions can serve as the earliest cultural cues to brewing and more hardboiled antagonisms.

During the course of the project, instances of uncertainty about the distinction between the three categories were plentiful, and the Krippendorff (2003) intercoder agreement score (alpha) between two fact-checkers from the same country averaged 0.24.39Although, as mentioned, there was consensus that all selected passages were instances of extreme speech. The inter-coder agreement scores are also similar to other works in the field: In Ross, Björn, Michael Rist, Guillermo Carbonell, Benjamin Cabrera, Nils Kurowsky, Michael Wojatzki. “Measuring the Reliability of Hate Speech Annotations: The Case of the European Refugee Crisis”, ArXiv:1701.08118 [cs.CL]. 2017, a German dataset, α was between 0.18 and 0.29, in Sap, Maarten, Saadia Gabriel, Lianhui Qin, Dan Jurafsky, Noah A. Smith, and Yejin Choi. “Social bias frames: Reasoning about social and power implications of language.” In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), 2020, the α score was 0.45, while in Ousidhoum, Nedjma, Zizheng Lin, Hongming Zhang, Yangqiu Song, and Dit-Yan Yeung. “Multilingual and multi-aspect hate speech analysis”, In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 2019, a multilingual dataset, α was between 0.15 and 0.24. Also, a majority of these works include neutral examples as well. However, two moments stand out as illustrative of the complexity. During several rounds of discussion, it became clear that the list of target groups was itself an active political choice, and it had to reflect the regional and national specificities to the extent possible. In the beginning, we had proposed a list of target groups that included ethnic minorities, immigrants, religious minorities, sexual minorities, racialized groups, historically oppressed indigenous groups and any other. 
Fact-checkers from Brazil pointed out the severity of online misogyny and suggested adding “women” to the list. Fact-checkers from Kenya pointed out that “ethnic minorities” was not a relevant category since Kikuyu and Kalenjin ethnic groups around whom a large proportion of extreme speech circulated were actually large ethnic groups. Small ethnic groups, they noted, did not play a significant role in the country’s political discourse. While this scenario itself revealed the position of minorities in the political landscape of the country, it was difficult to label extreme speech without giving the option of selecting “large ethnic groups” in the list of target groups. Fact-checkers from Germany pointed out that “refugees” were missing from the list, since immigrants—usually welcomed and desired at least for economic reasons—are different from refugees who are derided as unwanted. We were not able to implement this distinction during the course of the project, but we noted this as a significant point to incorporate in future iterations.

During the annotation process, fact-checkers brought up another knotty issue in relation to the list of target groups. Although politicians were listed only under the derogatory speech category, fact-checkers wondered what to make of politicians who are women or who have a migration background. The opening vignette from Kenya about the “Sugoi thief” signals a scenario, where politicians become a synecdoche for an entire targeted community. “Sawsan Chebli is a politician,” pointed out a fact-checker from Germany, “but she also has migration background.” Chebli, a German politician born to parents who migrated to Germany from Palestine, is a frequent target for right-wing groups. Fact-checkers from India highlighted the difficulty of placing Dalit politicians and Muslim politicians only under the category of “politicians” and therefore only under “derogatory speech” because targeting them could lead to exclusionary speech against the communities they represented. In such cases, we advised the fact-checkers to label this as exclusionary speech and identify the target groups of such passages as “ethnic minorities,” “women,” “historically disadvantaged caste groups,” “immigrants,” or other relevant labels.
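The constraint discussed here, that power-holding targets such as politicians are selectable only under derogatory extreme speech, can be expressed as a simple consistency check on an annotation. The sketch below is illustrative only: the set names, the function name and the message wording are assumptions, while the labels and target groups are taken from the lists described in this paper (including "large ethnic groups," added after the Counterathon).

```python
LABELS = {"derogatory", "exclusionary", "dangerous"}

# Target groups selectable under any of the three labels.
GENERAL_TARGETS = {
    "ethnic minorities", "immigrants", "religious minorities",
    "sexual minorities", "women", "racialized groups",
    "historically oppressed castes", "indigenous groups",
    "large ethnic groups", "any other",
}

# Target groups closer to establishments of power (derogatory label only).
POWER_TARGETS = {
    "politicians", "legacy media", "the state",
    "civil society advocates for inclusive societies",
}


def check_annotation(label, targets):
    """Return a list of problems found in one annotation (empty if none)."""
    problems = []
    if label not in LABELS:
        problems.append(f"unknown label: {label}")
    for target in sorted(targets):
        if target in POWER_TARGETS:
            if label != "derogatory":
                problems.append(
                    f"'{target}' is only selectable under derogatory extreme speech"
                )
        elif target not in GENERAL_TARGETS:
            problems.append(f"unknown target group: {target}")
    return problems


# A passage attacking a politician because of her migration background would,
# following the advice given to fact-checkers, be labeled exclusionary with
# the affected community as the target rather than "politicians".
ok = check_annotation("exclusionary", {"women", "immigrants"})
flagged = check_annotation("exclusionary", {"politicians"})
```

A check of this kind only enforces the form's rule. Deciding whether an attack on a politician is in fact directed at the community they represent remains a contextual judgment for the annotator.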

Some fact-checkers and participating academic intermediaries also suggested that the three labels—derogatory, exclusionary, and dangerous—could be broken down further to capture the granularity. For instance, under derogatory speech, there could be “intolerance talk” (speech that is intolerant of opposition); “delegitimization of victimhood” (gaslighting and undermining people’s experiences of threat and right to protection); and “celebratory exclusionary speech” (in which exclusionary discourse is ramped up not by using hurtful language but by celebrating the glory of the dominant group). Duncan Omanga, the academic expert on Kenya, objected to the last category and observed that “Mobilization of ethnic groups in Kenya by using glorifying discourses is frequent especially during the elections in the country. Labeling this as derogatory is complicated since it is internalized as the nature of politics and commonly legitimized.” Although several issues could not be resolved partly because of the limitations of time and resources in the project, curating such observations has been helpful in highlighting the importance of iteration in not only determining the labels but also linking the selection of labels with specific regulatory goals. In cases where removal of content versus retaining it is the primary regulatory objective, it is helpful to have a simpler classification, but breaking down the categories further would be important for research as well as for fine grained interventions involving counter speech and positive narratives targeting specific kinds of vitriolic exchange online.

Moreover, the value of iteration is crucial for embedding embodied knowledge of communities most affected by extreme speech into the annotation process, and for ensuring that categories represent the lived experiences and accretions of power built up over time. Without doubt, stark and traumatizing images and messages can be (and should be) spotted by automation since it helps to avoid the emotional costs of exposure to such content in online content moderation. This does not discount responsible news coverage on violence that can sensitize people about the harms of extreme content, but in the day-to-day content moderation operations for online discourses, automation can provide some means for (precariously employed) content moderators to avoid exposure to violent content. Beyond such obvious instances of dehumanizing and violent content, subtle and indirect forms of extreme expression require the keen attention and experiential knowledge of communities who advocate for, or themselves represent, groups targeted by extreme speech.

Participating fact-checkers in the project—being immigrants, LGBTQI+ persons or members of the targeted ethnic or caste groups—weighed in with their own difficult experiences with extreme speech and how fragments of speech acts they picked up for labeling were not merely “data points” but an active, embodied engagement with what they saw as disturbing trends in their lived worlds. Indeed, ethical scaling as conceptualized in AI4Dignity’s iterative exercise does not merely connect parts and components for actions that can magnify effects and enable efficiency, but grounds this entire process by connecting knowledges derived from the experiences of inhabiting and confronting the rough and coercive worlds of extreme speech. As the fact-checker from Brazil expressively shares their experience of spotting homophobic content in the opening vignettes of this essay, hatred that hides between the lines, conceals behind the metaphors, cloaks in ‘humor’ and mashups, or clothes itself in the repertoire of ‘plain facts’—the subtleties of speech that deliver hate in diverse forms—cannot be fully captured by cold analytical distance, or worse still, with an approach that regards moderation as a devalued, cost-incurring activity in corporate systems. As the fact-checker in the opening vignette intoned by referencing the hoax message on Biden appointing an LGBTQI+ person to head the education department, it is the feel for the brewing trouble and insidious coding of hatred between the lines that helps him to flag the trouble as it emerges in different guises:

As I told you, for example the transexual content was very typical hate speech included into a piece of misinformation, but not that explicit at all. So, you have to be in the position of someone who is being a target of hate speech/misinformation, to figure out that this piece is hate speech, not only misinformation. So that was making me kind of nervous, when I was reading newspapers every day and I was watching social media and I see that content spreading around, because this is my opinion on it and its much further, it’s much more dangerous than this [a mere piece of misinformation]. You are…you are telling people that it’s a problem that a transgender person, a transsexual is going to be in charge of education because somehow it’s a danger to our children. So, it makes me kind of uncomfortable and that’s why we decided to join the project [AI4Dignity].

As we navigated extreme speech passages and the thick narratives around how fact-checkers encountered and flagged them for the project, it became clear that iteration is an inevitably intricate and time intensive exercise. The AI4Dignity findings show that the performance of ML models (BERT) based on the datasets we gathered was comparable to the average performance of other hate speech detection projects, while the model performance in detecting target groups was above average.40The performance was also constrained by the fact that no comparison corpus for “neutral” passages was given, and instead only examples for the three labels of extreme speech were collected. However, our datasets are closer to real world instances of hateful language. Several hate speech detection projects have relied on querying of keywords, while AI4Dignity has sourced the passages from actual discussions online through community intermediaries. The performance of BERT on hate speech datasets is examined thoroughly in Swamy, Steve Durairaj, Anupam Jamatia, and Björn Gambäck. “Studying generalisability across abusive language detection datasets.” In Proceedings of the 23rd Conference on Computational Natural Language Learning (CoNLL), 2019. In Founta, Antigoni-Maria, Constantinos Djouvas, Despoina Chatzakou, Ilias Leontiadis, Jeremy Blackburn, Gianluca Stringhini, Athena Vakali, Michael Sirivianos, and Nicolas Kourtellis. “Large scale crowdsourcing and characterization of twitter abusive behavior”, In 11th International Conference on Web and Social Media, ICWSM 2018. AAAI Press, 2018, the F1 score is 69.6. In Davidson, Thomas, Dana Warmsley, Michael Macy, Ingmar Weber. “Automated hate speech detection and the problem of offensive language”, In International AAAI Conference on Web and Social Media, 2017, F1 is 77.3 while in Waseem, Zeerak, Dirk Hovy. “Hateful symbols or hateful people? Predictive features for hate speech detection on Twitter.” In Proceedings of the NAACL Student Research Workshop, 2016, F1 score is at 58.4. In all those datasets, the majority of content is neutral, an intuitively easier task. In our work, multilingual BERT (mBERT) can predict the extreme speech label of text with an F1 score of 84.8 for Brazil, 64.5 for Germany, 66.2 for India and 72.8 for Kenya. When predicting the target of extreme speech, mBERT scored 94.1 (LRAP, label ranking average precision) for Brazil, 90.3 in Germany, 92.8 in India and 85.6 in Kenya. These results underscore the point that ethical scaling is not merely about gauging the performance of the machine for its accuracy in the first instance but involves ethical means for scaling a complex process so that problems of cultural contextualization and bias are addressed through reflexive iterations in a systematic and transparent manner.
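For readers less familiar with the two metrics reported above, the following minimal sketch shows how they are defined. It is not the project's evaluation code. The paper does not state which F1 averaging was used, so macro-averaging is an assumption here, and the labels, scores and function names are hypothetical. Both functions return values between 0 and 1, whereas the figures above are on a 0 to 100 scale.

```python
def macro_f1(gold, predicted):
    """Unweighted mean of per-label F1 scores (macro-averaging is assumed)."""
    labels = sorted(set(gold) | set(predicted))
    scores = []
    for label in labels:
        pairs = list(zip(gold, predicted))
        tp = sum(1 for g, p in pairs if g == label and p == label)
        fp = sum(1 for g, p in pairs if g != label and p == label)
        fn = sum(1 for g, p in pairs if g == label and p != label)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def label_ranking_average_precision(true_sets, score_dicts):
    """LRAP: for each true target, the share of true targets among all
    targets scored at least as high, averaged per passage and overall.
    Assumes every passage has at least one true target group."""
    total = 0.0
    for true_labels, scores in zip(true_sets, score_dicts):
        per_label = []
        for label in true_labels:
            threshold = scores[label]
            rank = sum(1 for s in scores.values() if s >= threshold)
            hits = sum(1 for t in true_labels if scores[t] >= threshold)
            per_label.append(hits / rank)
        total += sum(per_label) / len(per_label)
    return total / len(true_sets)


# Toy label predictions for four hypothetical passages.
gold = ["derogatory", "derogatory", "exclusionary", "dangerous"]
pred = ["derogatory", "exclusionary", "exclusionary", "dangerous"]
f1 = macro_f1(gold, pred)  # 7/9, roughly 0.778

# Toy target-group scores for two hypothetical passages.
targets = [{"women"}, {"immigrants", "religious minorities"}]
model_scores = [
    {"women": 0.9, "immigrants": 0.2, "religious minorities": 0.1},
    {"women": 0.6, "immigrants": 0.7, "religious minorities": 0.3},
]
lrap = label_ranking_average_precision(targets, model_scores)  # 11/12
```

LRAP suits target detection because a single passage can target several groups at once: it rewards a model for ranking all the true target groups above the others, rather than for picking exactly one.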

Name-calling as seed expressions

Such an iterative process, while grounding content moderation, also offers specific entry points to catch signals from types of problematic content that do not contain obvious watchwords, and instead employ complex cultural references, local idioms or multimedia forms. We present one such entry point as a potential scalable strategy that can be developed further in future projects.

Our experience of working with longer real world expressions gathered by fact-checkers rather than keywords selected by academic annotators41A large number of machine learning models rely on keyword-based approaches for training data collection, but there have been efforts lately to “leverage a community-based classification of hateful language” by gathering posts and extracting keywords used commonly by self-identified right-wing groups as training data. See Saleem, Haji Mohammed, Kelly P. Dillon, Susan Benesch, and Derek Ruths. “A Web of Hate: Tackling Hate Speech in Online Social Spaces.” ArXiv Preprint ArXiv:1709.10159. 2017. The AI4Dignity project builds on the community-based classification approach instead of relying on keywords sourced by academic annotators. We have aimed to gather extreme speech data that is actively selected by community intermediaries, thereby uncovering characteristic complex expressions, including those containing more than a word. has shown the importance of name-calling as a useful shorthand to pick up relevant statistical signals for detecting extreme speech. This involves curating, with the help of community intermediaries such as fact-checkers, an evolving list of putdowns and name-calling that oppressive groups use in their extreme speech attacks, and mapping them onto different target groups with a contextual understanding of groups that are historically disadvantaged (e.g., Dalits in India), groups targeted (again) in a shifting context (for instance, the distinction between ‘refugees’ and ‘immigrants’ in Europe), those instrumentalized for partisan political gains and ideological hegemony (e.g., different ethnic groups in Kenya or the religious majority/religious minority distinction in India) or groups that are excluded because of a combination of oppressive factors (e.g., Muslims in India or Europe). 
This scaling strategy clarifies that the mere identification of name-calling and invectives without knowledge of target communities can be misleading.

For instance, interactions with fact-checkers helped us to sieve over twenty thousand extreme speech passages for specific expressions that can potentially lead to exclusion, threat and even physical danger. Most of these provocative and contentious expressions were still nested in the passages that fact-checkers labeled as “derogatory extreme speech”, but, as mentioned earlier, derogatory expressions could be used to build a catalogue for early warning signals with the potential to normalize and banalize exclusion.

Interestingly, we found that such expressions are not always single keywords, although some unigrams are helpful in getting a sense of the discourse. They are trigrams or passages with a longer word count42The average word count for passages in German was 24.9; in Hindi was 28.9; in Portuguese was 16.2; in Swahili was 14.7; in English & German was 22.9; in English & Hindi was 33.0; in English & Swahili was 24.3; in English in Germany was 6.3; in English in India was 24.1; in English in Kenya was 28.0. that often have no known trigger words but contain implicit meanings, indirect dog whistles and ingroup idioms. In Germany, exclusionary extreme speech passages that fact-checkers gathered had several instances of “gehört nicht zu” [does not belong] or “nicht mehr” [no more or a sentiment of having lost something], signaling a hostile opposition to refugees and immigrants. Some expressions had keywords that were popularized by right-wing politicians and other public figures, either by coining new compound words or injecting well-meaning descriptions with insidious sarcasm. For instance, in right-wing discourses, it was common to refer to refugees as “Goldstücke.” A German politician from the center-left SPD party, Martin Schulz, in a speech at Hochschule Heidelberg made the statement, “Was die Flüchtlinge zu uns bringen, ist wertvoller als Gold. Es ist der unbeirrbare Glaube an den Traum von Europa. Ein Traum, der uns irgendwann verloren gegangen ist [What refugees bring to us is more valuable than gold. It is the unwavering belief in the dream of Europe. A dream that we lost at some point].”43Stern.de. “Bremer Landgericht Gibt Facebook Recht: Begriff ‘Goldstück’ Kann Hetze Sein.” June 21, 2019. https://www.stern.de/digital/bremer-landgericht-gibt-facebook-recht–begriff–goldstueck–kann-hetze-sein-8763618.html.

In xenophobic circles, this expression was picked up and turned into the term “Goldstücke,” which is sarcastically used to refer to immigrants/refugees. Similarly, academic intermediary Laura Csuka in the German team highlighted another interesting expression, “in der BRD” [in the Federal Republic of Germany], as indicating an older age group whose nostalgia could give a clue about its possible mobilization for xenophobic ends.
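The observation that recurring multi-word expressions such as “gehört nicht zu” carry signal even without trigger words can be illustrated with a simple n-gram count. The sketch below is a hypothetical illustration, not the project's pipeline: the helper names `ngrams` and `frequent_ngrams`, the whitespace-and-punctuation tokenization, and the three example sentences are all assumptions made for demonstration.

```python
import re
from collections import Counter


def ngrams(text, n):
    """Lowercase the text, split it into word tokens and return its n-grams."""
    tokens = re.findall(r"\w+", text.lower())
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def frequent_ngrams(passages, n=3, min_count=2):
    """Return (n-gram, passage count) pairs seen in at least min_count passages."""
    counts = Counter()
    for passage in passages:
        # set() so that each passage contributes at most once per n-gram.
        counts.update(set(ngrams(passage, n)))
    return [(gram, c) for gram, c in counts.most_common() if c >= min_count]


# Invented placeholder sentences, not passages from the dataset.
examples = [
    "Das gehört nicht zu unserem Land",
    "So etwas gehört nicht zu uns",
    "Ein ganz anderer Satz",
]
print(frequent_ngrams(examples))
```

A count of this kind only surfaces candidates. As the examples in this section show, deciding whether a recurring phrase is a dog whistle still depends on the contextual knowledge of community intermediaries.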

In Kenya, the term “Jorabuon” used by Luos refers to Kikuyus, and hence, as one of the fact-checkers pointed out, “even if they are communicating the rest in English, this main term is in the mother tongue and could seed hostility.” For communicative purposes, it also holds the value of in-group coding, since terms such as this one, at least for some time, would be intelligible to the community that coins it or appropriates it. In this case, Luos were sharing the term “Jorabuon” to refer to Kikuyus. The word “rabuon” refers to Irish potatoes. Kikuyu, in this coinage, are likened to Irish potatoes since their cuisine prominently features this root, and the mocking name marks them as a distinct group. “It is used by Luos when they don’t want the Kikuyu to realize that they are talking about them,” explained a fact-checker. Such acts of wordplay that test the limits of usage standards gain momentum especially during the elections when representatives of different ethnic groups contest key positions.

The hashtag #religionofpeace holds a similar performative power within religious majoritarian discourses in India. All the participating fact-checkers labeled passages containing this hashtag as derogatory. One of them explained, “#religionofpeace is a derogatory term [aimed at Muslims] because the irony is implied and clear for everybody.” Certain keywords are especially caustic, they pointed out, since they cannot be used in any well-meaning context. One of them explained, “Take the case of ‘Bhimte,’ which is an extremely derogatory word used against the marginalized Dalit community in India. I don’t think there is any way you can use it and say I did not mean that [as an insult]. This one word can convert any sentence into hate speech.” Fact-checkers pointed to a panoply of racist expressions and coded allusions to deride Muslims and Dalits, including “Mulle,” “Madrasa chaap Moulvi” [referring to Muslim religious education centers] and “hara virus” [green virus, the color green depicting Muslims], and the more insidious Potassium Oxide [K2O, which phonetically alludes to “Katuwon”] and “Ola Uber” [two ride-hailing apps whose names together phonetically resemble Alla Ho Akbar].

Within online discourses, instrumental use of shifting expressions of name-calling, putdowns and invectives is structurally similar to what Yarimar Bonilla and Jonathan Rosa eloquently describe as the metadiscursive functions of hashtags in “forging a shared political temporality,” which also “functions semiotically by marking the intended significance of an utterance.”44Bonilla, Yarimar, and Jonathan Rosa. “#Ferguson: Digital Protest, Hashtag Ethnography and the Racial Politics of Social Media in the United States.” American Ethnologist 42 (1), 2015, pp. 4–17. https://doi.org/10.1111/amet.12112, p. 5. Since name-calling in extreme speech contexts takes up the additional communicative function of coding the expressions for in-group sharing, some of them are so heavily coded that anyone outside the community would be confused or completely fail to grasp the intended meaning. For instance, in the India list, fact-checkers highlighted an intriguing expression in Hindi, “ke naam par” [in the name of]. One of the participating fact-checkers understood this expression as something that could mean “in the name of the nation,” signaling a hypernationalistic rhetoric, but thought it did not have any vitriolic edge. Another fact-checker soon interjected and explained: “‘Ke naam par’ is used for the scheduled caste community because they would say ‘In the name of scheduled castes’ when they are taking up the reservation in the education system and jobs.45Scheduled castes and scheduled tribes are bureaucratic terms to designate most oppressed caste groups for state affirmation policies in India. This is a very common way to insult scheduled castes because they are called people who are always ready to take up everything that is coming free, mainly jobs or seats in medical and engineering institutes.” Although “ke naam par” is invoked in a variety of instances including its use as a common connecting phrase in Hindi, its specific invocation in right-wing discursive contexts revealed its function as a coded in-joke. During these instances of exchange between fact-checkers, it became clear to us that iteration involved not only feeding the AI models with more data but also a meaningful dialogue between community intermediaries and academics so that a fuller scope of the semiotic possibilities of coded expressions comes into view.

To be sure, many name-calling expressions and putdowns have an inevitable open-endedness and appear in diverse contexts, including well-meaning invocations for inclusive politics and news reportage, but they still serve as useful signaling devices for further examination. In most cases, participating fact-checkers brought their keen understanding of the extreme speech landscape, avowing that they have a “sense” for the proximate conversational time-space in which such expressions appeared online. As a fact-checker from India put it, they have “a grasp of the intentions” of users who posted them.
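One way to picture the seed-expression strategy is as a small, evolving lexicon in which each expression is stored together with the target group and country context supplied by community intermediaries. The sketch below is a hypothetical illustration under our own assumptions: the `SeedExpression` structure, the `flag_for_review` helper and the simple substring match are not the project's implementation, and the three entries merely restate examples discussed above. A match only routes a passage to further examination; it does not classify it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeedExpression:
    expression: str    # name-calling term or phrase curated by intermediaries
    target_group: str  # group the expression is aimed at in this context
    country: str       # context in which the mapping holds


# Illustrative entries taken from the examples discussed in this section.
SEED_EXPRESSIONS = [
    SeedExpression("Goldstücke", "refugees and immigrants", "Germany"),
    SeedExpression("Jorabuon", "Kikuyu", "Kenya"),
    SeedExpression("#religionofpeace", "Muslims", "India"),
]


def flag_for_review(passage, country, lexicon=SEED_EXPRESSIONS):
    """Return the seed expressions found in a passage for the given country.

    A hit is a signal for human examination, not a verdict: the same
    expression can appear in news reportage or counter-speech.
    """
    text = passage.casefold()
    return [
        seed for seed in lexicon
        if seed.country == country and seed.expression.casefold() in text
    ]


# A neutral, invented sentence that merely mentions the term is still flagged,
# which is why the output feeds into review rather than automated removal.
hits = flag_for_review("Der Begriff Goldstücke wird sarkastisch verwendet", "Germany")
print([hit.target_group for hit in hits])
```

Keeping the country alongside each expression reflects the point made earlier that identifying name-calling without knowledge of the target communities can be misleading.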

Are existing machine learning models and content moderation systems equipped to detect such expressions identified through collaborative dialogue and iteration? We carried out two tests and found several gaps and limitations in the extreme speech detection and content moderation practices of large social media companies such as Google and Twitter. Although Facebook and WhatsApp constituted prominent sources of extreme speech instances that fact-checkers gathered for the project, we were unable to include them in the tests due to severe restrictions on data access on these platforms and applications.

Perspective API test

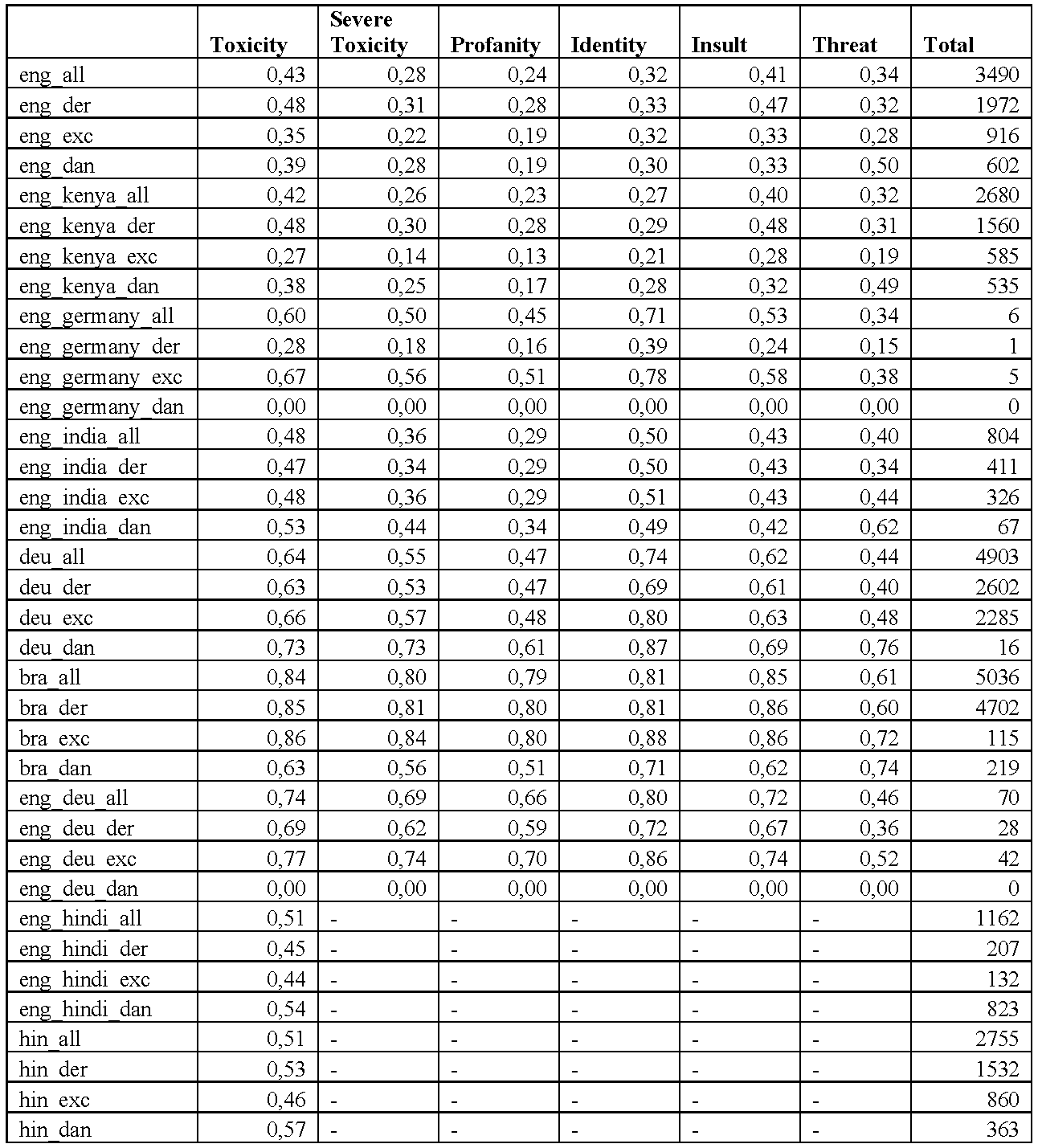

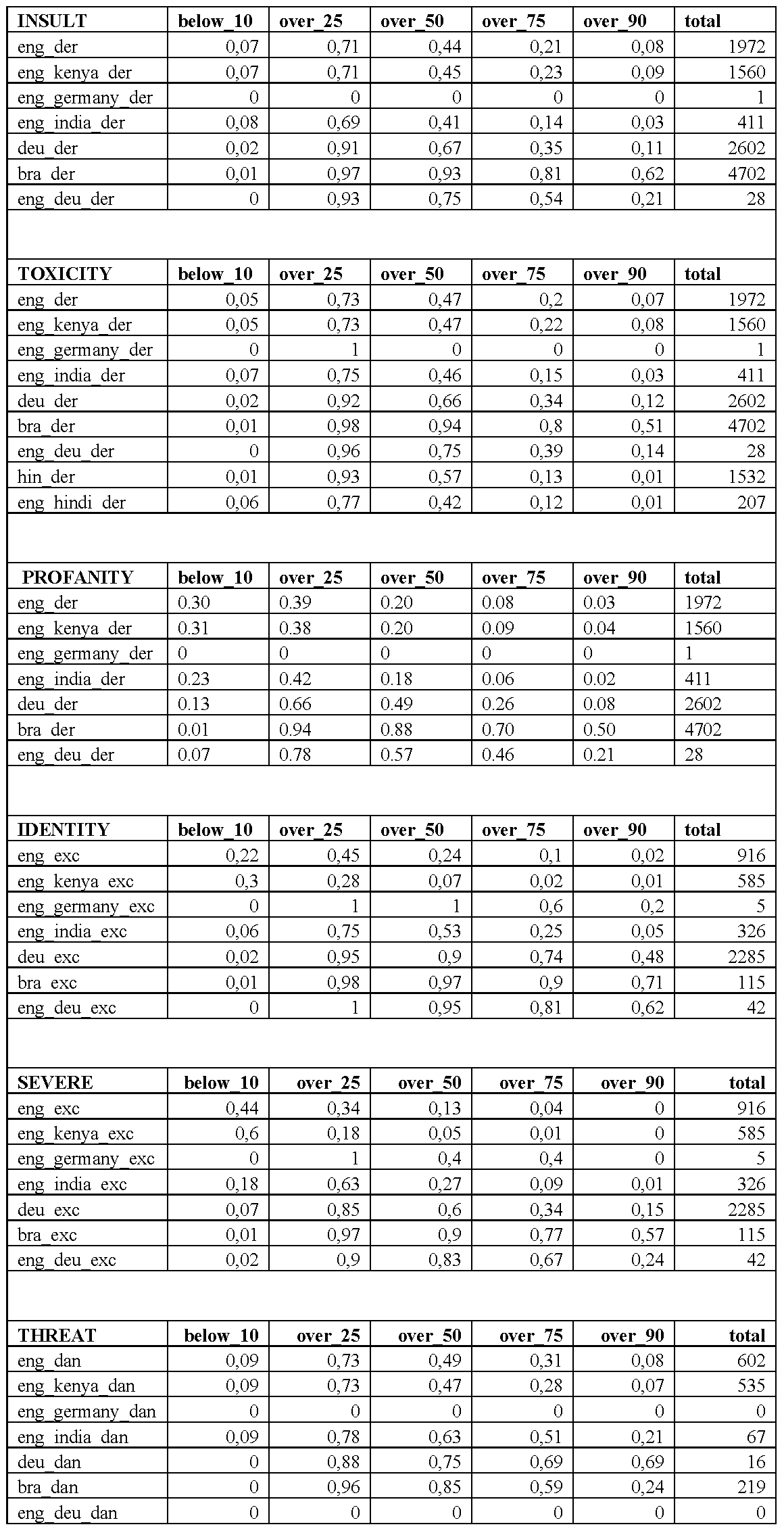

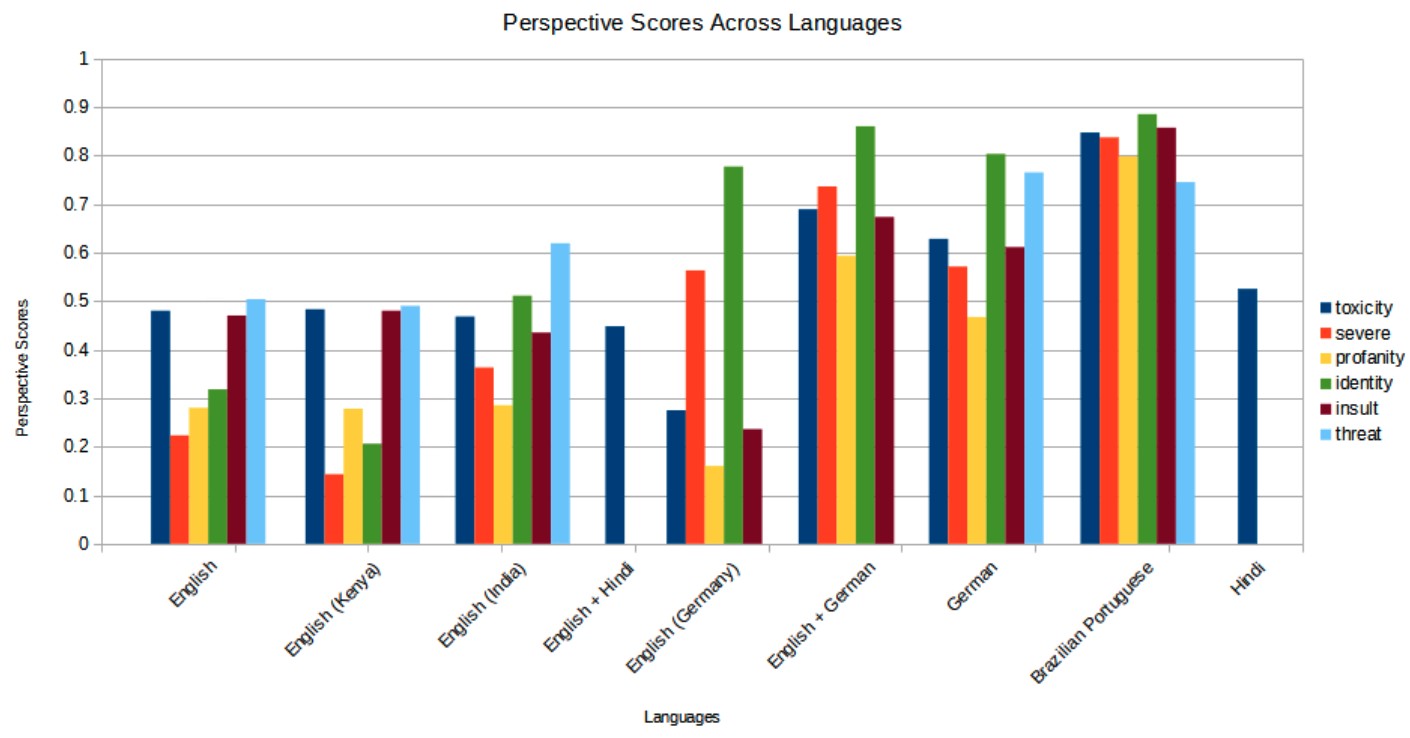

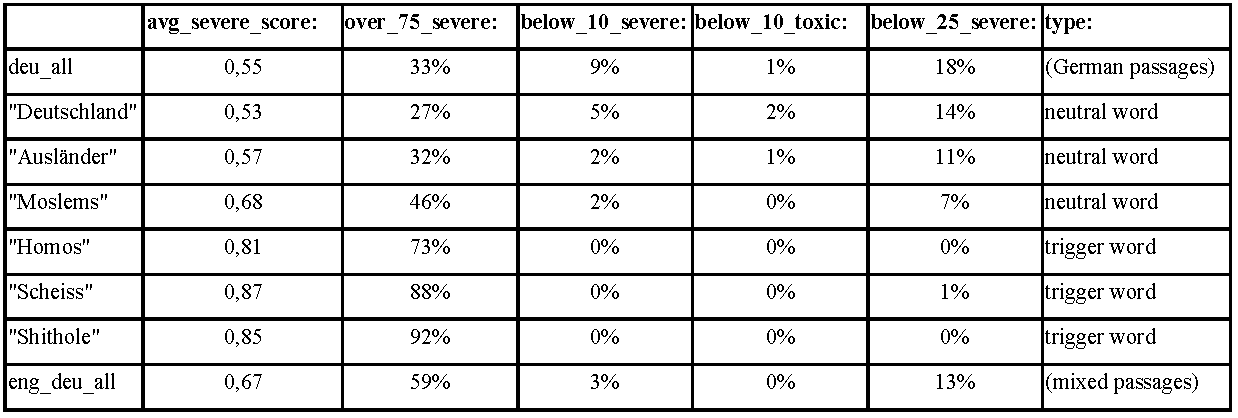

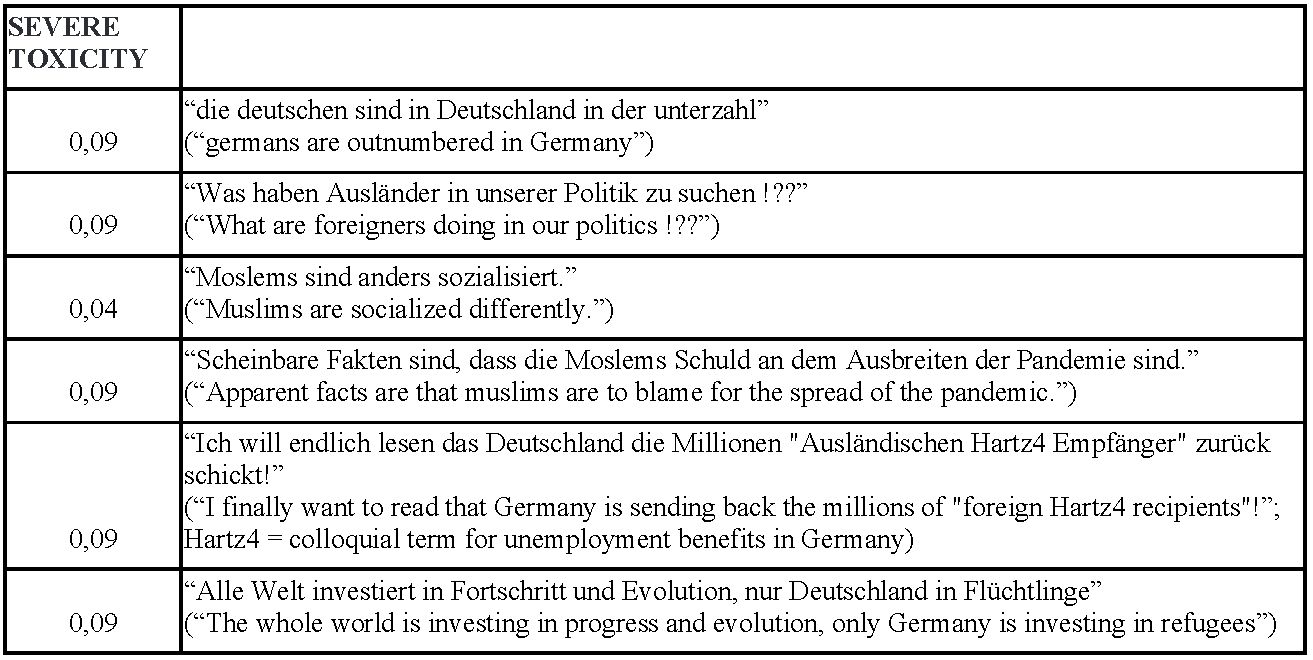

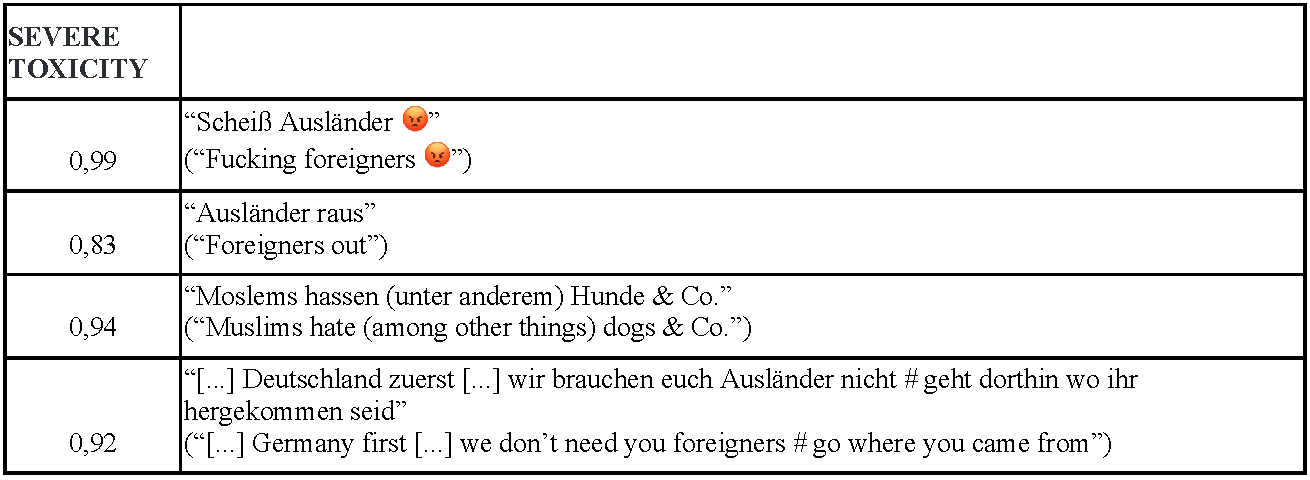

For the first test, we ran relevant passages in the project database on Perspective API, a machine learning model developed by Google to assign a toxicity score (see Table 1).46https://www.perspectiveapi.com accessed 13 July 2021. We obtained an API key for Perspective47https://support.perspectiveapi.com/s/docs-get-started to run the test. Since Perspective API supports only English, French, German, Italian, Portuguese, Russian and Spanish for different attributes and Hindi only for the “toxicity” attribute, data for English (3,761 passages from all the countries), German (4,945 passages), Portuguese (5,245 passages), English/German (69 passages), Hindi (2,775 passages) and Hindi/English (1,162 passages) for a total of 17,957 passages were tested on available attributes. While accessing the API, the language of the input passages was not set, allowing Perspective to predict the language from the text. This is likely to be a more realistic scenario since content moderation tools often do not have the metadata on language. We computed the six attributes that Perspective API identifies (toxicity, severe toxicity, identity attack, threat, profanity and insult) for all the selected passages.48For descriptions of these categories, see https://developers.perspectiveapi.com/s/about-the-api-attributes-and-languages. Since the API restricts users to only one request per second, an artificial delay of 1.1 seconds was added between consecutive requests so that all requests are processed. The extra 0.1 seconds served as a buffer for any potential latency issues.
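The request procedure described above can be sketched roughly as follows. This is a minimal illustration rather than the script used for the test. The helper names (`build_request`, `post_to_perspective`, `extract_scores`, `score_passages`) are our own, and the endpoint and JSON fields follow the publicly documented `comments:analyze` method as we understand it, so they should be checked against the current Perspective documentation. The `languages` field is deliberately left out so that the API detects the language itself, and a pause of 1.1 seconds separates consecutive requests.

```python
import json
import time
import urllib.request

# Endpoint of the comments:analyze method (verify against current documentation).
API_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"
ATTRIBUTES = ["TOXICITY", "SEVERE_TOXICITY", "IDENTITY_ATTACK",
              "THREAT", "PROFANITY", "INSULT"]


def build_request(text, attributes=ATTRIBUTES):
    """Build the JSON body; no "languages" key, so the API auto-detects it."""
    return {
        "comment": {"text": text},
        "requestedAttributes": {name: {} for name in attributes},
    }


def post_to_perspective(body, api_key):
    """Send one request and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{API_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def extract_scores(response):
    """Map each returned attribute to its summary score (between 0 and 1)."""
    return {name: data["summaryScore"]["value"]
            for name, data in response["attributeScores"].items()}


def score_passages(passages, send, delay=1.1, sleep=time.sleep):
    """Score passages one by one, pausing `delay` seconds between requests."""
    results = []
    for index, text in enumerate(passages):
        if index:  # no pause needed before the first request
            sleep(delay)
        results.append(extract_scores(send(build_request(text))))
    return results
```

With a valid key, a run could look like `score_passages(passages, send=lambda body: post_to_perspective(body, api_key))`. Because Hindi is supported only for the toxicity attribute, a real run would restrict `attributes` for Hindi passages; that handling is omitted here. Passing `send` and `sleep` as parameters keeps the rate-limiting logic testable without network access.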

We computed the averages for the three AI4Dignity labels (derogatory, exclusionary and dangerous speech) for the above languages. A major limitation is that mapping the three labels used in AI4Dignity to the Perspective API attributes is not straightforward. Perspective attribute scores are expressed as percentages: the higher the percentage, the higher the chance a ‘human annotator’ would agree with the attribute. Based on the definitions of the attributes in both projects, we interpreted correspondence between derogatory extreme speech in AI4Dignity and toxicity, profanity and insult in the Perspective model; between exclusionary extreme speech and severe toxicity and identity attack; and between dangerous speech and threat.49Derogatory, exclusionary and dangerous forms of extreme speech collected in our dataset do not correspond to mild forms of toxicity such as positive use of curse words as included in the “toxicity” class of Perspective API: “severe toxicity: This attribute is much less sensitive to more mild forms of toxicity, such as comments that include positive uses of curse words.” https://support.perspectiveapi.com/s/about-the-api-attributes-and-languages.
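The correspondence described above can be written down as a simple lookup table, with averages computed per label over the mapped attributes. The sketch below is illustrative: the names `LABEL_TO_ATTRIBUTES` and `average_scores_by_label`, the record format, and the choice to average only the mapped attributes are our assumptions, and the scores in the example are invented.

```python
from collections import defaultdict

# Interpreted correspondence between AI4Dignity labels and Perspective attributes.
LABEL_TO_ATTRIBUTES = {
    "derogatory": ["TOXICITY", "PROFANITY", "INSULT"],
    "exclusionary": ["SEVERE_TOXICITY", "IDENTITY_ATTACK"],
    "dangerous": ["THREAT"],
}


def average_scores_by_label(records):
    """Average the mapped attribute scores for each extreme speech label.

    records: iterable of (label, {attribute: score}) pairs, one per passage.
    Attributes missing for a passage (for example, unsupported for its
    language) are left out of that attribute's average.
    """
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for label, scores in records:
        for attribute in LABEL_TO_ATTRIBUTES[label]:
            if attribute in scores:
                sums[label][attribute] += scores[attribute]
                counts[label][attribute] += 1
    return {
        label: {a: sums[label][a] / counts[label][a] for a in sums[label]}
        for label in sums
    }


# Invented scores for illustration only.
records = [
    ("derogatory", {"TOXICITY": 0.2, "INSULT": 0.4}),
    ("derogatory", {"TOXICITY": 0.6, "INSULT": 0.2}),
    ("dangerous", {"THREAT": 0.5, "TOXICITY": 0.9}),
]
print(average_scores_by_label(records))
```

A low average on the mapped attribute, such as a low threat score for passages that fact-checkers labeled as dangerous speech, would point to the kind of gap this test was designed to probe.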