The Information Disorder Update: November 5, 2018

Amid warnings of a looming civil war and an immigrant “invasion” from Central America, the 2018 midterm campaign has thrived on hyperbole and misinformation that have become weapons in an online battlefield.

In this digital warfare, the angriest, most extreme voices have dominated the political arena. Finally, on the eve of the midterm election, the online vitriol has morphed into violence, including pipe bombs mailed to perceived foes and a massacre in a Pittsburgh synagogue.

The Information Disorder Lab team has monitored this uncivil internet conversation for six months, with a focus on the midterm campaign. We’ve seen a significant volume of outright fabrication on social media and websites. But our research team has seen an even greater share of misleading information — content that can claim a tenuous connection to fact but is twisted or manipulated, either innocently or intentionally.

Beyond those straightforward forms of mis- and disinformation, we’ve seen less obvious but equally dangerous problems: online hate and fear. These are also cases of information disorder; they use and abuse emotions to polarize and demonize.

During this campaign season, the major social media platforms have begun to respond more aggressively to confront these challenges. Twitter and Facebook have removed millions of fake and “inauthentic” accounts. Facebook created a war room to monitor and act against disinformation. YouTube removed conspiracy theorists including Alex Jones and Mike Adams. Gab was shut down, at least briefly. This showdown is certain to intensify as we turn instantly from the midterms to the 2020 presidential campaign.

While the platforms have taken action against some Russian and Iranian accounts, it’s clear to us that a significant proportion of disinformation infecting U.S. platforms is domestic, with Americans targeting Americans. Solutions will have to be homegrown.

Here’s a sketch of the key trends we have documented:

Hate

Race and religion provided ammunition for online hatemongers.

- Muslim candidates have run in unprecedented numbers in the 2018 midterms, and some have been pelted with misinformation. Twitter users accused Ilhan Omar, a Democrat running for Congress in Minnesota, of following Sharia law and marrying her brother, among other groundless allegations. Other candidates such as Rashida Tlaib have been judged guilty by association with Islamic organizations or other Muslims such as activist Linda Sarsour. California candidate Campa-Najjar was said to have terrorist links because his grandfather had helped stage the Munich terror attack against Israeli athletes in 1972. In fact, the grandfather died 16 years before Campa-Najjar was born in San Diego.

- The Pittsburgh synagogue attacker left a long trail of anti-Semitic rants on Gab, and the poison didn’t end with the mass shooting. The shooter’s supporters took to the internet, lauding the suspect with the hashtag #HeroRobertBowers. Several users continued to “upvote” Bowers’ final post until Gab was shut down (it came back online Nov. 5). Anti-Semitic abuse has surged during the campaign, much of it aimed at billionaire activist George Soros. It persisted throughout the week after the attack; a 4chan user called for genocide against “Jews, the greatest evil the world has ever known.”

- Racist social media posts even exploited Halloween, with anonymous users on 4chan sharing instructions on how to make “It’s Okay to be White” memes designed to provoke media coverage amplifying the idea. Black candidates drew disparaging racist tweets: one derided California Rep. Maxine Waters as “low IQ,” echoing a tweet from President Trump in June. African-American gubernatorial candidates Stacey Abrams in Georgia and Andrew Gillum in Florida were falsely labeled socialist or communist, such as in this manipulated image.

Fear

Misinformation often preyed on emotions, reinforcing the findings of researchers that the more extreme the sentiment, the more likely a social media post is to provoke fear or anger — and the more likely it is to whip up more online traffic.

- The principal target for fearmongers’ disinformation was illegal immigration. Social media language embraced military terms, with a growing emphasis on threats of “invasion,” as in one post that was retweeted nearly 5,000 times. Militia groups at the U.S. border told the IDLab they were appealing for volunteers to help the border police combat the approaching Central American migrants, whom they claimed numbered more than 40,000, ten times the number estimated by Mexican authorities. A YouTube video making a similar claim drew 4,800 views.

- The immigrant fears aligned with a broader confrontational theme pushed by far-right conspiracy theorists that another civil war is looming in the United States. This became a coordinated campaign waged by Mike Adams, founder of the much-criticized Natural News website. Adams used a network of similar far-right sites to spread several articles warning of civil war, and he made a video promoting the idea that was viewed more than 50,000 times on Brighteon, a fringe alternative to YouTube.

- As the midterm voting approached, heated social media battles swirled over the integrity of the election itself. Democrats and their online supporters accused Republican state officials, sometimes inaccurately, of conducting coordinated voter purges that eliminated millions of voters from the rolls, many of them people of color. For their part, Republicans raised allegations of potential serious fraud in the form of votes by vast numbers of undocumented immigrants — despite the absence of any evidence of significant voter fraud. Some social media versions of these arguments dispensed with nuance and context, contributing to a climate of mistrust.

- As early voting began in many states, disinformation emerged about the voting process itself. A Facebook group called The Deplorables insisted that George Soros remove his voting machines from states. (He has none.) The post had 15,000 shares.

Conspiracy

Alleged conspiracies took on energy online, usually without a shred of evidence but sometimes tied to a grain of fact:

- The long-simmering QAnon conspiracy movement leaped into the mainstream media at a rally by President Trump on July 31; beforehand, participants on 4chan had organized to make and sell T-shirts for those who planned to attend the rally. Major media organizations then piled onto the story, generating front-page coverage for what had been a fringe far-right movement.

- Soros became the target of several major conspiratorial themes, many of them anti-Semitic: he was falsely said to have funded the legal costs of Christine Blasey Ford in the Kavanaugh hearings; he was falsely alleged to be behind the protesters in the elevator who accosted Sen. Jeff Flake (they worked for a non-profit that gets some funding from his foundation); he was falsely said to have financed the Central American caravan; and he was accused of being the largest donor to Democrat Beto O’Rourke in Texas. Groups including the Anti-Defamation League said the wave of online attacks on Soros threatened to inflame anti-Semitism in the country; Soros was among those who received mailed pipe bombs from a man who left a trail of angry, conspiracy-laden social media posts.

Sarcasm

Amid this grim social media torrent, there also was an element of humor, even if often barbed and built on falsehoods. Manipulated and fabricated memes surged throughout the midterm race.

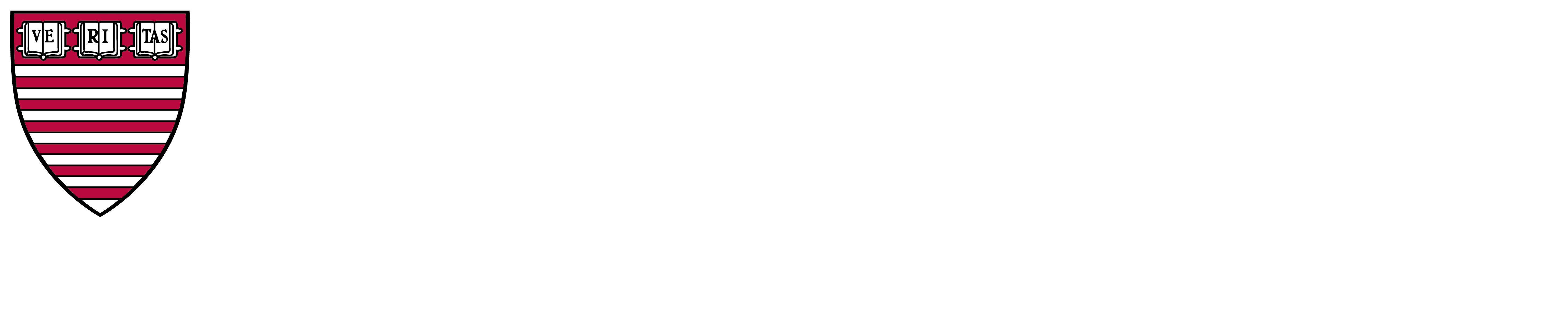

- NPCs, or non-playable characters, became a staple of the right to portray liberals as easily manipulated. Conspiracy theorist Alex Jones’ Infowars website promoted a contest for the best meme “capable of reflecting the Left’s robotic and predictable nature, with a humorous twist.” Twitter banned hundreds of accounts with NPC avatars that were tweeting false information about the midterm elections.

- The Brett Kavanaugh hearings generated memes poking fun not only at Kavanaugh and his accuser, Christine Blasey Ford, but also at spinoff themes such as beer-drinking.

- A fabricated interview portraying New York Democrat Alexandria Ocasio-Cortez as inept drew more than 1.5 million video views in one day.

Techniques

Recycled Content

- Numerous examples of misinforming content appear when old images, stories and videos are recycled as if they were current. When the critical temporal context is omitted, confusion is likely. This tactic is pervasive and powerful. When a user sees a story that resonates because of current events, they can share it aggressively without reflecting on the timestamp. In many cases the original information was or is true, but is not relevant today. In other cases, old false memes or ideas reappear despite having been previously debunked.

False Connection

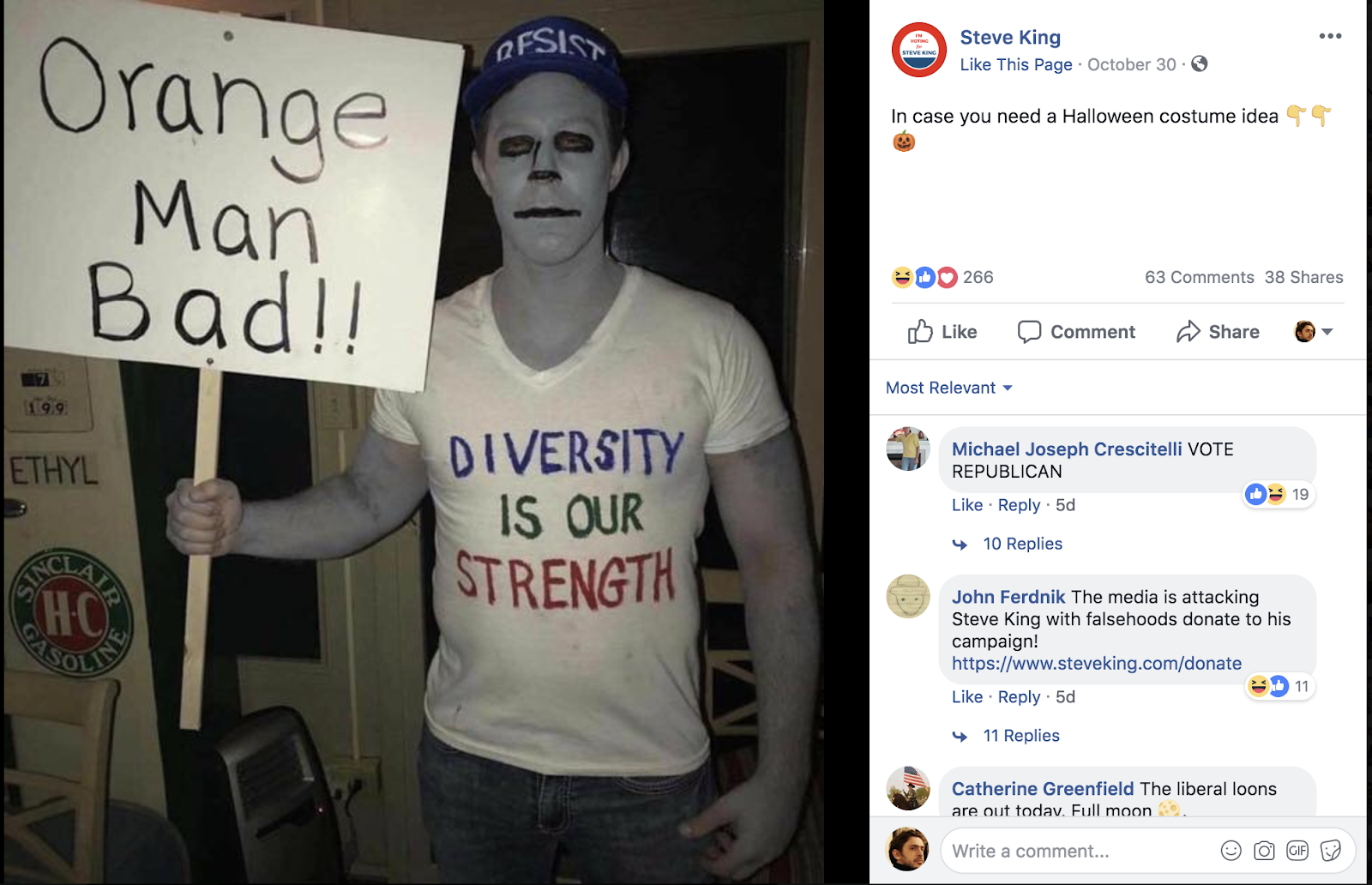

False connections that inaccurately link people and ideas abound on social media. These connections are created directly, when a quote is falsely attributed to a person; through innuendo, when an open-ended question is asked (“Soros?”); and through images, when a photograph is falsely associated with a location or moment.

- Meme Implying Quote From NJ Governor

- Rep. Gaetz Questioning Source Of Caravan Cash, “Soros?”

- Homeless Image Falsely Located In SF

Once the votes are tallied and attention turns to the outcomes of the midterm elections, the IDLab will assess the patterns in information disorder that emerged in the 2018 voting. We welcome your thoughts and tips as we deconstruct how information was weaponized in this election to better understand how to monitor and investigate the phenomenon in the lead-up to 2020.