This report was published by the Council of Europe, with support from the Shorenstein Center on Media, Politics and Public Policy at Harvard Kennedy School and First Draft. Reproduced with permission of the Council of Europe.

The opinions expressed in this work are the responsibility of the authors and do not necessarily reflect the official policy of the Council of Europe.

All rights reserved. No part of this publication may be translated, reproduced or transmitted in any form or by any means without the prior permission in writing from the Directorate of Communications (F-67075 Strasbourg Cedex or publishing@coe.int). Report and photos © Council of Europe, October 2017. www.coe.int


Contents

The Three Types of Information Disorder

The Phases and Elements of Information Disorder

The Three Phases of Information Disorder

The Three Elements of Information Disorder

1) The Agents: Who are they and what motivates them?

2) The Messages: What format do they take?

3) Interpreters: How do they make sense of the messages?

Part 2: Challenges of filter bubbles and echo chambers

Appendix: European Fact-checking and Debunking Initiatives

Authors’ Biographies

Claire Wardle, PhD

Claire Wardle is the Executive Director of First Draft, which is dedicated to finding solutions to the challenges associated with trust and truth in the digital age. Claire is also a Research Fellow at the Shorenstein Center on Media, Politics and Public Policy at the Harvard Kennedy School. She sits on the World Economic Forum’s Global Agenda Council on the Future of Information and Entertainment. She was previously the Research Director at the Tow Center for Digital Journalism at Columbia Journalism School, head of social media for the UN Refugee Agency and Director of News Services for Storyful. She is one of the world’s experts on user-generated content, and has led two substantial research projects investigating how it is handled by news organizations. In 2009 she was asked by the BBC to develop a comprehensive social media training curriculum for the organization, and she led a team of 19 staff delivering training around the world. She holds a PhD in Communication and an MA in Political Science from the University of Pennsylvania, where she won a prestigious Thouron Scholarship. She started her career as a professor at Cardiff University’s School of Journalism, Media and Cultural Studies.

Hossein Derakhshan

Hossein Derakhshan is an Iranian-Canadian writer and researcher. The pioneer of blogging in Iran in the early 2000s, he later spent six years in prison there over his writings and web activities. He is the author of ‘The Web We Have to Save’ (Matter, July 2015), which was widely translated and published around the world. His current research is focused on the theory and socio-political implications of digital and social media. His writings have appeared in Libération, Die Zeit, the New York Times, MIT Technology Review, and The Guardian. He studied Sociology in Tehran and Media and Communication in London.

Executive Summary

This report is an attempt to comprehensively examine information disorder and its related challenges, such as filter bubbles and echo chambers. While the historical impact of rumours and fabricated content has been well documented, we argue that contemporary social technology means that we are witnessing something new: information pollution at a global scale; a complex web of motivations for creating, disseminating and consuming these ‘polluted’ messages; a myriad of content types and techniques for amplifying content; innumerable platforms hosting and reproducing this content; and breakneck speeds of communication between trusted peers.

The direct and indirect impacts of information pollution are difficult to quantify. We are only at the earliest stages of understanding their implications. Since the results of the ‘Brexit’ vote in the UK, Donald Trump’s victory in the US and Kenya’s recent decision to nullify its national election result, there has been much discussion of how information disorder is influencing democracies. More concerning, however, are the long-term implications of dis-information campaigns designed specifically to sow mistrust and confusion and to sharpen existing socio-cultural divisions using nationalistic, ethnic, racial and religious tensions.

So, how do we begin to address information pollution? To effectively tackle the problems of mis-, dis- and mal-information, we need to work together on the following fronts:

- Definitions. Think more critically about the language we use so we can effectively capture the complexity of the phenomenon;

- Implications for democracy. Properly investigate the implications for democracy when false or misleading information circulates online;

- Role of television. Illuminate the power of the mainstream media, and in particular television, in the dissemination and amplification of poor-quality information that originates online;

- Implications of weakened local media. Understand how the collapse of local journalism has enabled mis- and dis-information to take hold, and find ways to support local journalism;

- Micro-targeting. Discern the scale and impact of campaigns that use demographic profiles and online behavior to micro-target fake or misleading information[1];

- Computational amplification. Investigate the extent to which influence is bought through digital ‘astroturfing’—the use of bots and cyborgs to manipulate the outcome of online petitions, change search engine results and boost certain messages on social media;

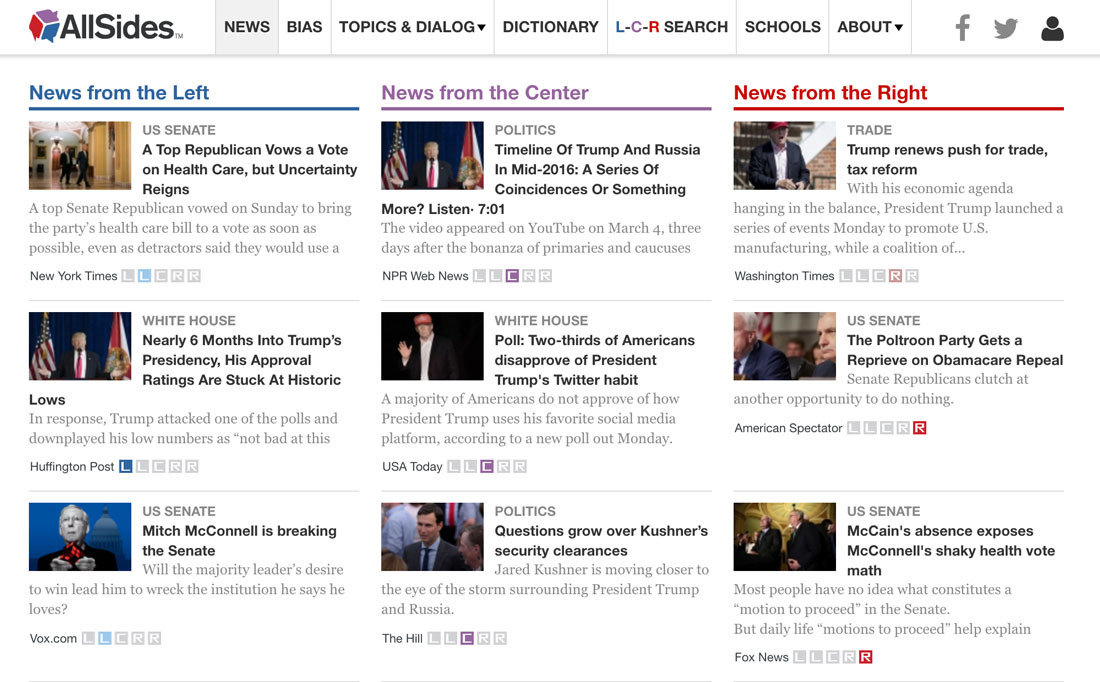

- Filter bubbles and echo chambers. Consider the implications of the filter bubbles and echo chambers that have emerged because of media fragmentation, both offline (mediated via partisan talk radio and cable news) and online (mediated via hyper-partisan websites, algorithmically derived feeds on social networks and radical communities on WhatsApp, Reddit and 4chan)[2];

- Declining trust in evidence. Understand the implications of different communities failing to share a sense of reality based on facts and expertise.

In this report, we refrain from using the term ‘fake news’, for two reasons. First, it is woefully inadequate to describe the complex phenomena of information pollution. Second, the term has begun to be appropriated by politicians around the world to describe news organisations whose coverage they find disagreeable. In this way, it is becoming a mechanism by which the powerful can clamp down upon, restrict, undermine and circumvent the free press.

We therefore introduce a new conceptual framework for examining information disorder, identifying the three different types: mis-, dis- and mal-information. Using the dimensions of harm and falseness, we describe the differences between these three types of information:

- Mis-information is when false information is shared, but no harm is meant.

- Dis-information is when false information is knowingly shared to cause harm.

- Mal-information is when genuine information is shared to cause harm, often by moving information designed to stay private into the public sphere.

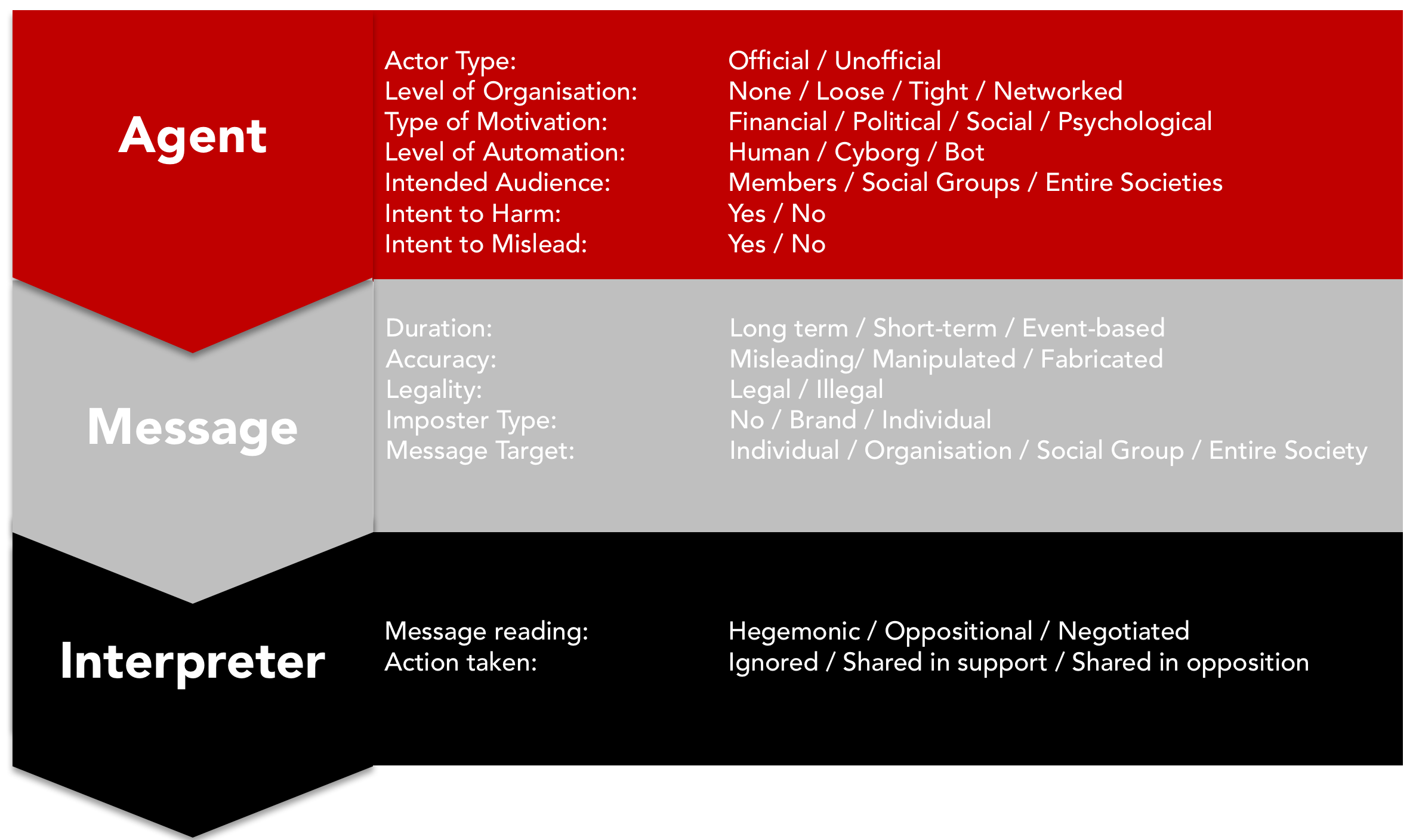

We also argue that we need to separately examine the ‘elements’ (agents, messages and interpreters) of information disorder. In this matrix we pose questions that need to be asked of each element.

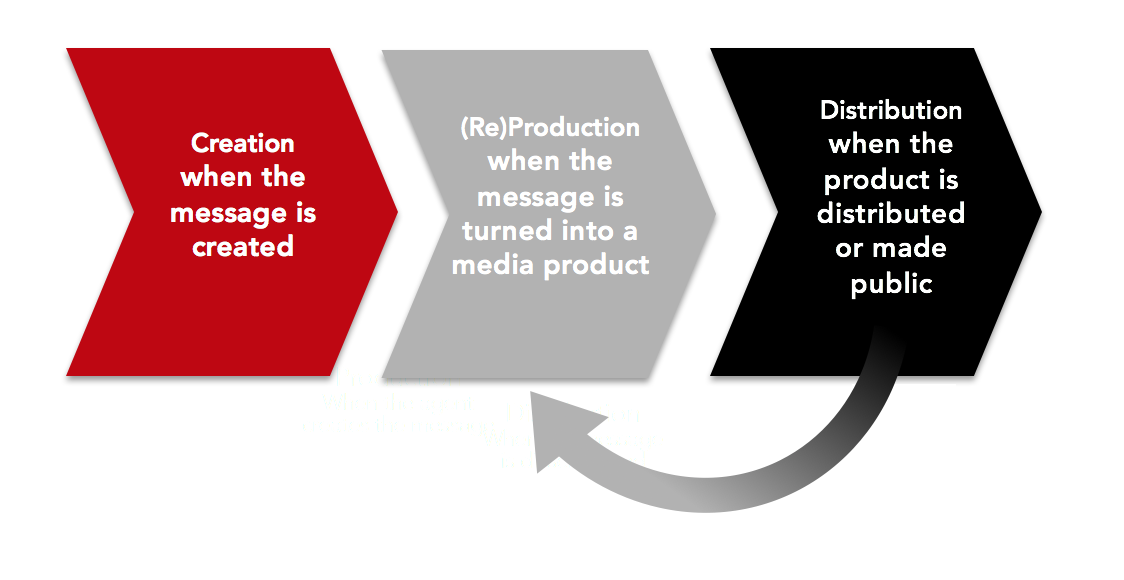

We also emphasise the need to consider the three different ‘phases’ (creation, production, distribution) of information disorder.

As we explain, the ‘agent’ who creates a fabricated message might be different from the agent who produces that message, who might also be different from the ‘agent’ who distributes the message. We therefore need a thorough understanding of who these agents are and what motivates them.

We must also understand the different types of messages being distributed by agents, so that we can start estimating the scale of each and addressing them. (The debate to date has been overwhelmingly focused on fabricated text news sites, when visual content is just as widespread and much harder to identify and debunk.)

Finally, we need to examine how mis-, dis- and mal-information are being consumed, interpreted and acted upon. Are they being re-shared as the original agent intended? Or are they being re-shared with an oppositional message attached? Are these rumours continuing to travel online, or do they move offline into personal conversations, which are difficult to capture?

A key argument within this report, which draws from the work of the scholar James Carey, is that we need to understand the ritualistic function of communication. Rather than simply thinking about communication as the transmission of information from one person to another, we must recognize that communication plays a fundamental role in representing shared beliefs. It is not just information, but drama — “a portrayal of the contending forces in the world.”[3]

The most ‘successful’ problematic content is that which plays on people’s emotions, encouraging feelings of superiority, anger or fear. That is because these emotions drive re-sharing among people who want to connect with their online communities and ‘tribes’.

When most social platforms are engineered for people to publicly ‘perform’ through likes, comments or shares, it’s easy to understand why emotional content travels so quickly and widely, even as we see an explosion in fact-checking and debunking organizations.

In addition to our conceptual framework, we provide a round-up of related research, reports and practical initiatives connected to the topic of information disorder, as well as filter bubbles and echo chambers. We examine solutions that have been rolled out by the social networks and consider ideas for strengthening existing media, news literacy projects and regulation. We also introduce some key future trends, particularly in terms of the rise of closed messaging apps and the implications of artificial intelligence technology for manufacturing as well as detecting dis-information.

The report ends with thirty-four recommendations, targeted at technology companies, national governments, media organisations, civil society, education ministries and funding bodies, which are explained in detail after the report’s conclusions.

What could technology companies do?

- Create an international advisory council.

- Provide researchers with the data related to initiatives aimed at improving public discourse.

- Provide transparent criteria for any algorithmic changes that down-rank content.

- Work collaboratively.

- Highlight contextual details and build visual indicators.

- Eliminate financial incentives.

- Crack down on computational amplification.

- Adequately moderate non-English content.

- Pay attention to audio/visual forms of mis- and dis-information.

- Provide metadata to trusted partners.

- Build fact-checking and verification tools.

- Build ‘authenticity engines’.

- Work on solutions specifically aimed at minimising the impact of filter bubbles:

a. Let users customize feed and search algorithms.

b. Diversify exposure to different people and views.

c. Allow users to consume information privately.

d. Change the terminology used by the social networks.

What could national governments do?

- Commission research to map information disorder.

- Regulate ad networks.

- Require transparency around Facebook ads.

- Support public service media organisations and local news outlets.

- Roll out advanced cybersecurity training.

- Enforce minimum levels of public service news on to the platforms.

What could media organisations do?

- Collaborate.

- Agree policies on strategic silence.

- Ensure strong ethical standards across all media.

- Debunk sources as well as content.

- Produce more news literacy segments and features.

- Tell stories about the scale and threat posed by information disorder.

- Focus on improving the quality of headlines.

- Don’t disseminate fabricated content.

What could civil society do?

- Educate the public about the threat of information disorder.

- Act as honest brokers.

What could education ministries do?

- Work internationally to create a standardized news literacy curriculum.

- Work with libraries.

- Update journalism school curricula.

What could funding bodies do?

- Provide support for testing solutions.

- Support technological solutions.

- Support programs teaching people critical research and information skills.

Introduction

Rumours, conspiracy theories and fabricated information are far from new.[4] Politicians have forever made unrealistic promises during election campaigns. Corporations have always nudged people away from thinking about issues in particular ways. And the media has long disseminated misleading stories for their shock value. However, the complexity and scale of information pollution in our digitally connected world presents an unprecedented challenge.

While it is easy to dismiss the sudden focus on this issue because of the long and varied history of mis- and dis-information[5], we argue that there is an immediate need to seek workable solutions for the polluted information streams that are now characteristic of our modern, networked and increasingly polarised world.

It is also important to underline from the outset that, while much of the contemporary furor about mis-information has focused on its political varieties, ‘information pollution’[6] contaminates public discourse on a range of issues. For example, medical mis-information has always posed a worldwide threat to health, and research has demonstrated how incorrect treatment advice is perpetuated through spoken rumours[7], tweets[8], Google results[9] and Pinterest boards[10]. Furthermore, in the realm of climate change, a recent study examined the impact of exposure to climate-related conspiracy theories. It found that exposure to such theories created a sense of powerlessness, resulting in disengagement from politics and a reduced likelihood that people would make small changes to reduce their carbon footprint.[11]

In this report, we hope to provide a framework for policy-makers, legislators, researchers, technologists and practitioners working on challenges related to mis-, dis- and mal-information—which together we call information disorder.

But first, how did we get to this point? Certainly, the 2016 US Presidential election led to an immediate search for answers from those who had not considered the possibility of a Trump victory—namely the major news outlets, pundits and pollsters. And while the US election result was caused by an incredibly complex set of factors – socio-economic, cultural, political and technological – there was a desire for simple explanations, and the idea that fabricated news sites could provide those explanations drove a frenzied period of reporting, conferences and workshops.[12]

Reporting by BuzzFeed News’ Craig Silverman provided an empirical framework for these discussions, offering evidence that the most popular of these fabricated stories were shared more widely than the most popular stories from the mainstream media: “In the final three months of the US presidential campaign, 20 top-performing false election stories from hoax sites and hyper-partisan blogs generated 8,711,000 shares, reactions, and comments on Facebook. Within the same time period, the 20 best-performing election stories from 19 major news websites generated a total of 7,367,000 shares, reactions, and comments on Facebook.”[13]

In addition, research on referral data shows that “fake news” stories relied heavily on social media for traffic during the election[14]. Only 10.1% of traffic to the top news sites came from social media, compared with 41.8% for ‘fake news sites’. (Other traffic referral types were direct browsing, other links and search engines.)

While we know that mis-information is not new, the emergence of the internet and social technology has brought about fundamental changes to the way information is produced, communicated and distributed. Characteristics of the modern information environment include:

a) Widely accessible, cheap and sophisticated editing and publishing technology has made it easier than ever for anyone to create and distribute content;

b) Information consumption, which was once private, has become public because of social media;

c) The speed at which information is disseminated has been supercharged by an accelerated news cycle and mobile handsets;

d) Information is passed in real time between trusted peers, and any piece of information is far less likely to be challenged.

As Frederic Filloux explained: “What we see unfolding right before our eyes is nothing less than Moore’s Law applied to the distribution of mis-information: an exponential growth of available technology coupled with a rapid collapse of costs.”[15]

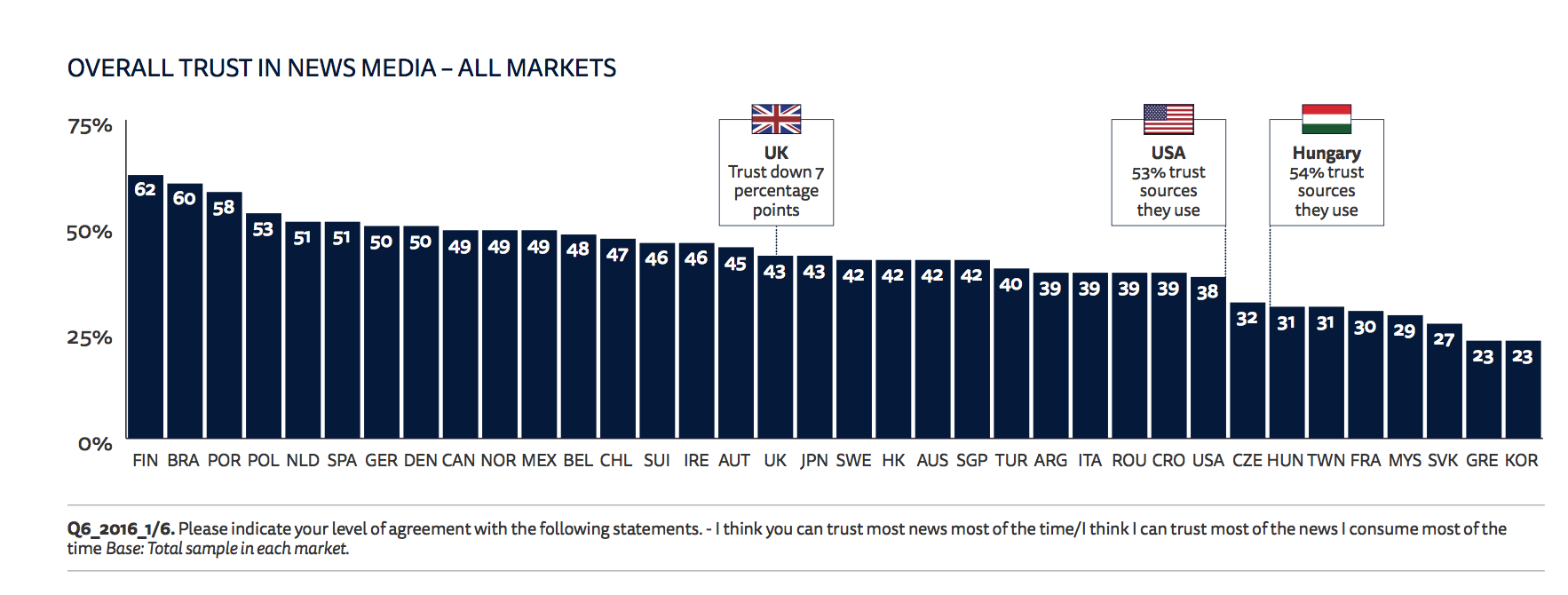

A study conducted in eighteen countries by the BBC World Service in September 2017 found that 79% of respondents said they worried about what was fake and what was real on the internet.[16] Brazilians were most troubled, with 92% of respondents from that country expressing some concern about the issue. The least concerned were Germans, among whom 51% of respondents indicated that they were worried. Unfortunately, we don’t have similar data from previous years to understand whether concern has increased in light of recent discussions about the phenomenon. Nonetheless, when the purpose of dis-information campaigns is to sow mistrust and confusion about which sources of information are authentic, it is important that we continue to track attitudes about the information people source from the internet.

Another critical point is that popular social networks make it difficult for people to judge the credibility of any message, because posts from publications as unlike as the New York Times and a conspiracy site look nearly identical. This means that people are increasingly reliant on friends and family members to guide them through the information ecosystem. As Messing and Westwood have argued, “social media has had two effects: by collating stories from multiple sources, the focus is on the story, and not on the source; secondly, endorsements and social recommendations guide readership”[17] rather than traditional gatekeepers or ingrained reading habits.

We now spend twice as much time online each day as we did in 2008. During that protracted amount of time, we consume incredible amounts of information[18] and inevitably make mistakes. Recent research by Filippo Menczer and colleagues shows that we are so inundated with information that we share untruths. Parsing information and judging the credibility of sources on Facebook or other social platforms will require our brains to adapt with new cognitive strategies for processing information. But Facebook is only 13 years old.[19]

Social networks are driven by the sharing of emotional content. The architecture of these sites is designed such that every time a user posts content—and it is liked, commented upon or shared further— their brain releases a tiny hit of dopamine. As social beings, we intuit the types of posts that will conform best to the prevailing attitudes of our social circle.[20] And so, on this issue of information disorder, this performative aspect of how people use social networks is critical to understanding how mis- and dis-information spreads.

However, we must also recognize the role of television in spreading dis-information.[21] While much has been written about the growing influence of Sputnik and Russia Today[22], as well as the latter’s new youth channel, In the Now, the unintentional amplification of dis-information by the mainstream media across the world needs to be acknowledged. From the New York Times’ inaccurate reporting on Iraq’s weapons of mass destruction, to the wall-to-wall coverage of Hillary Clinton’s leaked emails (now known to have been obtained by Russian hackers), or the almost daily amplification of Trump’s tweets (some including information from conspiracy sites[23]), getting the mainstream media to amplify rumour and dis-information is the ultimate goal of those who seek to manipulate. Without amplification, dis-information goes nowhere.

It is within this context that we have to study information disorder. These technology platforms are not neutral communication pipelines. They cannot be, as they are inherently social, driven by billions of humans sharing words, images, videos and memes that affirm their positions in their own real-life social networks.

The shock of the Brexit referendum, the US election, Le Pen reaching the run-off vote in the French election and the overturning of the Kenyan election have been used as examples of the potential power of systematic dis-information campaigns. However, empirical data about the exact influence of such campaigns does not exist.

As danah boyd argues about recent responses to fears about mis- and dis-information, “It’s part of a long and complicated history, and it sheds light on a variety of social, economic, cultural, technological, and political dynamics that will not be addressed through simplistic solutions.”[24] Certainly, we have to look for explanations for how societies, particularly in the West, have become so segregated in terms of age, race, religion, class and politics.[25]

We also need to recognize the impact of factors such as the collapse of the welfare state, the failure of democratic institutions to provide public services, climate change and miscalculated foreign interventions. We cannot see the phenomenon of mis- and dis-information in isolation, but must consider its impact amid the new-media ecosystem. This ecosystem is dominated by increasingly partisan radio, television and social media; exaggerated emotional articulations of the world; quick delivery via algorithmically derived feeds on smartphones; and audiences that skim headlines to cope with the floods of information before them.

Making sense of mis-, dis- and mal-information as a type of information disorder, and learning how it works, is a necessity for open democracies. Likewise, neglecting to understand the structural reasons for its effectiveness is a grave mistake.

Communication as Ritual

One of the most important communication theorists, James Carey, compared two ways of viewing communication – transmission and ritual – in his book Communication as Culture: Essays on Media and Society.[26]

Carey wrote, “The transmission view of communication is the commonest in our culture—perhaps in all industrial cultures… It is defined by terms such as ‘imparting,’ ‘sending,’ ‘transmitting,’ or ‘giving information to others.’”[27] The ‘ritual view of communication’, by contrast, is not about “the act of imparting information but the representation of shared beliefs.”

Under a transmission view of communication, one sees the newspaper as an instrument for disseminating knowledge. Questions arise as to its effects on audiences—as enlightening or obscuring reality, as changing or hardening attitudes or as breeding credibility or doubt. However, a ritual view of communication does not consider the act of reading a newspaper to be driven by the need for new information. Rather, it likens it to attending a church service. It’s a performance in which nothing is learned, but a particular view of the world is portrayed and confirmed. In this way, news reading and writing is a ritualistic and dramatic act.[28]

In this report, we pay close attention to social and psychological theories that help to make sense of why certain types of dis-information are widely consumed and shared. Considering information consumption and dissemination merely from the transmission view is unhelpful as we try to understand information disorder.

Four Key Points

The term ‘fake news’ and the need for definitional rigour

Before we continue, a note on terminology. One depressing aspect of the past few months is that, while they have produced an astonishing number of reports, books, conferences and events, they have yielded little other than funding opportunities for research and the development of tools. One key reason for this stagnation, we argue, is an absence of definitional rigour, which has resulted in a failure to recognize the diversity of mis- and dis-information, whether of form, motivation or dissemination.

As researchers like Claire Wardle[29], Ethan Zuckerman[30], danah boyd[31] and Caroline Jack[32] and journalists like the Washington Post’s Margaret Sullivan[33] have argued, the term ‘fake news’ is woefully inadequate to describe the complex phenomena of mis- and dis-information. As Zuckerman states, “It’s a vague and ambiguous term that spans everything from false balance (actual news that doesn’t deserve our attention), propaganda (weaponized speech designed to support one party over another) and disinformatzya (information designed to sow doubt and increase mistrust in institutions).”[34]

A study by Tandoc et al., published in August 2017, examined 34 academic articles that used the term ‘fake news’ between 2003 and 2017.[35] The authors noted that the term has been used to describe a number of different phenomena over the past 15 years: news satire, news parody, fabrication, manipulation, advertising and propaganda. Indeed, the term has a long history that predates President Trump’s recent obsession with the phrase.

The term “fake news” has also begun to be appropriated by politicians around the world to describe news organisations whose coverage they find disagreeable. In this way, it’s becoming a mechanism by which the powerful can clamp down upon, restrict, undermine and circumvent the free press. It’s also worth noting that the term and its visual derivatives (e.g., the red ‘FAKE’ stamp) have been even more widely appropriated by websites, organisations and political figures identified as untrustworthy by fact-checkers to undermine opposing reporting and news organizations.[36] We therefore do not use the term in this report and argue that the term should not be used to describe this phenomenon.

Many have offered new definitional frameworks in attempts to better reflect the complexities of mis- and dis-information. Facebook defined a few helpful terms in its paper on information operations:

- Information (or Influence) Operations. Actions taken by governments or organized non-state actors to distort domestic or foreign political sentiment, most frequently to achieve a strategic and/or geopolitical outcome. These operations can use a combination of methods, such as false news, dis-information or networks of fake accounts aimed at manipulating public opinion (false amplifiers).

- False News. News articles that purport to be factual, but contain intentional misstatements of fact to arouse passions, attract viewership or deceive.

- False Amplifiers. Coordinated activity by inauthentic accounts that has the intent of manipulating political discussion (e.g., by discouraging specific parties from participating in discussion or amplifying sensationalistic voices over others).

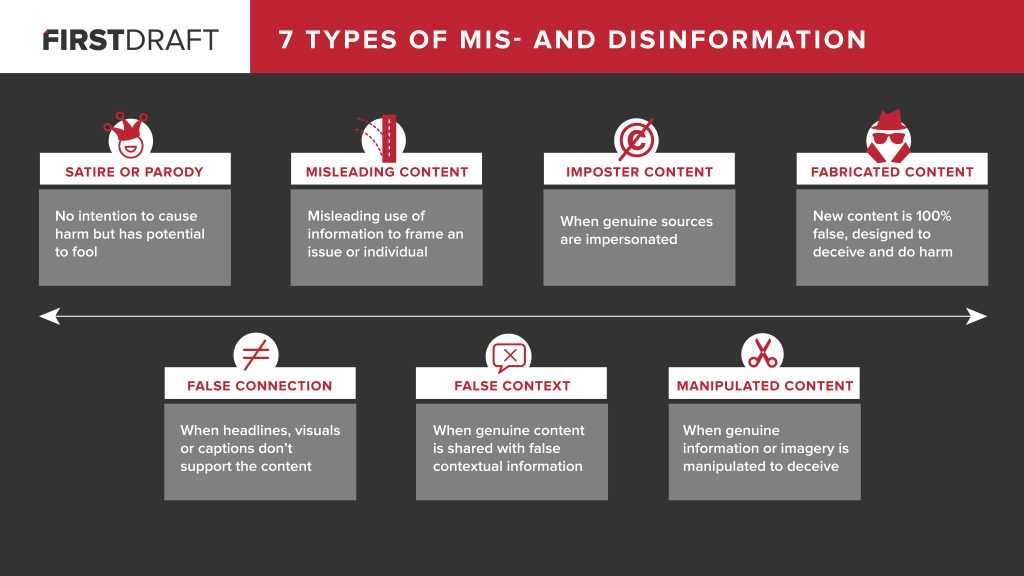

In ‘Fake News. It’s Complicated’, Wardle outlines seven types of mis- and dis-information, revealing the wide spectrum of problematic content online, from satire and parody (which, while a form of art, can become mis-information when audiences misinterpret the message) to full-blown fabricated content.

Figure 1: 7 Types of Mis- and Dis-information (Credit: Claire Wardle, First Draft)

While these seven classifications are helpful in encouraging people to see beyond the infamous ‘Pope endorses Trump’-type news sites that received so much attention after the US election, the phenomenon requires an even more nuanced conceptual framework, particularly one that highlights the impact of visuals in perpetuating dis-information. We have therefore created such a framework, and we use it as the organizing structure for this report.

While we work through terms and descriptions, it is important that we recognise the value of shared definitions. As Caroline Jack argued in the introduction to her recent report, Lexicon of Lies, for Data & Society:

“Journalists, commentators, policymakers, and scholars have a variety of words at their disposal — propaganda, dis-information, mis-information, and so on — to describe the accuracy and relevance of media content. These terms can carry a lot of baggage. They have each accrued different cultural associations and historical meanings, and they can take on different shades of meaning in different contexts. These differences may seem small, but they matter. The words we choose to describe media manipulation can lead to assumptions about how information spreads, who spreads it, and who receives it. These assumptions can shape what kinds of interventions or solutions seem desirable, appropriate, or even possible.”[37]

Visuals, Visuals, Visuals

As well as the other problematic aspects of the popular term ‘fake news’ outlined above, it has also allowed the debate to be framed as a textual problem. The focus on fabricated news ‘sites’ means that the implications of misleading, manipulated or fabricated visual content, whether an image, a visualization, a graphic or a video, are rarely considered. The solutions by the technology companies have been aimed squarely at articles. Admittedly, that is because natural language processing is more advanced, and text is therefore easier to analyse computationally, but framing the debate as ‘fake news’ has not helped.

As we describe in this report, visuals can be far more persuasive than other forms of communication[38], which can make them much more powerful vehicles for mis- and dis-information. In addition, over the past couple of months, we’ve been confronted with the technological reality that relatively short audio or video clips of someone can act as very powerful ‘training data’, allowing for the creation of completely fabricated audio or video files that make it appear that someone has said something they have not.[39]

Source-Checking vs Fact-Checking

There is much discussion of fact-checking in this report. There has been an explosion of projects and initiatives around the world, and this emphasis on providing additional context to public statements is a very positive development. Many of these organisations are focused on authenticating official sources: politicians, reports by think tanks or news reports (European fact-checking organisations are listed in Appendix A). But in this age of dis-information, where we are increasingly seeing information created by unofficial sources (from social media accounts we don’t know, or websites which have only recently appeared), we argue that we need to be doing source-checking as well as fact-checking.

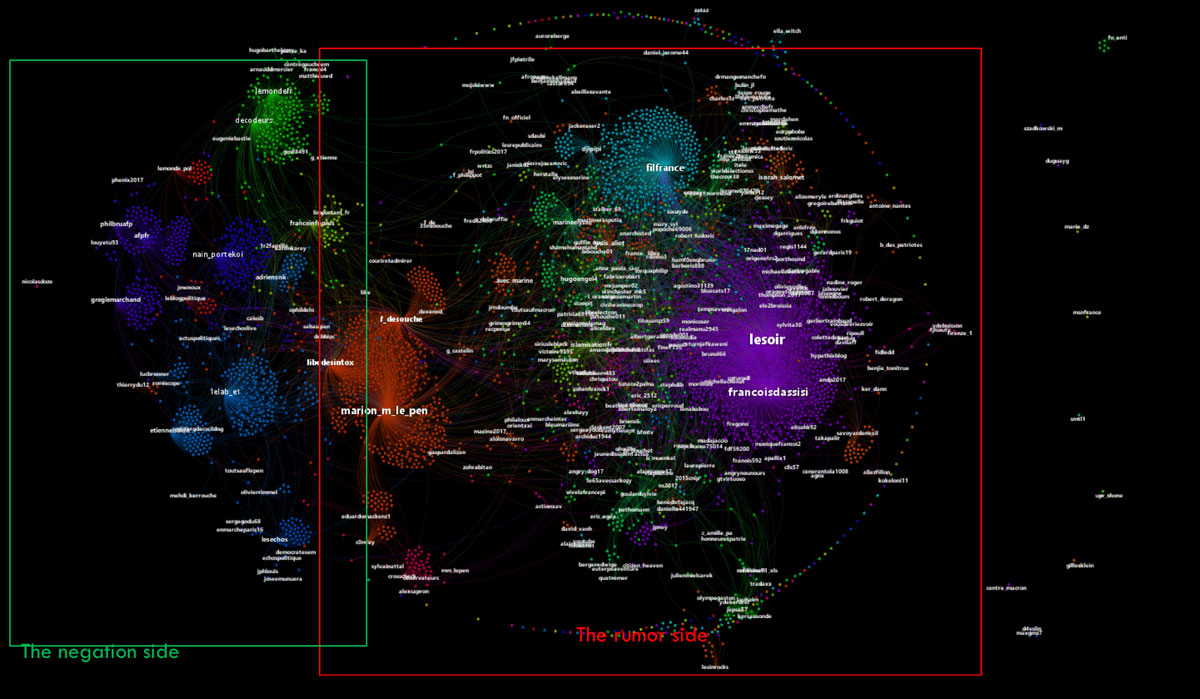

Increasingly, when assessing the credibility of a piece of information, the source who originally created or first shared the content can provide the strongest evidence about whether it is accurate. Newsrooms, and people relying on social media for information, need to investigate the source, almost before they look at the content itself. For example, people should routinely check the registration date and location in the domain registration records of a supposed ‘news site’ to see whether it was created two weeks ago in Macedonia. Similarly, people should instinctively check whether a particular tweeted message has appeared elsewhere, as it could be that the same message was tweeted out by ten different accounts at exactly the same time, and six of them were located in other countries. Newsrooms in particular need more powerful tools to be able to visually map online networks and connections to understand how dis-information is being created, spread and amplified.
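The second check described above, spotting one message pushed out by many accounts at almost the same moment, is simple enough to sketch. The Python below is a minimal illustration under stated assumptions, not a tool used by First Draft or any newsroom: the `Post` record, the `find_coordinated_posts` function, the 60-second window and the five-account threshold are all hypothetical, and real posts would first have to be collected from a platform.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    # Hypothetical record; real data would come from a platform export.
    account: str
    country: str
    text: str
    timestamp: int  # seconds since the Unix epoch


def normalise(text):
    """Lower-case and collapse whitespace so trivially altered copies still match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_coordinated_posts(posts, window_seconds=60, min_accounts=5):
    """Flag identical messages posted by at least `min_accounts` distinct
    accounts within some window of `window_seconds` (assumed thresholds)."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalise(post.text)].append(post)

    flagged = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        best_accounts, best_burst = set(), []
        for i, first in enumerate(group):
            # Every copy posted within the window that opens at this copy.
            burst = [p for p in group[i:]
                     if p.timestamp - first.timestamp <= window_seconds]
            accounts = {p.account for p in burst}
            if len(accounts) > len(best_accounts):
                best_accounts, best_burst = accounts, burst
        if len(best_accounts) >= min_accounts:
            countries = sorted({p.country for p in best_burst})
            flagged.append((text, sorted(best_accounts), countries))
    return flagged
```

For instance, ten accounts posting the same headline within a few seconds of each other would be returned as one group, together with the countries those accounts report. Matching on normalised text only catches copy-and-paste campaigns; re-worded messages would need a fuzzier similarity measure, and a flagged group is a prompt for further source-checking rather than proof of coordination.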

Strategic Silence

Newsrooms also need more powerful tools to help them understand how dis-information moves across communities. In election monitoring projects that First Draft has been involved with in France, the UK and Germany, Newswhip (a platform which helps newsrooms discover content before it goes viral) was used to monitor whether a piece of misleading, manipulated or fabricated content was predicted to be shared widely. Newswhip’s prediction algorithm shows the user how many social interactions a piece of content has received at any given moment and predicts how many interactions it will have twenty-four hours later. First Draft used this technology to inform decisions about which stories to debunk and which to ignore. If certain stories, rumours or visual content, however problematic, were not gaining traction, a decision was made not to give that information additional oxygen. The media needs to consider that publishing debunks can cause more harm than good, especially as agents behind dis-information campaigns see media amplification as a key technique for success. Debunks themselves can be considered a form of engagement. The news industry needs to come together to think about the implications of this type of reporting and about the philosophical and practical aspects of incorporating these ideas related to strategic silence.
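The debunk-or-ignore decision described above can be illustrated with a deliberately simple rule. Newswhip’s actual prediction model is not described in this report, so the sketch below swaps in a naive assumption: interactions keep arriving at the average hourly rate observed so far. The function names and the 10,000-interaction threshold are hypothetical.

```python
def projected_interactions(current: int, hours_elapsed: float, horizon_hours: float = 24.0) -> float:
    """Naive projection (assumption): interactions continue at the average rate so far."""
    if hours_elapsed <= 0:
        raise ValueError("hours_elapsed must be positive")
    if hours_elapsed >= horizon_hours:
        return float(current)  # horizon already reached; nothing left to project
    rate_per_hour = current / hours_elapsed
    return rate_per_hour * horizon_hours


def should_debunk(current: int, hours_elapsed: float, threshold: float = 10_000) -> bool:
    """Debunk only content projected to reach `threshold` interactions within 24 hours;
    below that, stay strategically silent rather than give the story more oxygen."""
    return projected_interactions(current, hours_elapsed) >= threshold


# A story with 1,000 interactions after 2 hours projects to 12,000 and would be debunked;
# one with 100 interactions after 4 hours projects to 600 and would be left alone.
print(should_debunk(1_000, 2), should_debunk(100, 4))
```

Viral spread is rarely linear, so a constant-rate projection will underestimate content that is accelerating; the point of the sketch is only the shape of the decision, a projection compared against a threshold.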

The Report

The report starts with a new conceptual framework for talking about information disorder, including three types, three phases and three elements. We then consider the specific challenges of filter bubbles and echo chambers, before moving on to examine the solutions that have been put in place to date (including those by the technology companies, education initiatives, the media and regulatory bodies). We end the report with a look at future trends, before wrapping up with some conclusions and additional details about the thirty-four recommendations we are proposing.

Part 1: Conceptual Framework

Our conceptual framework has three components, each of which is also broken down into three parts:

- The Three Types of Information Disorder: Dis-information, Mis-information and Mal-information

- The Three Phases of Information Disorder: Creation, Production and Distribution

- The Three Elements of Information Disorder: Agent, Message and Interpreter

The Three Types of Information Disorder

Much of the discourse on ‘fake news’ conflates three notions: mis-information, dis-information and mal-information. But it’s important to distinguish messages that are true from those that are false, and messages that are created, produced or distributed by “agents” who intend to do harm from those that are not:

- Dis-information. Information that is false and deliberately created to harm a person, social group, organization or country.

- Mis-information. Information that is false, but not created with the intention of causing harm.

- Mal-information. Information that is based on reality, used to inflict harm on a person, organization or country.

Figure 2: Examining how mis-, dis- and mal-information intersect around the concepts of falseness and harm. We include some types of hate speech and harassment under the mal-information category, as people are often targeted because of their personal history or affiliations. While the information can sometimes be based on reality (for example targeting someone based on their religion) the information is being used strategically to cause harm.
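Because the typology rests on two questions, whether the information is false and whether it is intended to harm, it can be expressed as a small decision rule. This is a simplified sketch: in practice both falseness and intent are matters of degree and are often hard to establish, and the fourth outcome (true and not intended to harm) is our labelling assumption for content that falls outside the three types. The names `InfoType` and `classify` are hypothetical.

```python
from enum import Enum


class InfoType(Enum):
    DIS = "dis-information"   # false and intended to harm
    MIS = "mis-information"   # false, no intent to harm
    MAL = "mal-information"   # based on reality, used to harm
    NONE = "not information disorder"  # assumption: true and not used to harm


def classify(is_false: bool, intends_harm: bool) -> InfoType:
    """Map the two dimensions of falseness and harm onto the three types."""
    if is_false and intends_harm:
        return InfoType.DIS
    if is_false:
        return InfoType.MIS
    if intends_harm:
        return InfoType.MAL
    return InfoType.NONE


# The leaked Macron emails were real but released to cause harm.
print(classify(is_false=False, intends_harm=True).value)
```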

The 2017 French Presidential election[40] provides examples that illustrate all three types of information disorder.

1) Examples of dis-information:

One of the most high-profile hoaxes of the campaign was the creation of a sophisticated duplicate version of the Belgian newspaper Le Soir, with a false article claiming that Macron was being funded by Saudi Arabia.[41] Another example was the circulation of documents online falsely claiming that Macron had opened an offshore bank account in the Bahamas.[42] Finally, dis-information circulated via ‘Twitter raids’, in which loosely connected networks of individuals simultaneously took to Twitter with identical hashtags and messages to spread rumours about Macron (e.g., that he was in a relationship with his step-daughter).

2) Examples of mis-information:

The attack on the Champs-Élysées on 20 April 2017 inspired a great deal of mis-information[43], as is the case in almost all breaking news situations. Individuals on social media unwittingly published a number of rumours, for example, that a second policeman had been killed. The people sharing this type of content are rarely doing so to cause harm. Rather, they are caught up in the moment, trying to be helpful, and fail to adequately inspect the information they are sharing.

3) Examples of mal-information:

One striking example of mal-information occurred when Emmanuel Macron’s emails were leaked the Friday before the run-off vote on 7 May. The information contained in the emails was real, although Macron’s campaign allegedly included false information to diminish the impact of any potential leak.[44] However, by releasing private information into the public sphere minutes before the media blackout in France, the leak was designed to cause maximum harm to the Macron campaign.

In this report, our primary focus is mis- and dis-information, as we are most concerned about the spread of false information and content. However, we believe it’s important to consider this third type of information disorder and think about how it relates to the other two categories. That said, hate speech, harassment and leaks raise a significant number of distinct issues, and there is not space in this report to consider those as well. The research institute Data & Society is doing particularly good work on mal-information and we would recommend reading their report Media Manipulation and Disinformation Online.[45]

The Phases and Elements of Information Disorder

In trying to understand any example of information disorder, it is useful to consider its three elements:

- Agent. Who were the ‘agents’ that created, produced and distributed the example, and what was their motivation?

- Message. What type of message was it? What format did it take? What were the characteristics?

- Interpreter. When the message was received by someone, how did they interpret the message? What action, if any, did they take?

Figure 3: The Three Elements of Information Disorder

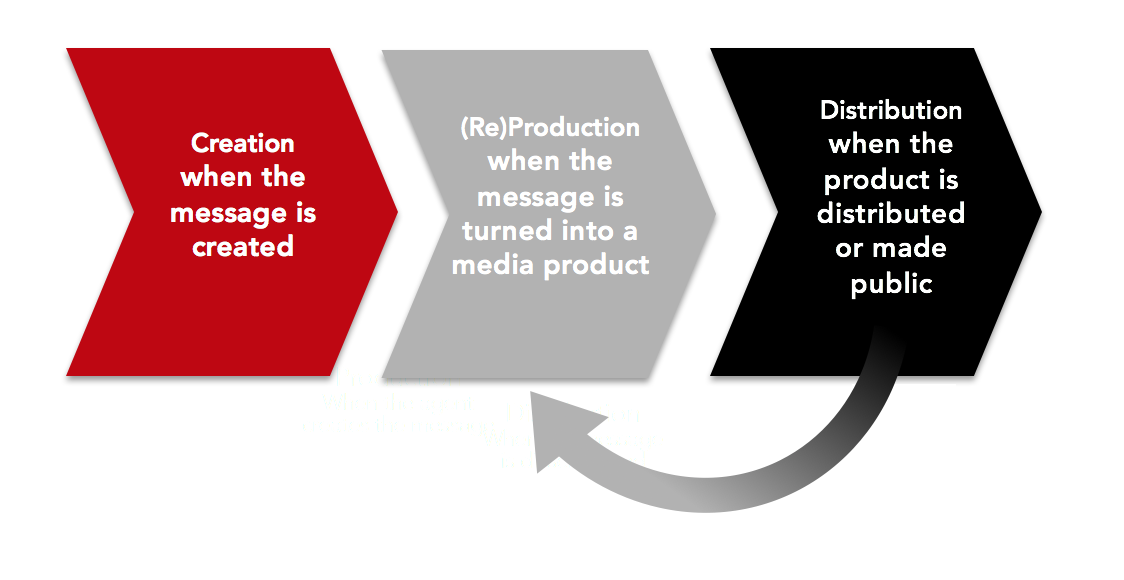

We argue that it is also productive to consider the life of an example of information disorder as having three phases:

- Creation. The message is created.

- Production. The message is turned into a media product.

- Distribution. The message is distributed or made public.

Figure 4: The Three Phases of Information Disorder

In particular, it’s important to consider the different phases of an instance of information disorder alongside its elements, because the agent that creates the content is often fundamentally different from the agent who produces it. For example, the motivations of the mastermind who ‘creates’ a state-sponsored dis-information campaign are very different from those of the low-paid ‘trolls’ tasked with turning the campaign’s themes into specific posts. And once a message has been distributed, it can be reproduced and redistributed endlessly, by many different agents, all with different motivations. For example, a social media post can be distributed by several communities, leading its message to be picked up and reproduced by the mainstream media and further distributed to still other communities. Only by dissecting information disorder in this manner can we begin to understand these nuances.

In the next two sections, we will examine these elements and phases of information disorder in more detail.

The Three Phases of Information Disorder

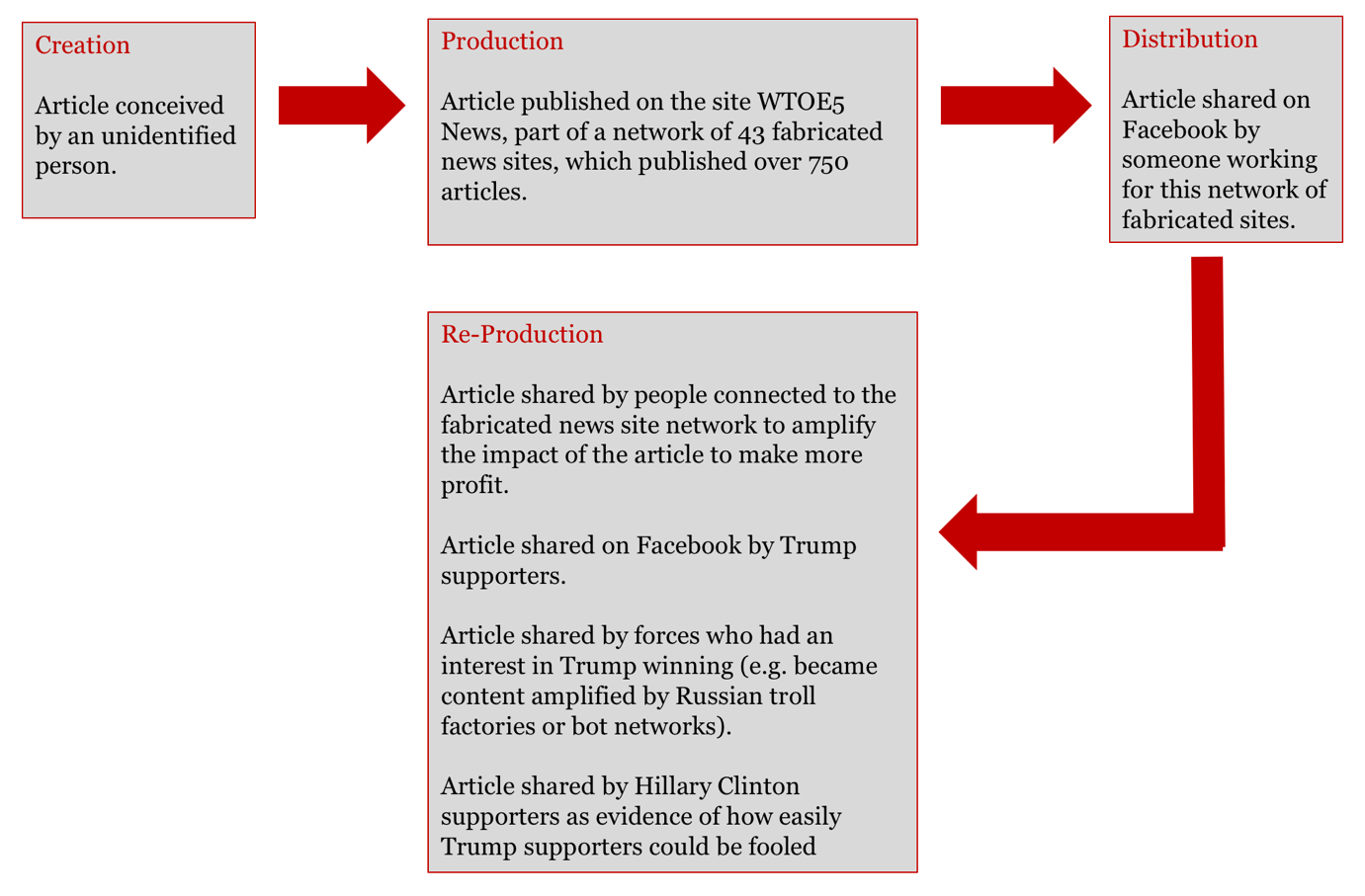

To examine how the phases of creation, production and distribution help us understand information disorder, let’s use the example of the article ‘Pope Francis Shocks World, Endorses Donald Trump for President, Releases Statement’ published on the self-proclaimed fantasy news site WTOE 5 in July 2016. For an in-depth analysis of this article and the network of sites connected to it, we would recommend reading ‘The True Story Behind The Biggest Fake News Hit Of The Election’ from Buzzfeed.[46]

Figure 5: Screenshot of the fabricated news article published in July 2016 on WTOE5News.com (The site no longer exists).

If we think about the three phases in this example, we can see how different agents were involved in creating the impact of this content.

Figure 6: Using the example of the ‘Pope Francis Shocks World, Endorses Donald Trump for President, Releases Statement’ fabricated news article to test the Three Phases of Information Disorder

The role of the mainstream media as agents in amplifying (intentionally or not) fabricated or misleading content is crucial to understanding information disorder. Fact-checking has always been fundamental to quality journalism, but the techniques used by hoaxers and those attempting to disseminate dis-information have never been this sophisticated. With newsrooms increasingly relying on the social web for story ideas and content, forensic verification skills and the ability to identify networks of fabricated news websites and bots are more important than ever before.

The Three Elements of Information Disorder

The Agent

Agents are involved in all three phases of the information chain – creation, production and distribution – and have various motivations. Importantly, the characteristics of agents can vary from phase to phase.

We suggest seven questions to ask about an agent:

1) What type of actor are they?

Agents can be official, such as intelligence services, political parties or news organizations. They can also be unofficial, such as groups of citizens who have become evangelized about an issue.

2) How organized are they?

Agents can work individually, in longstanding, tightly-organized organizations (e.g., PR firms or lobbying groups) or in impromptu groups organized around common interests.

3) What are their motivations?

There are four potential motivating factors:

- Financial: Profiting from information disorder through advertising

- Political: Discrediting a political candidate in an election and other attempts to influence public opinion

- Social: Connecting with a certain group online or off

- Psychological: Seeking prestige or reinforcement

4) Which audiences do they intend to reach?

Different agents might have different audiences in mind. These audiences can vary from an organization’s internal mailing lists or consumers, to social groups based on socioeconomic characteristics, to an entire society.

5) Is the agent using automated technology?

It has become much easier and, crucially, cheaper to automate the creation and dissemination of messages online. There is much discussion about how to define a bot. One popular definition, from the Oxford Internet Institute, is an account that posts more than 50 times a day on average. Such accounts are often automated, but could conceivably be operated by people. Other accounts, known as cyborgs, are operated jointly by software and people.
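The posting-frequency definition above reduces to a simple average compared against a threshold. The sketch below is a minimal illustration, assuming the average is taken over the calendar days between an account’s first and last observed post (inclusive); the report does not specify the measurement window, and the function names are hypothetical. As the text notes, crossing the threshold does not prove automation, since a very active human or a cyborg account could also exceed it.

```python
from datetime import date


def average_daily_posts(post_dates: list[date]) -> float:
    """Posts per day over the observed period, counting first and last day inclusively."""
    if not post_dates:
        return 0.0
    span_days = (max(post_dates) - min(post_dates)).days + 1
    return len(post_dates) / span_days


def exceeds_bot_threshold(post_dates: list[date], threshold: float = 50.0) -> bool:
    """True if the account averages MORE than `threshold` posts a day."""
    return average_daily_posts(post_dates) > threshold


# 120 posts over two days averages 60 a day, above the 50-a-day line.
history = [date(2017, 5, 1)] * 60 + [date(2017, 5, 2)] * 60
print(exceeds_bot_threshold(history))
```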

6) Do they intend to mislead?

The agent may or may not intend to deliberately mislead the target audience.

7) Do they intend to harm?

The agent may or may not intend deliberately to cause harm.

The Message

Messages can be communicated by agents in person (via gossip, speeches, etc.), in text (newspaper articles or pamphlets) or in audio/visual material (images, videos, motion graphics, edited audio clips, memes, etc.). While much of the current discussion about ‘fake news’ has focused on fabricated text articles, mis- and dis-information often appears in visual formats. This is important, as technologies for automatically analysing text are significantly different from those for analysing still and moving imagery.

We offer five questions to ask about a message:

1) How durable is the message?

Some messages are designed to stay relevant and impactful for the long term (throughout an entire war or in perpetuity). Others are designed for the short term (during an election) or just one moment, as in the case of an individual message during a breaking news event.

2) How accurate is the message?

The accuracy of a message is also important to examine. As discussed earlier, mal-information is truthful information used to harm (either by moving private information into the public arena or using people’s affiliations, like their religion, against them). For inaccurate information, there is a scale of accuracy from false connection (a clickbait headline that is mismatched with its article’s content) to 100% fabricated information.

3) Is the message legal?

The message might be illegal, as in the cases of recognised hate speech, intellectual property violations, privacy infringements or harassment. Of course, what messages are legal differs by jurisdiction.

4) Is the message ‘imposter content’, i.e. posing as an official source?

The message may use official branding (e.g., logos) unofficially, or it may steal the name or image of an individual (e.g., a well-known journalist) in order to appear credible.

5) What is the message’s intended target?

The agent has an intended audience in mind (the audience they want to influence) but this is different to the target of the message (those who are being discredited). The target can be an individual (a candidate or a political or business leader), an organisation (a private firm or a government agency), a social group (a race, ethnicity, the elite, etc.) or an entire society.

The Interpreter

Audiences are very rarely passive recipients of information. An ‘audience’ is made up of many individuals, each of whom interprets information according to his or her own socio-cultural status, political positions and personal experiences.

As outlined earlier, understanding the ritualistic aspect of communication is critical for understanding how and why individuals react to messages in different ways. The types of information we consume, and the ways in which we make sense of them, are significantly impacted by our self-identity and the ‘tribes’ we associate with. And, in a world where what we like, comment on and share is visible to our friends, family and colleagues, these ‘social’ and performative forces are more powerful than ever.

Having to accept information that challenges our sense of self can be jarring. Irrespective of how persuasive a message may appear to a neutral observer, it is easier to ignore or resist information that opposes our own worldview. Evidence does suggest that fact-checks tend to nudge individuals’ knowledge in the direction of the correct information, but they do not entirely displace the mis- or dis-information.

This reality complicates our search for solutions to information disorder. If we accept that human brains do not always work rationally, simply disseminating more quality information is not the answer. Solutions must grapple with the social and performance characteristics that have helped make certain fabricated content so popular on Facebook. How, for example, can we make sharing false information publicly shameful and embarrassing? What can we learn from the theories of performativity, particularly in performance and identity management in an online setting that could help us experiment with some potential solutions?

What the ‘interpreter’ can do with a message highlights how the three elements of information disorder should be considered parts of a potentially never-ending cycle. In an era of social media, where everyone is a potential publisher, the interpreter can become the next ‘agent,’ deciding how to share and frame the message for their own networks. Will they show support for the message by liking or commenting on it, or will they decide to share the message? If they do share the message, have they done so with the same intent as the original agent, or will they share it to, for example, show their disagreement?

Figure 7: Questions to ask about each element of an example of information disorder

Figure 8: Using the ‘Three Elements of Information Disorder’ to examine the ‘Pope Endorses Trump’ article

In the next section, we will review literature that helps provide a deeper historical and theoretical understanding of the three elements of information disorder.

1) The Agents: Who are they and what motivates them?

In this section, we explore the role of agents, or those who create, produce and distribute messages. Again, the motivations of the person who creates and posts a meme on an invite-only chat group on Discord could be different from the person who sees the meme on their Facebook feed and shares it with a WhatsApp group.

Official vs Unofficial Actors?

When official actors are involved, the sophistication, funding and potential impact of a message or campaign of systematic messages is far greater. Much has been written about the impact of Russian propaganda on information ecosystems in Europe and further afield. One of the most notable studies is the RAND Corporation’s report from July 2016, entitled “The Russian ‘Firehose of Falsehood’ Propaganda Model,”[47] which identified four characteristics of modern Russian propaganda:

- Voluminous and multi-channeled

- Rapid, continuous and repetitive

- Noncommittal to objective reality

- Inconsistent in its messaging

The EU East StratCom Task Force provides regular analysis of Russian propaganda messaging across the European Union.[48] Its research likewise shows that a key Russian strategy is to spread as many conflicting messages as possible, in order to persuade audiences that there are too many versions of events to find the truth. As the Task Force explains, “Not only (are) big media outlets like Russia Today or Sputnik … deployed, but also seemingly marginal sources, like fringe websites, blog sites and Facebook pages. Trolls are deployed not only to amplify dis-information messages but to bully those… brave enough to oppose them. And the network goes wider: NGOs and ‘GONGOs’ (government organised NGOs); Russian government representatives; and other pro-Kremlin mouthpieces in Europe, often on the far-right and far-left. In all, literally thousands of channels are used to spread pro-Kremlin dis-information, all creating an impression of seemingly independent sources confirming each other’s message.”[49]

In April 2017, Facebook published a paper by three members of its Security team, entitled “Information Operations and Facebook,” that outlines the use of the platform by state actors. They define information operations as “actions taken by organized actors (governments or non-state actors) to distort domestic or foreign political sentiment, most frequently to achieve a strategic and/or geopolitical outcome. These operations can use a combination of methods, such as false news, dis-information or networks of fake accounts aimed at manipulating public opinion (we refer to these as ‘false amplifiers’).”[50]

While Russian propaganda techniques are the current focus of much concern, digital astroturfing campaigns – that is, campaigns that use troll factories, click farms and automated social media accounts – have been used by other state actors for years. A recent report by the Computational Propaganda Research Project tracked this activity across twenty-eight countries, showing the scale of these operations.[51]

Perhaps the most notable of these state actors is China, which has paid people to post millions of fabricated social media posts per year, as part of an effort to “regularly distract the public and change the subject” from any policy-related issues that threaten to incite protests.[52] In countries like Bahrain and Azerbaijan, there is evidence of PR firms creating fake accounts on social media to influence public opinion.[53] In the Philippines, President Rodrigo Duterte’s government has used sophisticated ‘astroturfing’ techniques to target individual journalists and news organizations.[54]

Additionally, in South Africa, an email leak in May 2017 exposed large-scale dis-information efforts by the powerful Gupta family to distract attention from its business dealings with the government. These efforts included paying Twitter users to abuse journalists and spread dis-information, and using bots to amplify fabricated stories.[55]

In contrast to official actors, unofficial actors are those who work alone or with loose networks of citizens, and create false content to harm, make money, or entertain other like-minded people.

Following the outcry about the role of fabricated websites in the 2016 US election, journalists tracked down some of these ‘unofficial’ agents. One was Jestin Coler, who, in an interview with NPR, admitted that his “whole idea from the start was to build a site that could kind of infiltrate the echo chambers of the alt-right, publish blatantly [false] or fictional stories and then… publicly denounce those stories and point out the fact that they were fiction.” As NPR explains, “[Coler] was amazed at how quickly fake news could spread and how easily people believe[d] it.”[56]

How organised are the agents?

Trolls have existed since the internet was invented.[57] Definitions vary, but one aspect is key: trolls provoke emotions by publicly offending their targets. Trolls are humans who post behind a username or handle. Yet, similar to bots, they can amplify dis-information in coordinated ways to evoke conformity among others. What they do better than bots is target those who question the veracity of a piece of information. Trolls work efficiently to silence naysayers in the early stages of dis-information distribution by posting personal attacks to undermine that person’s position in the discussion. And we know that some governments organize agents to pursue specific messaging goals on social media, whether through bots, cyborgs or ‘troll factories.’[58]

In the report entitled ‘Media Manipulation and Disinformation Online’ from Data & Society, Alice Marwick and Rebecca Lewis analyzed ‘Gamergate’, an online campaign of bullying and harassment that took place in late 2014. They identified organized brigades, networked and agile groups, men’s rights activists and conspiracy theorists as exploiting “young men’s rebellion and dislike of ‘political correctness’ to spread white supremacist thought, Islamophobia, and misogyny through irony and knowledge of internet culture”.[59]

Buzzfeed’s Ryan Broderick examined similar, loosely-affiliated groups of Trump supporters in the US who were active during the French election.[60] Using technologies like Discord (a set of invite-only chat rooms), Google Docs, Google Forms and Dropmark (a file-sharing site like Dropbox), they organized ‘Twitter raids’ in which they simultaneously bombarded Twitter accounts they hoped to influence with messages using the same hashtags.

Analysis of mis-information during the French election by Storyful and the Atlantic Council showed that such loose, online networks of actors push messages across different platforms. Anyone wishing to understand their influence needs to monitor several closed and open platforms. For example, in the context of the US election, Trump supporters produced and “audience-tested many anti-Clinton memes in 4Chan and fed the ones with the best responses into the Reddit forum ‘The_Donald.’ The Trump campaign also monitored the forum for material to circulate in more mainstream social media channels.”[61]

Finally, it’s worth mentioning ‘fake tanks’, or partisan bodies disguised as think tanks. As Transparify, the group that provides global ratings on the financial transparency of think tanks, has explained, “[T]hese [fake tanks] range from essentially fictitious entities purposefully set up to promote the very narrow agenda and vested interests of (typically one single) hidden funder at one extreme, to more established organisations that work on multiple policy issues but (occasionally or routinely) compromise their intellectual independence and research integrity in line with multiple funders’ agendas and vested interests…”

Representatives from fake tanks “regularly appear on television, radio, or in newspaper columns to argue for or against certain policies, their credibility bolstered by the abuse of the think tank label and misleading job titles such as ‘senior scholar.’”[62]

What is the motivation of the agent?

Looking into what motivates agents not only provides a deeper understanding of how dis- or mal-information campaigns work, it also points to possible ways to resist them.

It is a mistake to talk generally about agents’ motivations, since they vary in each phase. It is quite likely that publishers (e.g., an editor of a cable news show) or distributors (e.g., a user on a social network) of a message may not even be fully aware of the real purpose behind a piece of dis-information.

As we illustrate above, if a message is partly or entirely false but no harm is intended by its producer, it doesn’t fall under the definition of dis-information. For this reason, it’s important to distinguish dis-information from both mis-information (false, but not intended to harm) and mal-information (true, but intended to harm).

i) Political

Producers of dis-information campaigns, from Russia and elsewhere, sometimes have political motivations. A great deal has been written about Russian dis-information activity in Europe, but it’s worth quoting at length from a statement given by Constanze Stelzenmüller to the US Senate Committee on Intelligence, in June 2017, on the subject of potential interference by Russia in the German Federal elections:

“Three things are new about Russian interference today. Firstly, it appears to be directed not just at Europe’s periphery, or at specific European nations like Germany, but at destabilizing the European project from the inside out: dismantling decades of progress toward building a democratic Europe that is whole, free, and at peace. Secondly, its covert and overt “active measures” are much more diverse, larger-scale, and more technologically sophisticated; they continually adapt and morph in accordance with changing technology and circumstances. Thirdly, by striking at Europe and the United States at the same time, the interference appears to be geared towards undermining the effectiveness and cohesion of the Western alliance as such—and at the legitimacy of the West as a normative force upholding a global order based on universal rules rather than might alone. That said, Russia’s active measures are presumably directed at a domestic audience as much as towards the West: They are designed to show that Europe and the U.S. are no alternative to Putin’s Russia. Life under Putin, the message runs, may be less than perfect; but at least it is stable.”[63]

In terms of Russian dis-information, one of the best sources of information is the EU East StratCom Task Force,[64] which has a site called ‘euvsdisinfo.eu’ that provides regular updates about Russian dis-information campaigns across Europe. As they explain, “the dis-information campaign is a non-military measure for achieving political goals. Russian authorities are explicit about this, for example through the infamous Gerasimov doctrine and through statements by top Russian generals that the use of ‘false data’ and ‘destabilising propaganda’ are legitimate tools in their tool kit.” Elsewhere, the Task Force wrote, “The Russian Minister of Defence describes information as ‘another type of armed forces.’”[65]

One critical aspect to understanding Russian dis-information, as noted by information warfare expert Molly McKew, is that “information operations aim to mobilize actions and behavioral change. It isn’t just information.”[66] As the recent revelations about Russian operatives purchasing dark ads on Facebook[67] and organizing protests via Facebook’s Events feature[68] show, the aim of these acts is to create division along socio-cultural lines.

ii) Financial

Some of those who produce or distribute dis-information may do so merely for financial gain, as in the case of PR firms and fabricated news outlets. Indeed, entire businesses might be based on dis-information campaigns.[69]

Fabricated ‘news’ websites created solely for profit have existed for years. Craig Silverman documented some of the most prolific in the US in his 2015 report[70] for the Tow Center for Digital Journalism. However, the US election shone a light on how many of these sites are located overseas, but aimed at US audiences. Buzzfeed was one of the first news organisations to detail the phenomenon of English-language websites created by Macedonians to capitalise on US readers’ enthusiasm for sensationalist stories.[71] The small city of Veles in Macedonia produced “an enterprise of cool, pure amorality, free not only of ideology but of any concern or feeling about the substance of the election. These Macedonians on Facebook didn’t care if Trump won or lost the White House. They only wanted pocket money to pay for things.”[72]

This example from Veles also underscores the difficulty of assessing the true motivation of any particular agent. The dominant narrative has been that these young people were motivated by financial gain. We can assume this is true, as they undoubtedly made money, but we are unlikely ever to know whether there was any coordinated attempt to encourage these teenagers to start this type of work in the first place.

‘Fake news’ websites make money through advertising. While Google and Facebook have taken steps to prevent these sites from getting money through their ad networks, there are still many other networks through which site owners can make money.

French startup Storyzy alerts brands when their advertisements appear on dubious websites. In an August 2017 write-up of its work, Frederic Filloux explains that over 600 brands had advertisements on questionable sites. When the brands were approached for comment, Filloux concluded, few cared, as long as their “overall return on investment was fine.” They certainly did not seem to consider the ethical implications of helping to fuel a ‘vast network of mis-information.’[73]

iii) Social and Psychological

While much of the debate around dis-information has focused on political and financial motivations, we argue that understanding the potential social and psychological motivations for creating dis-information is also worth exploring.

For example, consider the motivation to simply cause trouble or entertain. There have always been small numbers of people trying to ‘hoax’ the news media—from Tommaso Debenedetti, who frequently uses fake Twitter accounts to announce the death of high profile people,[74] to the person behind the ‘Marie Christmas’ account, who fooled CNN into thinking he or she was a witness to the San Bernardino shooting.[75]

Some share mis-information as a joke, only to find that people take it seriously. Most recently, during Hurricane Harvey, Jason Michael McCann tweeted the old, already-debunked image from Hurricane Sandy of a shark swimming on a flooded highway. When Craig Silverman reached out to him for comment, he explained, “Of course I knew it was fake, it was part of the reason I shared the bloomin’ thing… What I had expected was to tweet that and have my 1,300 followers in Scotland to laugh at it.”

On more serious matters, the previously-mentioned research by Marwick and Lewis[76] takes a deep dive into alt-right communities and discusses the importance of considering their shared identity in understanding their actions online.

Examining the audiences of hyper-partisan sites, such as Occupy Democrats in the US and The Canary in the UK, we can also see the influence of political tribalism and identity. These types of sites do not peddle 100% fabricated content, but they are very successful in using emotive (and some would argue misleading) headlines, images and captions, which are often the only part of an article that people see on platforms like Facebook, to get their audiences to share their messages.

In August 2017, Silverman and his colleagues at BuzzFeed published the most comprehensive study to date of the growing universe of US-focused, hyper-partisan websites and Facebook pages. They revealed that, in 2016 alone, at least 187 new websites were launched, and that the candidacy and election of Donald Trump “unleashed a golden age of aggressive, divisive political content that reaches a massive amount of people on Facebook.”[77]

Is the agent using automation?

Currently, machines are poor at creating dis-information, but they can efficiently publish and distribute it. Recent research by Shao and colleagues concluded that “[a]ccounts that actively spread mis-information are significantly more likely to be bots.” They also found that bots are “particularly active in the early spreading phases of viral claims, and tend to target influential users.”[78]

Bots can manipulate majority-oriented platform algorithms to gain vast visibility and can create conformity among human agents who would then further distribute their messages.[79] Many bots are designed to amplify the reach of dis-information[80] and exploit the vulnerabilities that stem from our cognitive and social biases. They also create the illusion that several individuals have independently come to endorse the same piece of information.[81]

As a recent report on computational amplification by Gu et al. concluded: “A properly designed propaganda campaign is designed to have the appearance of peer pressure—bots pretending to be humans, guru accounts that have acquired a positive reputation in social media circles—these can make a propaganda campaign-planted story appear to be more popular than it actually is.”[82] Despite the platforms’ public commitment to stifle automated accounts, bots continue to amplify certain messages, hashtags or accounts, creating the appearance of certain perspectives being popular and, by implication, true.[83]

A recent report by NATO StratCom entitled ‘Robotrolling’ found that two in three Twitter accounts posting in Russian about the NATO presence in the Baltics and Poland were bots. It also found that the density of bots was two to three times greater among Russian-language accounts than among English-language accounts. The authors conclude that foreign-language sources on social networks are policed and moderated much less effectively than English-language sources.[84]

There may also be a black market for social bots. Ferrara found that many bots that supported Trump in the 2016 election also engaged with the #MacronLeaks trend, but made few posts in between.[85]

Important research has been done on bots recently, particularly on their definition, scale and influence. The most comprehensive body of research has been carried out by the Oxford Internet Institute’s Computational Propaganda Research Project.[86] It defines high-frequency accounts as those that tweet more than 50 times per day on average. While these accounts are often bots, some humans also tweet that frequently. There are also cyborg accounts,[87] which are jointly operated by people and software. As Nic Dias argues, looking at an account’s posting frequency can be more useful than fixating on whether an account is fake or not.[88]
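The frequency-based definition above is simple arithmetic, and a short sketch may make it concrete. The Python below is a minimal illustration, not the Computational Propaganda Research Project’s actual method: the function names, the inclusive date window and the idea of supplying a tweet count for that window are all our own assumptions. Note that it only measures posting frequency; as argued above, a high-frequency account may still be run by a human or a cyborg.

```python
from datetime import date

# Threshold taken from the definition cited above: more than 50 tweets
# per day on average. Everything else here is a hypothetical illustration.
HIGH_FREQUENCY_THRESHOLD = 50.0


def average_daily_tweets(tweet_count: int, first_day: date, last_day: date) -> float:
    """Average tweets per day over an inclusive observation window (assumed)."""
    days = (last_day - first_day).days + 1  # count both the first and last day
    if days <= 0:
        raise ValueError("last_day must not be before first_day")
    return tweet_count / days


def is_high_frequency(tweet_count: int, first_day: date, last_day: date) -> bool:
    """Flag an account as high-frequency. This says nothing about bot vs. human."""
    return average_daily_tweets(tweet_count, first_day, last_day) > HIGH_FREQUENCY_THRESHOLD


# Usage: 1,600 tweets over 30 days is about 53 per day, so it is flagged;
# 1,500 tweets over 30 days is exactly 50 per day, which is not "more than 50".
print(is_high_frequency(1600, date(2017, 8, 1), date(2017, 8, 30)))
print(is_high_frequency(1500, date(2017, 8, 1), date(2017, 8, 30)))
```

Using a strict “greater than” comparison follows the wording “more than 50 times per day”; a researcher applying a different cut-off or averaging window would simply change the constant or the date range.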

There are certainly highly partisan individuals whose accounts could be mistaken for bots. A Politico article in August 2017 described how tens of thousands of tweets per day continue to emanate from a very human, grassroots organisation. Using Group Direct Messages on Twitter, its members organize people into “invite-only rooms with names like ‘Patriots United’ and ‘Trump Train.’ Many rooms have accompanying hashtags to track members’ tweets as they propagate, and each can accommodate as many as 50 people.”[89]

Returning to our discussion around agents’ motivations, these examples show the power of social and psychological motivations for creating and disseminating mis- and dis-information. Being a part of the tribe is a powerful, motivating force.

2) The Messages: What format do they take?

In the previous section we examined the different characteristics of the ‘agents’, those who are involved in creating, producing or disseminating information disorder. We now turn our attention to the messages themselves.

There are four characteristics that make a message more appealing and thus more likely to be consumed, processed and shared widely:

- It provokes an emotional response.

- It has a powerful visual component.

- It has a strong narrative.

- It is repeated.

Those who create information campaigns, true or false, understand the power of this formula. Identifying these characteristics helps us recognize which dis-information campaigns are most likely to succeed, and informs our attempts to counter dis-information (see more in Part Three).

Verbal, text or audio?

While much of the conversation about mis- and dis-information has focused on the role of the internet in propagating messages, we must not forget that information also travels by word of mouth. The offline and online worlds are not separate, although the difficulty of studying different forms of communication simultaneously makes it easier for researchers to think about these elements separately.

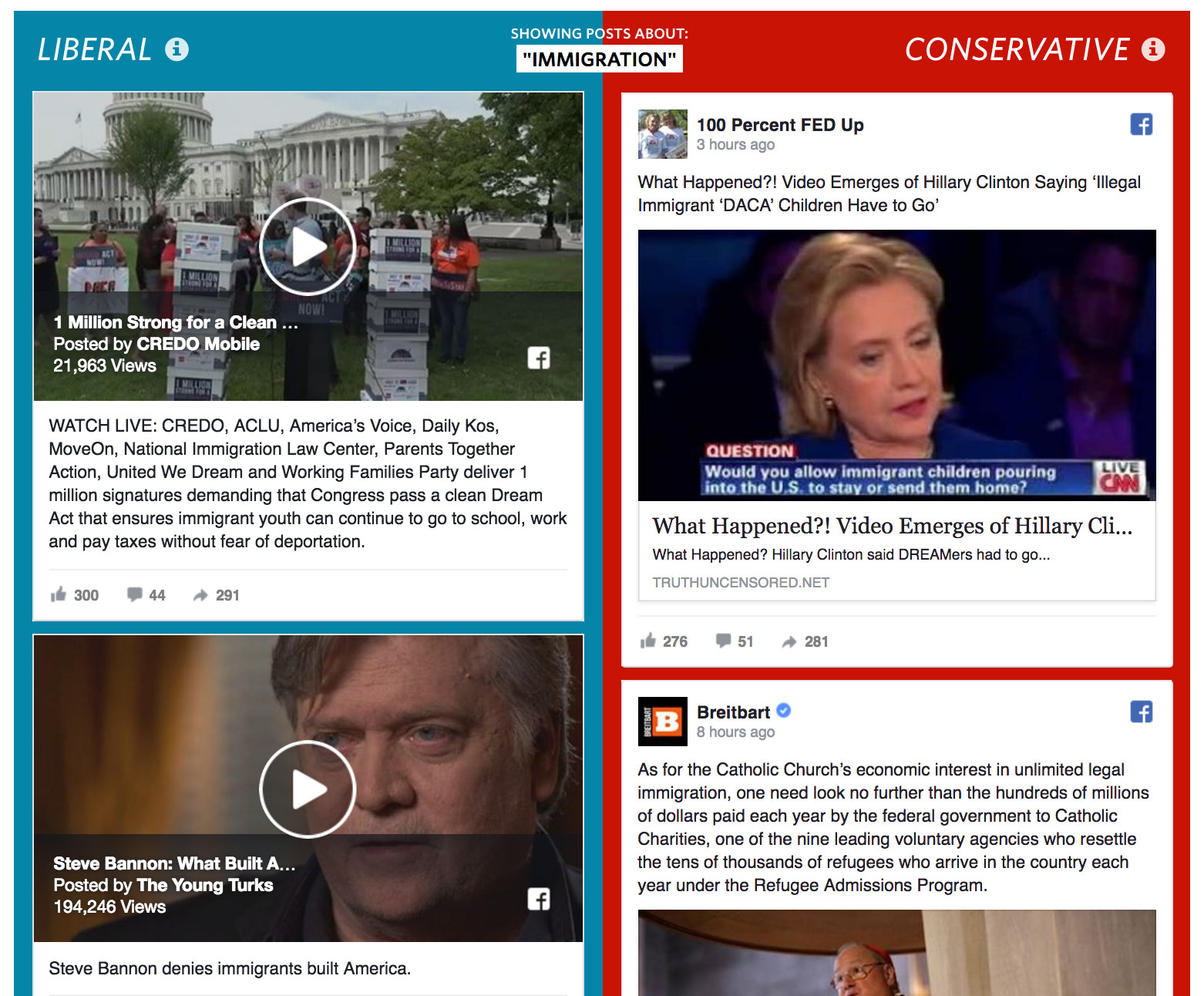

The ‘fake news’ conversation has also focused on text-based, fabricated news websites. As Nausicaa Renner argues, “the fake news conversation has taken place in the realm of words, but that’s missing a big part of the story. Much of the content that circulates on Facebook are images, often memes. They’re not attached to an article, and there’s often no way to trace their source. And while Facebook’s algorithm is notoriously elusive, it seems to favor images and video over text. As such, images have the potential to reach more readers than articles — whether fake, real, non-partisan or hyper-partisan.”[90]

Certainly, in the election-based projects First Draft led in France and the U.K., visuals were overwhelmingly the most shared, and the most difficult to debunk, of all the misleading content. In both cases, while there were almost no examples of the kind of fabricated news sites seen in the US, there were large numbers of highly shareable images, infographics and memes (i.e., compelling images with large block text layered on top).[91]

Figure 9: An example of a ‘meme’ shared widely during the UK election

As scholarship on visuals[92] has shown, the way we understand imagery is fundamentally different from how we understand text.[93] Our brains process images at an incredible speed compared with text.[94] As a result, our critical reasoning skills are less likely to engage with what we’re seeing.

Technology that could identify manipulated or fabricated images lags behind technology for parsing and analysing text. While Google’s Reverse Image Search engine (see also TinEye and Yandex) is a good starting point for identifying when images have circulated before, we still don’t have publicly available reverse-video search engines or OCR (Optical Character Recognition) tools capable of reading the text on memes in a timely manner. We need more sophisticated, widely accessible tools to help identify problematic visual content.

Over the next few years, we will certainly see the development of artificial intelligence technologies to create as well as identify dis-information. (Simply understood, artificial intelligence is the ability of computers to undertake tasks that previously required human brains, such as speech recognition or visual identification.) It is critical that the engineers who develop these new products, tools and platforms receive ethical training on the unintended consequences of the algorithms they write.

Upon whom are the messages focused?

While agents have particular audiences in mind when they create dis-information, the subject targeted by the message is often a different group. Dis-information often deliberately highlights differences and divisions, whether between supporters of different political parties, nationalities, races, ethnicities, religious groups, socio-economic classes or castes. As Greenhill argues, these types of messages enable discriminatory and inflammatory ideas to enter public discourse and to be treated as fact. Once embedded, such ideas can in turn be used to create scapegoats, normalize prejudices, harden us-versus-them mentalities and, in extreme cases, even catalyze and justify violence.[95]

Most discussion around dis-information in the US and European contexts has focused on political messages, which, while worrying from a democratic perspective, tend not to incite violence. However, in other parts of the world, dis-information directed at people because of their religious, ethnic or racial identities has led to violence. As Samantha Stanley explained, “perhaps the most obvious example of how mis-information can lead to violent offline action is the two-day riots in Myanmar’s second largest city, Mandalay, in July 2014. Following an unsubstantiated rumor posted on Facebook that a Muslim tea shop owner raped a Buddhist employee, a mob of almost 500 people wreaked havoc on the city and incited lingering fear amongst its Muslim citizens. Two people were killed during the riot, one Buddhist and one Muslim.”[96]

3) Interpreters: How do they make sense of the messages?

As Stuart Hall explained in his seminal work on reception theory[97], messages are encoded by the producer, but then decoded by individual audience members in one of three ways:

- Hegemonic. Accepting the message as it was encoded.

- Negotiated. Accepting aspects of the message, but not all of it.

- Oppositional. Rejecting the message as it was encoded.

In this section, we outline the work of key cultural and social theorists who have attempted to explain how audiences make sense of messages.

George Lakoff sees rationality and emotions as being tied together to the extent that, as human beings, we cannot think without emotions. The emotions in our brains are structured around certain metaphors, narratives and frames. They help us make sense of things, and, without them, we would become disoriented. We would not know what or how to think.

Lakoff distinguishes two different kinds of reason: ‘false reason’ and ‘real reason.’[98] False reason, he says, ‘sees reason as fully conscious, as literal, disembodied, yet somehow fitting the world directly, and working not via frame-based, metaphorical, narrative and emotional logic, but via the logic of logicians alone.’ Real reason, by contrast, is unconscious thought that ‘arises from embodied metaphors.’[99] He argues that false reason does not work in contemporary politics, as we have become increasingly emotional about our political affiliations.

Understanding how our brains make sense of language is also relevant here. According to Lakoff, every word is neurally connected to a particular frame, which is in turn linked with other frames in a moral system. These ‘moral systems’ are subconscious, automatic and acquired through repetition. As the language of conservative morality, for example, is repeated, its frames, and in turn the conservative system of thought, are activated and strengthened unconsciously and automatically. Thus, conservative media and Republican messaging work unconsciously to activate and reinforce the conservative moral system, making it harder for fact-checks to penetrate.[100]

Considering Trump’s success, D’Ancona recently argued, “He communicated a brutal empathy to [his supporters], rooted not in statistics, empiricism or meticulously acquired information, but an uninhibited talent for rage, impatience and the attribution of blame.”[101] Ultimately, news consumers “face a tradeoff: they have a private incentive to consume precise and unbiased news, but they also receive psychological utility from confirmatory news.”[102]

As we will discuss in Part Two, the emotional allure of situating ourselves within our filter bubbles and having our worldviews supported and reinforced by ‘confirmatory news’ is incredibly powerful. Addressing this will require a mixture of technological and educational responses and, ultimately, a psychological shift whereby one-sided media diets are deemed socially unacceptable.

Communication as Ritual

When town criers announced news to crowds, runners read newspapers aloud in coffeehouses and families listened to or watched the evening news together, news consumption was largely a collective experience. However, news consumption has slowly evolved into an individual behavior with the emergence of portable radios and television and, more recently, the ubiquitous adoption of laptops, tablets and smartphones.

But while we might physically consume the news alone, what we choose to consume is increasingly visible because of social media. The posts that we like or comment on and the articles, videos or podcast episodes we share are all public. Borrowing from Erving Goffman’s metaphor of life as theatre, when we use social media to share news, we invariably become performers.[103] Whatever we like or share is often visible to our network of friends, family and acquaintances, and it affects their perceptions of us.[104]

If social media is a stage, our behaviour is a performance and our circle of friends or followers is our audience. Goffman argues that our goal in this performance is to manage our audience’s perception of us.[105] Therefore, we tend to like or share things on social media that our friends or followers would expect us to like or share; in other words, what we would normally like or share.[106]

Similarly, as Maffesoli argued in his 1996 book The Time of the Tribes,[107] to understand someone’s behavior, one must consider the sociological implications of the many different, small and temporary groups that he or she belongs to at any given time of day. Maffesoli’s writings aptly describe the reality of users who navigate different online groups throughout the day, deciding what information to post or share with different ‘tribes’ online and off.